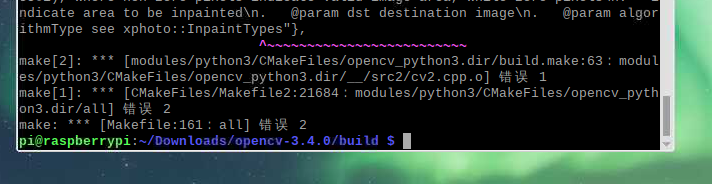

1. The build fails at 99%.

2. The result is shown in the image above.

3. The error: `make` fails and exits with:

make[2]: *** [modules/python3/CMakeFiles/opencv_python3.dir/build.make:56: modules/python3/CMakeFiles/opencv_python3.dir/__/src2/cv2.cpp.o] Error 1

make[1]: *** [CMakeFiles/Makefile2:21149: modules/python3/CMakeFiles/opencv_python3.dir/all] Error 2

make: *** [Makefile:138: all] Error
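The three `make` lines above come from recursive make. Each parent make prints its own `Error` after a child fails, so the line with the highest `make[N]` level names the file that actually failed to build: here `cv2.cpp.o` in the `opencv_python3` target. The compiler's own diagnostic (the line containing `error:`) appears earlier in the full build output. Below is a minimal Python sketch that picks out that innermost failing target from a log, assuming GNU make's `make[N]: *** [file:line: target] Error N` format; the function name `innermost_failure` is hypothetical and not part of any tool.

```python
import re

# GNU make reports a failed recipe as:
#   make[LEVEL]: *** [MAKEFILE:LINE: TARGET] Error CODE
# The top-level make omits "[LEVEL]"; the code may be missing in a truncated log.
MAKE_ERR = re.compile(
    r"^make(?:\[(?P<level>\d+)\])?: \*\*\* "
    r"\[(?P<makefile>[^:\]]+):(?P<line>\d+): (?P<target>[^\]]+)\] Error\s*(?P<code>\d*)"
)


def innermost_failure(log: str):
    """Return (level, target) for the deepest make error line, or None if none found."""
    best = None
    for raw in log.splitlines():
        m = MAKE_ERR.match(raw.strip())
        if not m:
            continue
        level = int(m.group("level") or 0)  # top-level make counts as level 0
        if best is None or level > best[0]:
            best = (level, m.group("target"))
    return best


# The three error lines from the question, used as sample input.
SAMPLE_LOG = """\
make[2]: *** [modules/python3/CMakeFiles/opencv_python3.dir/build.make:56: modules/python3/CMakeFiles/opencv_python3.dir/__/src2/cv2.cpp.o] Error 1
make[1]: *** [CMakeFiles/Makefile2:21149: modules/python3/CMakeFiles/opencv_python3.dir/all] Error 2
make: *** [Makefile:138: all] Error
"""

if __name__ == "__main__":
    print(innermost_failure(SAMPLE_LOG))
```

Running it on the full build log (for example one saved with `make 2>&1 | tee build.log`) narrows the search to the object whose compiler output should be read.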

Problem installing OpenCV for Python 3.4.0