When I scrape table data from a web page with Python, `requests.get` does not return the page content.

The URL I am scraping is: http://data.10jqka.com.cn/rank/cxg/board/4/field/stockcode/order/desc/page/2/ajax/1/free/1/

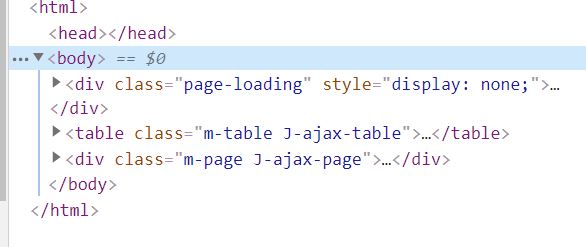

This is the Elements panel in the browser's developer tools. My script:

from io import StringIO

import pandas as pd

import requests

from lxml import etree

url = 'http://data.10jqka.com.cn/rank/cxg/board/4/field/stockcode/order/desc/page/2/ajax/1/free/1/'

# url1 = 'http://data.10jqka.com.cn/rank/cxg/'

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.106 Safari/537.36'}

res = requests.get(url, headers=headers)

res_elements = etree.HTML(res.text)

# Look for the table directly under <body>; this returns an empty list if no table is present

table = res_elements.xpath('/html/body/table')

print(table)

# Serialize the first matched <table> element back to an HTML string

table = etree.tostring(table[0], encoding='utf-8').decode()

df = pd.read_html(StringIO(table), header=0)[0]

results = df.to_dict('records')  # convert to a list of dicts, one per row

df.to_csv("std.csv", index=False)

The content of `res.text` is as follows (it does not contain the table data):

'<html><body>\n <script type="text/javascript" src="//s.thsi.cn/js/chameleon/chameleon.min.1582008.js"></script> <script src="//s.thsi.cn/js/chameleon/chameleon.min.1582008.js" type="text/javascript"></script>\n <script language="javascript" type="text/javascript">\n window.location.href="http://data.10jqka.com.cn/rank/cxg/board/4/field/stockcode/order/desc/page/2/ajax/1/free/1/";\n </script>\n </body></html>\n'
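To make the symptom easier to check, here is a minimal sketch that inspects a response body like the one above. It only confirms two things visible in that output: there is no `<table>` tag, and the page asks the browser to reload the same URL through a `window.location.href` assignment. The helper names `find_js_redirect` and `has_table` and the shortened `SAMPLE` string are my own illustration, not part of any library. Why the site serves this stub is not shown in the output; a plausible guess (an assumption, not verified here) is that the `chameleon` script sets something in the browser that the server checks on the reload.

```python
import re

# The response body quoted above, shortened to the relevant parts.
SAMPLE = (
    '<html><body>\n'
    ' <script type="text/javascript" '
    'src="//s.thsi.cn/js/chameleon/chameleon.min.1582008.js"></script>\n'
    ' <script language="javascript" type="text/javascript">\n'
    ' window.location.href="http://data.10jqka.com.cn/rank/cxg/board/4/'
    'field/stockcode/order/desc/page/2/ajax/1/free/1/";\n'
    ' </script>\n'
    ' </body></html>\n'
)

# Matches a script-driven redirect such as: window.location.href="...";
REDIRECT_RE = re.compile(r'window\.location\.href\s*=\s*"([^"]+)"')


def find_js_redirect(html):
    """Return the target of a window.location.href assignment, or None."""
    match = REDIRECT_RE.search(html)
    return match.group(1) if match else None


def has_table(html):
    """Rough check for a <table> tag anywhere in the markup."""
    return re.search(r'<table\b', html, re.IGNORECASE) is not None


if __name__ == "__main__":
    print("has table:", has_table(SAMPLE))
    print("redirect target:", find_js_redirect(SAMPLE))
```

Calling `find_js_redirect(res.text)` right after the request would show whether the script received this stub instead of the table. Since `requests` does not execute JavaScript, the reload that a browser performs never happens in the script, which would be consistent with the Elements panel showing a table while `res.text` does not.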