Private hhook As Long

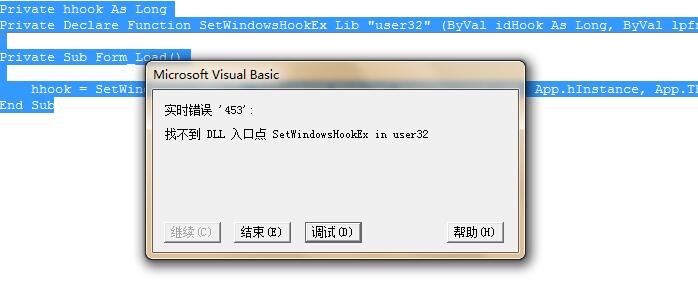

Private Declare Function SetWindowsHookEx Lib "user32" (ByVal idHook As Long, ByVal lpfn As Long, ByVal hmod As Long, ByVal dwThreadId As Long) As Long

Private Sub Form_Load()

hhook = SetWindowsHookEx(2, AddressOf Module1.AddControlHookA, App.hInstance, App.ThreadID)

End Sub

Does 64-bit Windows 7 not support this method? How should I do this on 64-bit Windows 7?
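The post does not show `Module1.AddControlHookA`. For reference, here is a minimal sketch of what such a procedure might look like in a standard module, assuming it is meant as a `WH_KEYBOARD` hook procedure. The body is hypothetical: it does no processing and simply passes every event on to the next hook.

```vb
' Module1.bas (standard module; AddressOf only works with procedures in standard modules)
Option Explicit

Private Declare Function CallNextHookEx Lib "user32" (ByVal hhk As Long, ByVal nCode As Long, ByVal wParam As Long, ByVal lParam As Long) As Long

' Hypothetical keyboard hook procedure with the KeyboardProc signature:
' wParam = virtual-key code, lParam = repeat count, scan code and transition flags
Public Function AddControlHookA(ByVal nCode As Long, ByVal wParam As Long, ByVal lParam As Long) As Long
    If nCode >= 0 Then
        ' Inspect wParam / lParam here (placeholder: nothing is done in this sketch)
    End If
    ' Always pass the event on; the hhk argument of CallNextHookEx is ignored by Windows
    AddControlHookA = CallNextHookEx(0&, nCode, wParam, lParam)
End Function
```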