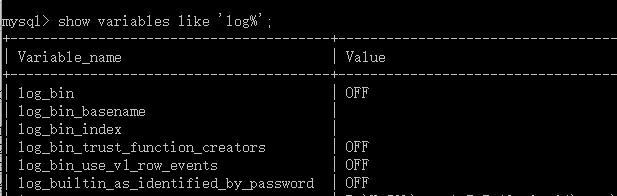

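I installed the ZIP-archive (unzip, no installer) version of MySQL 5.7.19. The server starts normally and I can log in to mysql, but I have never been able to get logging enabled.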

Below is the content of my my.ini. I'd be grateful if anyone could point me in the right direction:

```ini
# For advice on how to change settings please see
# http://dev.mysql.com/doc/refman/5.7/en/server-configuration-defaults.html
# *** DO NOT EDIT THIS FILE. It's a template which will be copied to the
# *** default location during install, and will be replaced if you
# *** upgrade to a newer version of MySQL.

[client]
default-character-set=utf8

[mysqld]
port=3306
log-bin =mysql-bin
default-storage-engine=INNODB
character-set-server=utf8
collation-server=utf8_general_ci
basedir ="E:\MySQL\mysql-5.7.19-winx64/"
datadir ="E:\MySQL\mysql-5.7.19-winx64/data/"
tmpdir ="E:\MySQL\mysql-5.7.19-winx64/data/"
socket ="E:\MySQL\mysql-5.7.19-winx64/data/mysql.sock"
log-error="E:\MySQL\mysql-5.7.19-winx64/data/mysql_error.log"
server-id =1
#skip-locking
max_connections=100
table_open_cache=256
query_cache_size=1M
tmp_table_size=32M
thread_cache_size=8
innodb_data_home_dir="E:\MySQL\mysql-5.7.19-winx64/data/"
innodb_flush_log_at_trx_commit =1
innodb_log_buffer_size=128M
innodb_buffer_pool_size=128M
innodb_log_file_size=10M
innodb_thread_concurrency=16
innodb-autoextend-increment=1000
join_buffer_size = 128M
sort_buffer_size = 32M
read_rnd_buffer_size = 32M
max_allowed_packet = 32M
explicit_defaults_for_timestamp=true
sql-mode="STRICT_TRANS_TABLES,NO_AUTO_CREATE_USER,NO_ENGINE_SUBSTITUTION"
skip-grant-tables
#sql_mode=NO_ENGINE_SUBSTITUTION,STRICT_TRANS_TABLES
```
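To double-check that the logging options really sit in the `[mysqld]` group of this file (the group the server reads), a quick sketch like the one below can parse the file text and list them. It is only an approximation: it uses Python's `configparser`, not MySQL's own option-file parser, so it ignores MySQL-specific features such as `!include` directives and backslash escape sequences. `binlog_settings` is a hypothetical helper written for illustration, and it only inspects the file you pass in; it cannot tell you which option file the running server actually loaded.

```python
import configparser


def binlog_settings(ini_text: str) -> dict:
    """Hypothetical helper: return log-related options from the [mysqld] group.

    Approximation only; configparser does not implement MySQL's full
    option-file rules (e.g. !include, escape sequences).
    """
    # allow_no_value: option files may contain bare flags like skip-grant-tables.
    # interpolation=None: treat '%' literally instead of as a substitution marker.
    parser = configparser.ConfigParser(allow_no_value=True, interpolation=None)
    # MySQL treats '-' and '_' in option names as equivalent, so normalize them.
    parser.optionxform = lambda name: name.lower().replace("_", "-")
    parser.read_string(ini_text)

    if not parser.has_section("mysqld"):
        return {}

    section = parser["mysqld"]
    result = {}
    for key in ("log-bin", "server-id", "log-error"):
        if key in section:
            value = section.get(key)
            # Strip surrounding double quotes, e.g. log-error="E:/.../err.log".
            result[key] = value.strip('"') if value is not None else None
    return result


if __name__ == "__main__":
    sample = """
[mysqld]
port=3306
log-bin =mysql-bin
server-id =1
skip-grant-tables
"""
    print(binlog_settings(sample))
    # {'log-bin': 'mysql-bin', 'server-id': '1'}
```

If `log-bin` shows up here but the server still reports binary logging as off, the file is probably well-formed and the more likely suspect is that the server is reading a different option file.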