-*- coding: utf-8 -*-

import urllib, sys, os, re

from selenium import webdriver

from bs4 import BeautifulSoup

reload(sys)

sys.setdefaultencoding('utf-8')

def mkdir(path):

if not os.path.exists(path):

os.makedirs(path)

def save(driver, html, parentpath):

driver.get(html)

content = driver.page_source

print content

soup = BeautifulSoup(content, 'lxml')

alist = soup.select("li.tit")

print alist

for i in alist:

path = parentpath

a = i.select('a')[0]

text = a.text

title = text.strip().replace(" ", "")

path = path + "/" + title # (文件夹名字)

try:

mkdir(path)

except Exception, e:

print '创建文件夹出错'

continue

url = 'http://www.cqzj.gov.cn/' + i.attrs['href']

driver.get(url)

content = driver.page_source

soup = BeautifulSoup(content, 'lxml')

title = soup.select('span[id="Contentontrol_lblTitle"]')[0].text.replace("\n", "")

#title1 = title + "1"

# print title

# time = soup.select('td.articletddate3')[0].text.replace("\n", "")

# print time

content = soup.select('div.con')[0].text.strip()

try:

fileName = (path + '/' + title + '.txt').replace("\n", "").replace(" ", "").replace("<", "").replace(">",

"").replace(

"《", "").replace("》", "").replace("|", "").decode("utf-8")

#filename1 = (path + '/' + title1 + '.txt').replace("\n", "").replace(" ", "").replace("<", "").replace(

# ">","").replace("《", "").replace("》", "").replace("|", "").decode("utf-8")

file = open(fileName, 'w')

file.write(title + '\n\n' + content)

file.flush()

file.close()

except Exception, e:

print 0

continue

for i in alist:

downlName = i.text

href = a.attrs['href']

if href != '' and i.text != '':

href="http://www.cqzj.gov.cn/"+a.attrs['href'][3:]

fm = href.rfind('.')

downlName = re.sub('.*/|\..*', '', downlName) + href[fm:]

name = path + '/' + downlName

try:

urllib.urlretrieve(href, unicode(name))

except Exception, e:

continue

def getMaxPage(content):

soup = BeautifulSoup(content)

pagenum = soup.select("td")

pagenum = 23

return pagenum

root_html = 'http://www.cqzj.gov.cn/ZJ_Page/List.aspx?levelid=324&dh=1'

driver = webdriver.PhantomJS(executable_path='E:/work/PyCharm/phantomjs-2.1.1-windows/bin/phantomjs.exe') # 改这里

driver.get(root_html)

content = driver.page_source

page_num = getMaxPage(content)

htmls = [root_html]

for html in htmls:

print html

save(driver, html, 'D:/数据采集/重庆/质检') # 改这里

跑出来结果 爬不到我需要的列表 总是为空

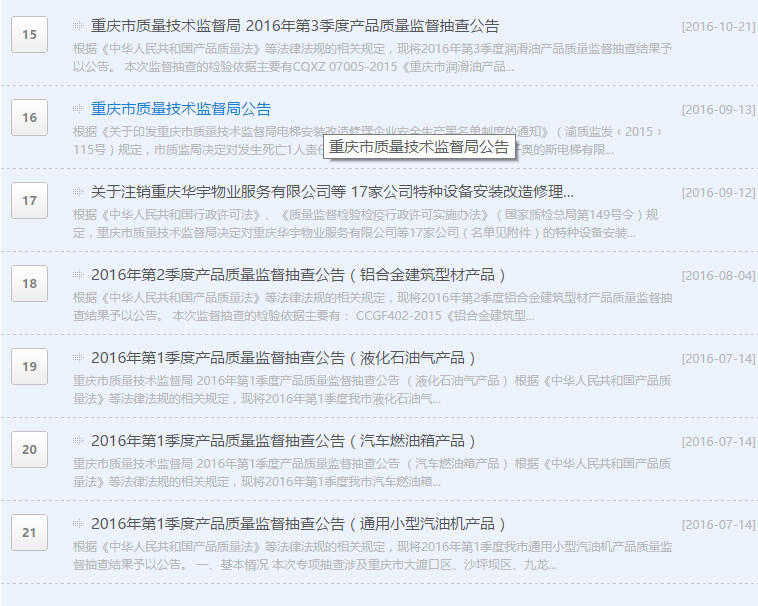

这些我爬不到

我也不太会这个 程序是别人给的 我改了改哪些路径 求大神指点