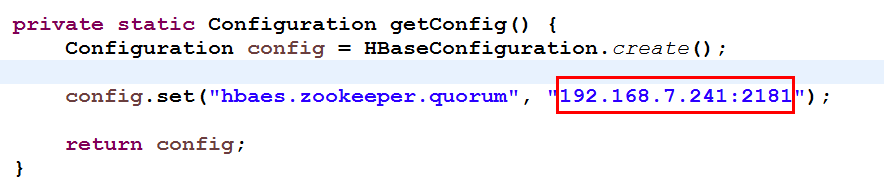

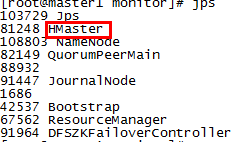

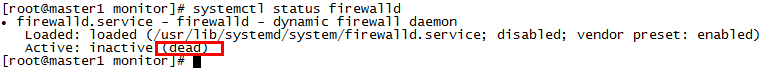

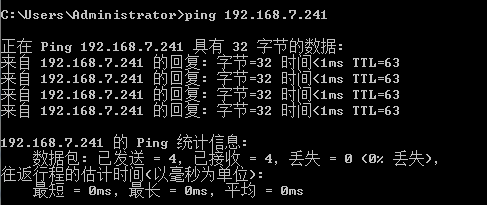

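The firewall is turned off on both the Linux and the Windows machine, the hosts can ping each other, and port 2181 on the HMaster host is listening, but the client still cannot connect to ZooKeeper.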

Screenshot below:

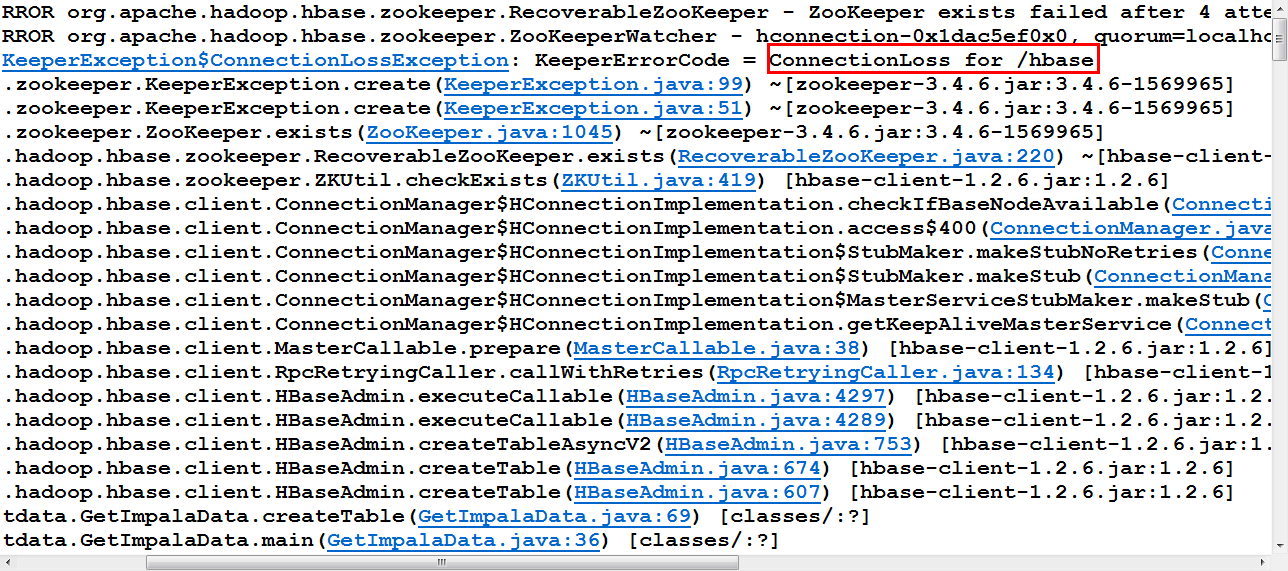

KeeperErrorCode=ConnectionLoss for /hbase/hbaseid


3 answers

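sanjer 2018-03-17 05:28

The iptables firewall configuration needs a rule that allows port 2181 (or turn the firewall off to test). If the host is an Alibaba Cloud instance, you also have to add port 2181 to the instance's security group in the Alibaba Cloud console.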

For opening ports on an Alibaba Cloud host, see: https://jingyan.baidu.com/article/03b2f78c31bdea5ea237ae88.html
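A successful ping only shows that ICMP gets through. It does not show that TCP port 2181 is reachable from the client. One way to check that directly from the Windows side is a plain TCP connect. The following is a minimal sketch in Python, not something from the original post: the helper name `can_connect` and the host name `hmaster-host` are placeholders chosen for illustration.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the host name and performs the TCP handshake;
        # the `with` block closes the socket again right away.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


# Example usage (replace the placeholder with the real ZooKeeper host):
#   print(can_connect("hmaster-host", 2181))
```

If this returns `False` from the client while port 2181 is listening on the server, something between the two machines is probably blocking the port, such as an iptables rule or a cloud security group as described above.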