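I've just started learning Hadoop and have run into a problem with Sqoop data migration: Sqoop on Linux cannot connect to a MySQL database running on Windows, and none of the many fixes I found online have worked.

The Linux system is CentOS 6.4 with Hadoop 2.4.1 and Sqoop 1.4.7; Windows is running MySQL 5.7.

Here is the error output: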

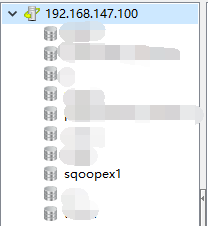

[root@itcast01 bin]# ./sqoop list-tables --connect jdbc:mysql://192.168.147.100:3306/sqoopex1 --username root -password 1234

18/07/12 16:17:28 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

18/07/12 16:17:28 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/07/12 16:17:28 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

18/07/12 16:18:31 ERROR manager.CatalogQueryManager: Failed to list tables

com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet successfully received from the server was 1,531,383,511,816 milliseconds ago. The last packet sent successfully to the server was 0 milliseconds ago.

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:408)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:406)

at com.mysql.jdbc.SQLError.createCommunicationsException(SQLError.java:1074)

at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:2214)

at com.mysql.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:773)

at com.mysql.jdbc.JDBC4Connection.<init>(JDBC4Connection.java:46)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:408)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:406)

at com.mysql.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:352)

at com.mysql.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:282)

at java.sql.DriverManager.getConnection(DriverManager.java:664)

at java.sql.DriverManager.getConnection(DriverManager.java:247)

at org.apache.sqoop.manager.SqlManager.makeConnection(SqlManager.java:904)

at org.apache.sqoop.manager.GenericJdbcManager.getConnection(GenericJdbcManager.java:59)

at org.apache.sqoop.manager.CatalogQueryManager.listTables(CatalogQueryManager.java:102)

at org.apache.sqoop.tool.ListTablesTool.run(ListTablesTool.java:49)

at org.apache.sqoop.Sqoop.run(Sqoop.java:147)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243)

at org.apache.sqoop.Sqoop.main(Sqoop.java:252)

Caused by: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet successfully received from the server was 1,531,383,511,809 milliseconds ago. The last packet sent successfully to the server was 0 milliseconds ago.

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:408)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:406)

at com.mysql.jdbc.SQLError.createCommunicationsException(SQLError.java:1074)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:341)

at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:2137)

... 21 more

Caused by: java.net.ConnectException: Connection timed out

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:345)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:589)

at java.net.Socket.connect(Socket.java:538)

at java.net.Socket.<init>(Socket.java:434)

at java.net.Socket.<init>(Socket.java:244)

at com.mysql.jdbc.StandardSocketFactory.connect(StandardSocketFactory.java:253)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:290)

... 22 more

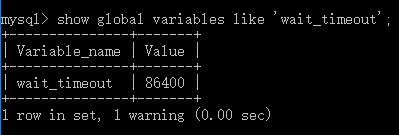

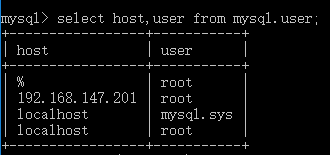

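The ZooKeeper and Hadoop services are both running, and the firewall is turned off. Searching Baidu, I found a suggestion to edit my.ini and add the line wait_timeout=86400 under [mysqld], but I still get the same error after making that change. The MySQL privileges have been granted as well. The JDBC driver I'm using is mysql-connector-5.1.8.jar.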

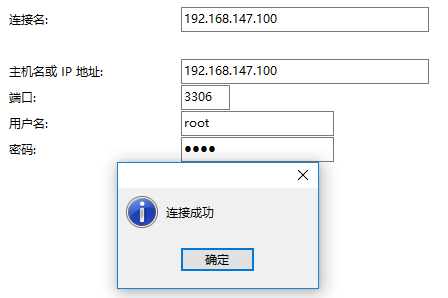

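The IP address I'm connecting to, 192.168.147.100, is the address of the VMnet1 adapter on Windows, and I can ping it. Sqoop still cannot connect to the database, even though connecting with Navicat works.

Does anyone know where the problem is? Any help would be much appreciated!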
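Since ping only exercises ICMP and the innermost exception in the trace is a socket connect timeout, one check that might help narrow this down is whether TCP port 3306 is reachable from the Linux machine at all, independent of Sqoop and the JDBC driver. Below is a minimal sketch, assuming Python 3 is available on the CentOS box; `can_connect` is a hypothetical helper name used only for illustration, and the address and port are the ones from the JDBC URL above.

```python
import socket


def can_connect(host, port, timeout=5.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

Calling `can_connect("192.168.147.100", 3306)` from the Linux machine would then show whether the port can be reached. A `False` result would point to the network path or a port-level block between the two machines rather than to Sqoop itself; a `True` result would suggest looking at the driver or the MySQL account instead.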