D:\hailong\scrapy\movie>Scrapy crawl meiju

2021-05-13 22:29:09 [scrapy.utils.log] INFO: Scrapy 2.5.0 started (bot: movie)

2021-05-13 22:29:09 [scrapy.utils.log] INFO: Versions: lxml 4.6.3.0, libxml2 2.9.5, cssselect 1.1.0, parsel 1.6.0, w3lib 1.22.0, Twisted 21.2.0, Python 3.6.3 (v3.6.3:2c5fed8, Oct 3 2017, 18:11:49) [MSC v.1900 64 bit (AMD64)], pyOpenSSL 20.0.1 (OpenSSL 1.1.1k 25 Mar 2021), cryptography 3.4.7, Platform Windows-10-10.0.18362-SP0

2021-05-13 22:29:09 [scrapy.utils.log] DEBUG: Using reactor: twisted.internet.selectreactor.SelectReactor

2021-05-13 22:29:09 [scrapy.crawler] INFO: Overridden settings:

{'BOT_NAME': 'movie',

'DOWNLOAD_DELAY': 1,

'NEWSPIDER_MODULE': 'movie.spiders',

'SPIDER_MODULES': ['movie.spiders']}

2021-05-13 22:29:09 [scrapy.extensions.telnet] INFO: Telnet Password: d70954f5035d6949

2021-05-13 22:29:09 [scrapy.middleware] INFO: Enabled extensions:

['scrapy.extensions.corestats.CoreStats',

'scrapy.extensions.telnet.TelnetConsole',

'scrapy.extensions.logstats.LogStats']

2021-05-13 22:29:09 [scrapy.middleware] INFO: Enabled downloader middlewares:

['scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware',

'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware',

'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware',

'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware',

'scrapy.downloadermiddlewares.retry.RetryMiddleware',

'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware',

'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware',

'scrapy.downloadermiddlewares.redirect.RedirectMiddleware',

'scrapy.downloadermiddlewares.cookies.CookiesMiddleware',

'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware',

'scrapy.downloadermiddlewares.stats.DownloaderStats']

2021-05-13 22:29:09 [scrapy.middleware] INFO: Enabled spider middlewares:

['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware',

'scrapy.spidermiddlewares.offsite.OffsiteMiddleware',

'scrapy.spidermiddlewares.referer.RefererMiddleware',

'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware',

'scrapy.spidermiddlewares.depth.DepthMiddleware']

2021-05-13 22:29:09 [scrapy.middleware] INFO: Enabled item pipelines:

['movie.pipelines.MoviePipeline']

2021-05-13 22:29:09 [scrapy.core.engine] INFO: Spider opened

2021-05-13 22:29:09 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

2021-05-13 22:29:09 [scrapy.extensions.telnet] INFO: Telnet console listening on 127.0.0.1:6023

2021-05-13 22:29:09 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://www.meijutt.tv/new100.html> (referer: None)

888888888

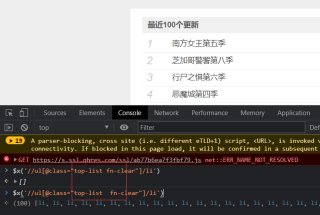

[]

2021-05-13 22:29:10 [scrapy.core.engine] INFO: Closing spider (finished)

2021-05-13 22:29:10 [scrapy.statscollectors] INFO: Dumping Scrapy stats:

{'downloader/request_bytes': 225,

'downloader/request_count': 1,

'downloader/request_method_count/GET': 1,

'downloader/response_bytes': 7510,

'downloader/response_count': 1,

'downloader/response_status_count/200': 1,

'elapsed_time_seconds': 0.237611,

'finish_reason': 'finished',

'finish_time': datetime.datetime(2021, 5, 13, 14, 29, 10, 87193),

'httpcompression/response_bytes': 48328,

'httpcompression/response_count': 1,

'log_count/DEBUG': 1,

'log_count/INFO': 10,

'response_received_count': 1,

'scheduler/dequeued': 1,

'scheduler/dequeued/memory': 1,

'scheduler/enqueued': 1,

'scheduler/enqueued/memory': 1,

'start_time': datetime.datetime(2021, 5, 13, 14, 29, 9, 849582)}

2021-05-13 22:29:10 [scrapy.core.engine] INFO: Spider closed (finished)

以下是代码部分:

meiju.py

import scrapy

from movie.items import MovieItem

class MeijuSpider(scrapy.Spider):#继承这个类

name = 'meiju'#名字

allowed_domains = ['meijutt.tv']#域名

start_urls = ['https://www.meijutt.tv/new100.html']#要补充完整

def parse(self, response):

print(888888888)

movies = response.xpath('//ul[@class="top-list fn-clear"]/li')#意思是选中所有的属性class值为"top-list fn-clear"的ul下的li标签内容

print(movies)

for each_movie in movies:

item = MovieItem()

item['name'] = each_movie.xpath('./h5/a/@title').extract()[0]

# .表示选取当前节点,也就是对每一项li,其下的h5下的a标签中title的属性值

yield item #一种特殊的循环

settings.py

ITEM_PIPELINES = {'movie.pipelines.MoviePipeline':100}

ROBOTSTXT_OBEY = False

DOWNLOAD_DELAY = 1

items.py

import scrapy

class MovieItem(scrapy.Item):

name = scrapy.Field()

pipelines.py

import json

from itemadapter import ItemAdapter

class MoviePipeline(object):

def process_item(self, item, spider):

return item