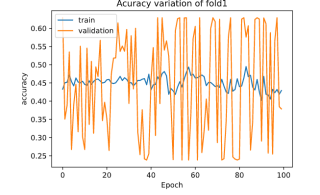

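As the title says, my CNN code and training results are below. I tried both the Adam and RMSprop optimizers, and Adam seems to work slightly better. Even so, the training results are not very satisfying. Could someone point out how I can improve this?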

import tensorflow as tf
from keras import backend as K
from keras import models, Sequential
from keras.models import Model
# keras.layers.core and keras.layers.convolutional no longer exist in recent Keras releases;
# the same layer classes are importable from keras.layers.
from keras.layers import (Dense, Dropout, Convolution1D, MaxPooling1D, Embedding,
                          BatchNormalization, LSTM, Bidirectional, Masking, Input,
                          Multiply, Activation, Lambda, Add, Reshape)
from sklearn import metrics

def cnn_bilstm_am_model(filters=32, kernel_size=3, pooling_size=2, lstm_units=32, embedding_vec=encoding_vectors, attention_size=50):
    from keras import optimizers

    model = Sequential()
    # Frozen pretrained embedding; input sequences are 2000 tokens long.
    model.add(Embedding(len(embedding_vec), len(embedding_vec[0]), weights=[embedding_vec], input_length=2000, trainable=False))
    # Two Conv1D + BatchNorm + MaxPooling blocks.
    model.add(Convolution1D(filters, kernel_size, padding='same', activation='relu', use_bias=False))
    model.add(BatchNormalization())
    model.add(MaxPooling1D(pool_size=pooling_size, strides=1))
    model.add(Convolution1D(filters * 2, kernel_size, padding='same', activation='relu', use_bias=False))
    model.add(BatchNormalization())
    model.add(MaxPooling1D(pool_size=pooling_size, strides=1))
    #model.add(Bidirectional(LSTM(lstm_units, dropout=0.1, return_sequences=True)))
    model.add(tf.keras.layers.Flatten())
    model.add(Dense(256, activation='sigmoid'))
    model.add(Dropout(0.5))
    # The original Dense(2) had no activation, so the loss received raw logits.
    # softmax assumes y is one-hot encoded with two columns.
    model.add(Dense(2, activation='softmax'))
    # `lr` is deprecated in favor of `learning_rate`.
    optim = optimizers.RMSprop(learning_rate=0.001)
    model.compile(loss='binary_crossentropy', optimizer=optim, metrics=['accuracy'])
    return model

model = cnn_bilstm_am_model()

model.summary()
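As a rough cross-check on what `model.summary()` reports, the sequence length after each pooling layer can be worked out by hand. The sketch below is plain Python rather than Keras. The helper names `pooled_length`, `dense_params` and `first_dense_size` are made up for illustration, and it assumes the pooling layers use the default `padding='valid'`, as in the model above. It compares `strides=1` (as written above) with a stride equal to the pool size, which is what Keras uses when `strides` is left unset.

```python
def pooled_length(length, pool_size, strides):
    """Output length of a 1D max pooling layer with 'valid' padding."""
    return (length - pool_size) // strides + 1


def dense_params(seq_len, channels, units):
    """Weights plus biases of a Dense layer applied right after Flatten."""
    return seq_len * channels * units + units


def first_dense_size(strides, seq_len=2000, pool_size=2, channels=64, units=256):
    # The 'same'-padded convolutions keep the length unchanged,
    # so only the two pooling layers shorten the sequence.
    for _ in range(2):
        seq_len = pooled_length(seq_len, pool_size, strides)
    return seq_len, dense_params(seq_len, channels, units)


len_s1, params_s1 = first_dense_size(strides=1)
len_s2, params_s2 = first_dense_size(strides=2)
print("strides=1:", len_s1, "steps,", params_s1, "parameters in Dense(256)")
print("strides=2:", len_s2, "steps,", params_s2, "parameters in Dense(256)")
```

Under these assumptions, `strides=1` shortens the sequence only from 2000 to 1998 steps, so the first `Dense` layer holds about 32.7 million parameters, compared with about 8.2 million when the stride is 2. Whether that size is a problem depends on how much training data is available.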

from keras.callbacks import ModelCheckpoint,EarlyStopping,ReduceLROnPlateau

for fold_num, (train_indices, test_indices) in enumerate(folds):
    # Only the first fold is trained and evaluated here.
    if fold_num == 0:
        print('Evaluating fold{}'.format(fold_num+1))
        x_train = X[train_indices]
        y_train = y[train_indices]
        x_test = X[test_indices]
        y_test = y[test_indices]
        filepath = './best_model/rmsprop_2cnn_2dense_weights_of_fold{}.best.hdf5'.format(fold_num)
        # Keep only the weights with the best validation accuracy (checked every epoch by default).
        checkpoint = ModelCheckpoint(filepath, monitor='val_accuracy', verbose=1, save_best_only=True, mode='max')
        # Multiply the learning rate by 0.8 after 10 epochs without improvement in val_accuracy.
        reducelr = ReduceLROnPlateau(monitor='val_accuracy', factor=0.8, patience=10, verbose=1, mode="max", min_lr=0.0001)
        callbacks_list = [checkpoint, reducelr]

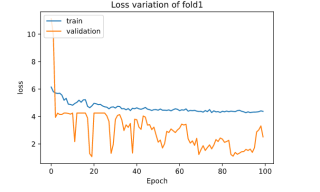

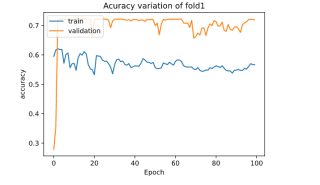

train_history = model.fit(x_train,y_train,validation_split=0.1,epochs=100,batch_size=100,verbose=2,callbacks = callbacks_list)激活器为RMSprop

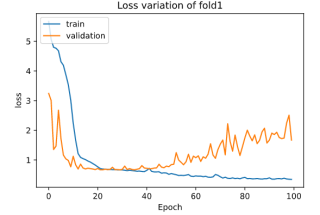

Optimizer: Adam
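The `ReduceLROnPlateau` settings in the code above (`factor=0.8`, `patience=10`, `min_lr=0.0001`, starting from a learning rate of 0.001) can be sanity-checked with a little arithmetic. This is a minimal sketch in plain Python with hypothetical helper names, not part of Keras. It assumes that each reduction needs at least `patience` epochs without improvement before it fires.

```python
import math


def reductions_to_reach(initial_lr, factor, min_lr):
    """Smallest number of multiplications by `factor` that brings the rate down to min_lr or below."""
    return math.ceil(math.log(min_lr / initial_lr) / math.log(factor))


def lr_after(initial_lr, factor, min_lr, reductions):
    """Learning rate after a given number of reductions, floored at min_lr."""
    return max(initial_lr * factor ** reductions, min_lr)


initial_lr, factor, min_lr, patience = 0.001, 0.8, 0.0001, 10

needed = reductions_to_reach(initial_lr, factor, min_lr)
min_epochs = needed * patience  # lower bound: every reduction waits `patience` epochs
print("reductions needed to hit min_lr:", needed)
print("stagnant epochs needed at minimum:", min_epochs)
print("lr after 5 reductions:", lr_after(initial_lr, factor, min_lr, 5))
```

With these numbers, 11 reductions are needed to reach `min_lr`, which takes at least 110 epochs without improvement. Since the run lasts 100 epochs, the learning rate decays only gently over the whole run and the floor is never reached. A smaller `factor` or `patience` might be worth trying, though that is a guess to be verified on the data.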