    def __init__(self):
        self.start_urls = pd.read_excel('xinfangcitymatch.xlsx')['https'][30:45]
        self.quchong = {}  # {'city name': [listing URLs already scraped]}, used for deduplication
        self.cookies = RequestsCookieJar()
        self.headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36'
                          ' (KHTML, like Gecko) Chrome/75.0.3770.142 Safari/537.36',
            'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
            'accept-encoding': 'gzip, deflate, br',
            'accept-language': 'zh-CN,zh;q=0.9,zh-TW;q=0.8,en-US;q=0.7,en;q=0.6',
        }
        self.cookies1 = {
            'global_cookie': '2a8hamrvwdz0punlkee3ifojo26jyqn1d1y',
            'unique_cookie': 'U_2a8hamrvwdz0punlkee3ifojo26jyqn1d1y*1',
            'city': 'www',
        }
        self.excel_head = ['date', 'city', 'perprice', 'xiaqu', 'fangwu', 'zhuangxiu', 'huanxian', 'zhandi', 'jianmian', 'rongji', 'lvhua', 'chewei', 'wuye']
        self.today_str = datetime.strftime(datetime.now(), '%Y-%m-%d')

    def get_html(self, url):
        self.headers['Referer'] = url
        try:
            response = requests.get(url, headers=self.headers, timeout=5, allow_redirects=False, cookies=self.cookies1)
        except Exception:
            print(url)
            print('Network error, retrying')
            time.sleep(1)
            return self.get_html(url)
        if response.status_code == 200:
            # The body is decoded as GB2312; bytes that are invalid in GB2312 are silently dropped
            return response.content.decode('gb2312', errors='ignore')
        elif response.status_code == 403:
            print(response.status_code)
            time.sleep(1)
            return self.get_html(url)
        elif response.status_code == 302:
            print(url)
            # print('cookies expired')
            print('Access restricted')
            time.sleep(1)
            return self.get_html(url)
        else:
            time.sleep(1)
            print(response.status_code)
            return self.get_html(url)

    def get_html_two(self, url):
        # Same as get_html, but decodes the body as UTF-8 instead of GB2312
        self.headers['Referer'] = url
        try:
            response = requests.get(url, headers=self.headers, timeout=5, allow_redirects=False, cookies=self.cookies1)
        except Exception:
            print(url)
            print('Network error, retrying')
            time.sleep(1)
            # Retry with this method so the retry also decodes as UTF-8
            return self.get_html_two(url)
        if response.status_code == 200:
            # gb2312
            return response.content.decode('utf8', errors='ignore')
        elif response.status_code == 403:
            print(response.status_code)
            time.sleep(1)
            return self.get_html_two(url)
        elif response.status_code == 302:
            print(url)
            # print('cookies expired')
            print('Access restricted')
            time.sleep(1)
            return self.get_html_two(url)
        else:
            time.sleep(1)
            print(response.status_code)
            return self.get_html_two(url)

def parse(self, current_city_url, html, city_name):

file_name = f'C:/Users/DELL/Desktop/新房/{city_name}{self.today_str}房天下新房.xlsx'

if not os.path.exists(file_name):

wb = openpyxl.Workbook()

ws = wb.worksheets[0]

self.save_to_excel(ws, 0, self.excel_head)

wb.save(file_name)

row_count = 1

html_eles = etree.HTML(html)

# 获取尾页

total_href = html_eles.xpath('//a[@class="last"]/@href')

if not total_href:

print(city_name + '解析分页方式有误')

return

# 处理总页码

total_pn = int(total_href[0].split('/')[-2].replace('b9', ''))

for pn in range(2, total_pn+1):

wb = openpyxl.load_workbook(file_name)

ws = wb.worksheets[0]

# house/s/b91/

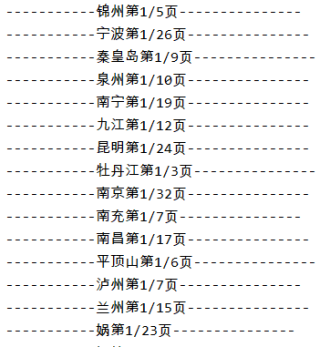

print(f'-----------{city_name}第{pn-1}/{total_pn}页---------------')

next_url = current_city_url + 'house/s/b9' + str(pn) + '/'

# 获取网页所有楼盘信息

house_eles = html_eles.xpath('//div[@id="newhouse_loupai_list"]/ul/li')

# 遍历每个楼盘获取楼盘信息

for house_ele in house_eles:

# 获取楼盘链接

house_url = house_ele.xpath('.//div[@class="nlcd_name"]/a/@href')

if house_url: # 图片数量不存在说明,是广告,不做处理

house_url = 'https:' + house_url[0] # 拼全url

try:

# 提取楼板id

if house_url in self.quchong[city_name]:

continue

# 楼盘均价(perprice)

perprice = ''.join([field.replace('/O', '/㎡') for field in house_ele.xpath('.//div[@class="nhouse_price"]//text()') if field.isdigit() or '元' in field ])

perprice = perprice if perprice else '价格待定'

# 辖区

xiaqu_item = house_ele.xpath('.//div[@class="address"]/a/text()')

xiaqu = re.findall('\[(.*?)\]', xiaqu_item[0].strip())[0] if xiaqu_item and re.findall('\[(.*?)\]', xiaqu_item[0].strip()) else '无'

if xiaqu == '无':

xiaqu_item = house_ele.xpath('.//span[@class="sngrey"]/text()')

xiaqu = re.findall('\[(.*?)\]', xiaqu_item[0].strip())[0] if xiaqu_item and re.findall(

'\[(.*?)\]', xiaqu_item[0].strip()) else '无'

# 房屋面积(fangwu)

# print(house_url)

fangwu = re.sub('\t|\n|-', '', house_ele.xpath('.//div[@class="house_type clearfix"]//text()')[-1])

fangwu = fangwu if fangwu else '无'

# 获取二级页面

html = self.get_html(house_url)

if city_name not in html:

html = self.get_html_two(house_url)

# 获取更多详细信息链接

more_info_url = etree.HTML(html).xpath('//a[text()="更多详细信息>>"]/@href')[0]

# 获取三级页面

if '//' not in more_info_url:

continue

html = self.get_html('https:' + more_info_url)

if city_name not in html:

html = self.get_html_two('https:' + more_info_url)

house_ele = etree.HTML(html)

# 装修状况

try:

zhuangxiu = re.sub('\n|\t| |', '', house_ele.xpath('//div[text()="装修状况:"]/following-sibling::div[1]/text()')[0])

except:

zhuangxiu = ''

# 环线位置(huanxian)

huanxian = re.sub('\n|\t| |', '', house_ele.xpath('//div[text()="环线位置:"]/following-sibling::div[1]/text()')[0])\

if house_ele.xpath('//div[text()="环线位置:"]/following-sibling::div[1]/text()') else '无'

# 占地面积(zhandi)、建筑面积(jianmian)、容积率(rongji)、绿化率(lvhua)、停车位(chewei)、物业费(wuye)

zhandi = house_ele.xpath('//div[text()="占地面积:"]/following-sibling::div[1]/text()')[0]

jianmian = house_ele.xpath('//div[text()="建筑面积:"]/following-sibling::div[1]/text()')[0]

guihua_info = house_ele.xpath('//h3[text()="小区规划"]/following-sibling::ul[1]/li')

rongji = guihua_info[2].xpath('./div[2]/text()')[0].strip('\xa0 ')

lvhua = guihua_info[3].xpath('./div[2]/text()')[0]

chewei = guihua_info[4].xpath('./div[2]/text()')[0]

wuye = guihua_info[8].xpath('./div[2]/text()')[0].replace('/O', '/㎡')

except Exception as e:

continue

else:

if row_count > 1000:

wb.save(file_name)

return

if house_url[0] not in self.quchong[city_name]:

print(perprice, xiaqu, fangwu, zhuangxiu, huanxian, zhandi, jianmian, rongji, lvhua, chewei, wuye)

print(f'正在爬取:{city_name}-->第{row_count}条新房信息', )

# 保存数据

self.save_to_excel(ws, row_count, [self.today_str,city_name,perprice, xiaqu, fangwu, zhuangxiu, huanxian, zhandi, jianmian, rongji, lvhua, chewei, wuye])

row_count += 1

self.quchong[city_name].append(house_url) # 将爬取过的楼盘id放进去,用于去重

else:

print('已存在')

wb.save(file_name)

html = self.get_html(next_url)

if not html:

return

html_eles = etree.HTML(html)

    def run_spider(self, city_url_list):
        for city_url in city_url_list:
            current_city_url = city_url
            city_url = city_url + 'house/s/' if 'house/s/' not in city_url else city_url
            try:
                html = self.get_html(city_url)
                city_name = re.findall(re.compile('class="s4Box"><a href="#">(.*?)</a>'), html)[0]  # get the city name
                self.quchong[city_name] = []  # build {'city name': [listing 1, 2, 3, 4, ...]} for deduplication
                self.parse(current_city_url, html, city_name)
            except Exception:
                print(city_url)

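    # Split a sequence into n smaller chunks (used to distribute tasks across threads)
    def div_list(self, ls, n):
        result = []
        cut = int(len(ls)/n)
        if cut == 0:
            # Fewer items than chunks: one item per chunk, padded with empty lists
            ls = [[x] for x in ls]
            none_array = [[] for i in range(0, n-len(ls))]
            return ls+none_array
        for i in range(0, n-1):
            result.append(ls[cut*i:cut*(1+i)])
        # The last chunk takes whatever remains
        result.append(ls[cut*(n-1):len(ls)])
        return result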

    def save_to_excel(self, ws, row_count, data):
        for index, value in enumerate(data):
            ws.cell(row=row_count+1, column=index + 1, value=value)  # openpyxl rows and columns are 1-based

# Single-threaded
# for city_url in spider.start_urls[:1]:
#     spider.run_spider([city_url])
# spider.run_spider(['https://qhd.newhouse.fang.com/'])

The city name that should be 洛阳 (Luoyang) comes out as 娲 instead. I don't know how to fix this.
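A likely cause, assuming the 洛阳 page is served as UTF-8: `get_html` decodes every response as `gb2312` with `errors='ignore'`. The first two UTF-8 bytes of 洛 (`E6 B4`) happen to be the GB2312 code for 娲, and the remaining bytes are not valid GB2312, so they are silently dropped. The sketch below reproduces that and shows one possible workaround. `decode_html` is a hypothetical helper, not part of the code above, and the "strict UTF-8 first, GB18030 second" order is an assumption about which encodings the site uses.

```python
def decode_html(raw: bytes) -> str:
    """Decode page bytes: strict UTF-8 first, GB18030 as a fallback.

    Hypothetical helper for illustration. GB18030 is a superset of
    GB2312 and GBK, so it also covers pages declared as gb2312.
    """
    try:
        # Strict mode raises instead of silently dropping bytes
        return raw.decode('utf-8')
    except UnicodeDecodeError:
        return raw.decode('gb18030', errors='replace')


# Simulated response bodies for the same city name in both encodings
utf8_page = '洛阳'.encode('utf-8')
gb_page = '洛阳'.encode('gb2312')

# What the original get_html does with a UTF-8 page
naive = utf8_page.decode('gb2312', errors='ignore')
print(repr(naive))

# The helper recovers the name in both cases
print(decode_html(utf8_page))
print(decode_html(gb_page))
```

If this is indeed the cause, returning `decode_html(response.content)` from `get_html` might make the separate `get_html_two` and the `city_name not in html` check unnecessary. Reading the charset from the page's `<meta>` tag or the `Content-Type` header would be a stricter alternative.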