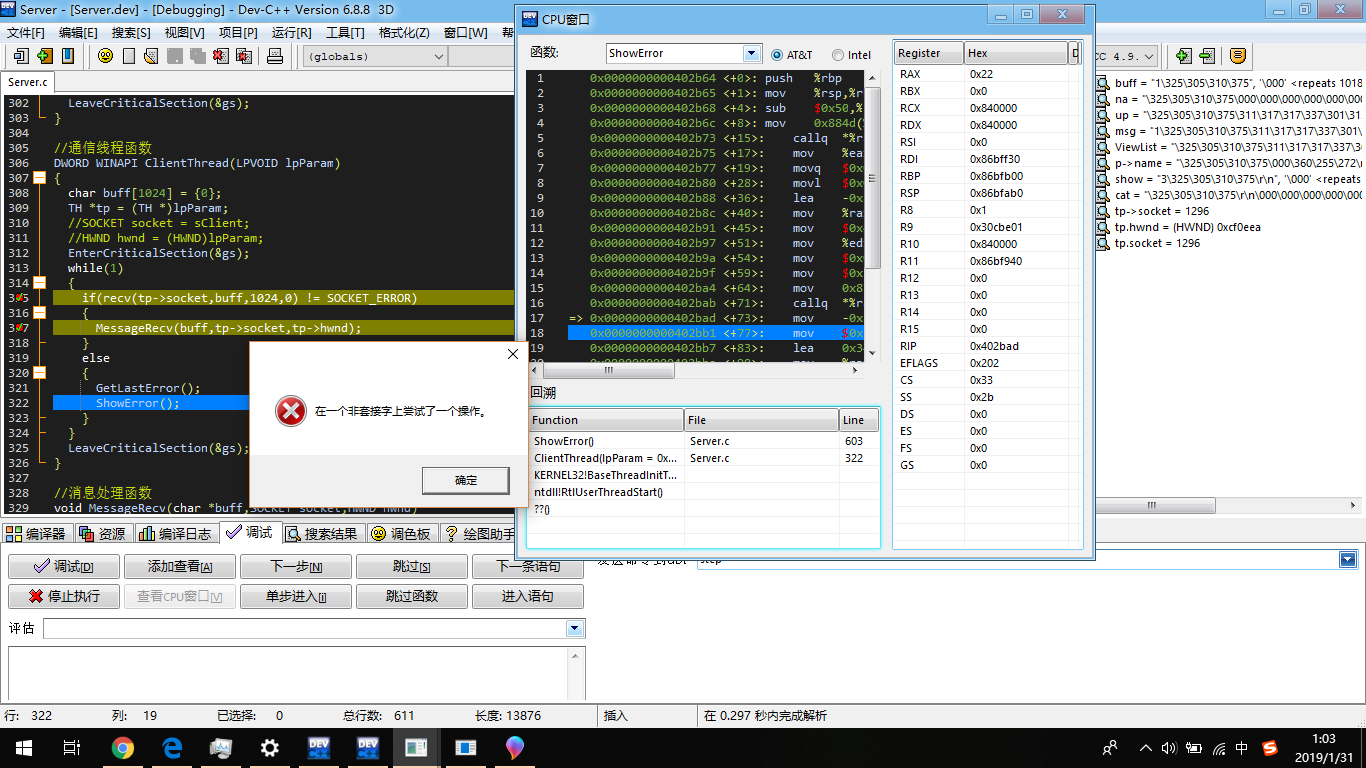

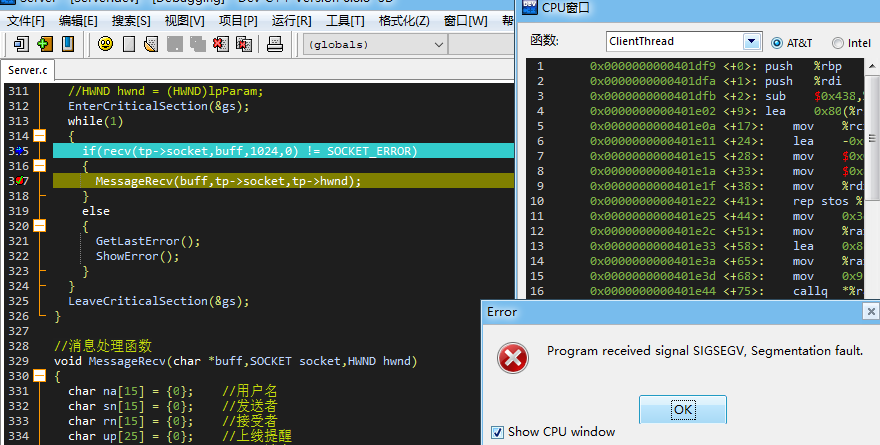

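As the screenshots show, the variable panel on the right indicates that the socket is valid in the first thread and that recv receives the message successfully. However, once the socket is passed to the next thread, which runs the message receive loop, the call fails with the error "An operation was attempted on something that is not a socket." If I continue execution, the error in the second screenshot appears, which is a segmentation fault.

I don't know how to fix this and would appreciate any help. Thanks a lot.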

Here is the code for the two thread functions:

// Server thread function
DWORD WINAPI ServerThread(LPVOID lpParam)
{
    int AddrSize;
    char buff[1024] = {0}; // holds the received data
    TH tp; // parameters passed to the next thread
    struct sockaddr_in their_addr;

    EnterCriticalSection(&gs);
    AddrSize = sizeof(struct sockaddr_in);
    tp.hwnd = (HWND)lpParam;
    tp.socket = accept(sockfd, (struct sockaddr*)&their_addr, &AddrSize);
    if (tp.socket != INVALID_SOCKET)
    {
        recv(tp.socket, buff, 20, 0);
        MessageRecv(buff, tp.socket, tp.hwnd);
        cThread = (HANDLE)CreateThread(NULL, 0, ClientThread, &tp, 0, NULL);
        if (cThread == NULL)
        {
            GetLastError();
            ShowError();
        }
    }
    LeaveCriticalSection(&gs);
}
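
Judging only from the code posted here, one likely cause is the lifetime of `tp`: it is a local variable of `ServerThread`, yet its address is handed to `CreateThread`. Once `ServerThread` returns, that stack memory is no longer valid, so `ClientThread` may read a garbage socket value, which would be consistent with the "not a socket" error. A common remedy is to heap-allocate one argument block per connection and let the worker free it. The sketch below is deliberately platform-neutral: `ConnArgs`, `conn_args_new`, and `conn_worker` are hypothetical names, and `uintptr_t` and `void *` stand in for `SOCKET` and `HWND`.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical stand-in for the TH struct in the post. */
typedef struct {
    uintptr_t socket; /* stand-in for SOCKET */
    void *hwnd;       /* stand-in for HWND */
} ConnArgs;

/* Allocate the argument block on the heap so that it outlives the
   caller's stack frame. Returns NULL if the allocation fails. */
ConnArgs *conn_args_new(uintptr_t socket, void *hwnd)
{
    ConnArgs *args = malloc(sizeof *args);
    if (args != NULL) {
        args->socket = socket;
        args->hwnd = hwnd;
    }
    return args;
}

/* Worker entry point: takes ownership of the block, copies out what it
   needs, then frees it. Returns the socket value it received so the
   hand-off can be checked. */
uintptr_t conn_worker(void *param)
{
    assert(param != NULL);
    ConnArgs *args = param;
    uintptr_t sock = args->socket;
    free(args); /* the worker, not the creator, releases the block */
    return sock;
}
```

With the Win32 API, the pointer returned by the allocator would be passed as the `lpParameter` argument of `CreateThread`. The creating thread should then leave the block alone, freeing it only if `CreateThread` itself fails.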

// Communication thread function
DWORD WINAPI ClientThread(LPVOID lpParam)
{
    char buff[1024] = {0};
    TH *tp = (TH *)lpParam;

    EnterCriticalSection(&gs);
    while(1)
    {
        if (recv(tp->socket, buff, 1024, 0) != SOCKET_ERROR)
        {
            MessageRecv(buff, tp->socket, tp->hwnd);
        }
        else
        {
            GetLastError();
            ShowError();
        }
    }
    LeaveCriticalSection(&gs);
}
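
A second thing that may be worth checking in `ClientThread` is the loop itself. `recv` returns 0 when the peer closes the connection and `SOCKET_ERROR` (-1) on failure, but the loop above never exits in either case, and it keeps holding `gs` the whole time. The sketch below shows one way to stop on close or error. It is an illustration rather than a drop-in fix: `classify_recv`, `receive_loop`, `RecvFn`, and the `scripted_recv` test double are hypothetical names, and the receive call is abstracted behind a function pointer so the logic can be exercised without a real socket.

```c
#include <assert.h>
#include <stddef.h>

typedef enum { RECV_DATA, RECV_CLOSED, RECV_FAILED } RecvOutcome;

/* Interpret the return value of recv. */
RecvOutcome classify_recv(int n)
{
    if (n > 0) return RECV_DATA;
    if (n == 0) return RECV_CLOSED; /* peer closed the connection */
    return RECV_FAILED;             /* SOCKET_ERROR is -1 in Winsock */
}

/* A real implementation would wrap recv(sock, buf, len, 0). */
typedef int (*RecvFn)(void *ctx, char *buf, int len);

/* Receive until the peer closes or an error occurs.
   Returns the number of messages handled; *why_stopped says why it ended. */
int receive_loop(RecvFn do_recv, void *ctx, RecvOutcome *why_stopped)
{
    char buf[1024];
    int handled = 0;

    assert(do_recv != NULL);
    for (;;) {
        /* Leave room for a terminator in case buf is treated as text. */
        int n = do_recv(ctx, buf, (int)sizeof buf - 1);
        RecvOutcome outcome = classify_recv(n);
        if (outcome != RECV_DATA) {
            if (why_stopped != NULL) *why_stopped = outcome;
            return handled;
        }
        buf[n] = '\0';
        handled++; /* a real loop would dispatch the message here */
    }
}

/* Test double: replays scripted return values instead of reading a socket. */
typedef struct { const int *results; size_t count; size_t next; } Script;

int scripted_recv(void *ctx, char *buf, int len)
{
    Script *s = ctx;
    (void)buf;
    if (s->next >= s->count) return 0;
    int n = s->results[s->next++];
    return n > len ? len : n;
}
```

In the posted code, the equivalent change would be to break out of `while(1)` when `recv` returns 0 or `SOCKET_ERROR`, and to avoid holding the critical section across the blocking `recv` call.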