kafka 使用kerberos协议的时候,启动kakfa的时候报zookeeper校验不通过。

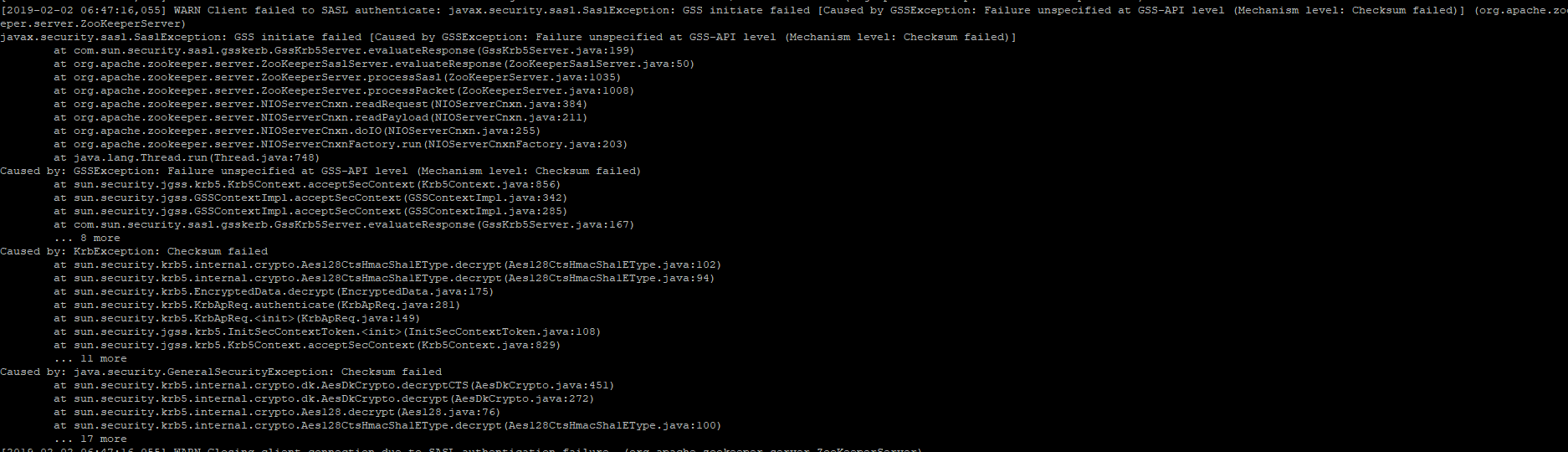

错误信息如下:

Kerberos configuration in /etc/krb5.conf:

[logging]

default = FILE:/var/log/krb5libs.log

kdc = FILE:/var/log/krb5kdc.log

admin_server = FILE:/var/log/kadmind.log

[libdefaults]

default_realm = EXAMPLE.COM

default_tkt_enctypes = arcfour-hmac-md5

dns_lookup_realm = false

dns_lookup_kdc = false

ticket_lifetime = 24h

renew_lifetime = 7d

forwardable = true

[realms]

EXAMPLE.COM = {

kdc = 192.168.1.41

admin_server = 192.168.1.41

}

[domain_realm]

kafka = EXAMPLE.COM

zookeeper = EXAMPLE.COM

weiwei = EXAMPLE.COM

192.168.1.41 = EXAMPLE.COM

127.0.0.1 = EXAMPLE.COM

Kerberos configuration in /var/kerberos/krb5kdc/kdc.conf:

[kdcdefaults]

kdc_ports = 88

kdc_tcp_ports = 88

[realms]

EXAMPLE.COM = {

#master_key_type = aes256-cts

acl_file = /var/kerberos/krb5kdc/kadm5.acl

dict_file = /usr/share/dict/words

admin_keytab = /var/kerberos/krb5kdc/kadm5.keytab

max_renewable_life = 7d

supported_enctypes = aes128-cts:normal des3-hmac-sha1:normal arcfour-hmac:normal des-hmac-sha1:normal des-cbc-md5:normal des-cbc-crc:normal

}

Kafka configuration in kafka_server_jaas.conf:

KafkaServer {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

storeKey=true

keyTab="/var/kerberos/krb5kdc/kafka.keytab"

principal="kafka/weiwei@EXAMPLE.COM";

};

Client {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

storeKey=true

keyTab="/var/kerberos/krb5kdc/kafka.keytab"

principal="zookeeper/192.168.1.41@EXAMPLE.COM";

};

Configuration in zookeeper_jaas.conf:

Server{

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

storeKey=true

useTicketCache=false

keyTab="/var/kerberos/krb5kdc/kafka.keytab"

principal="zookeeper/192.168.1.41@EXAMPLE.COM";

};
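Since the two JAAS files above share one keytab but name different principals, it may help to list which principal each section logs in as before comparing them against the keytab contents. Below is a minimal Python sketch for that, assuming a simple regex is enough for files shaped like the ones in this post. The names `parse_jaas`, `SECTION_RE` and `OPTION_RE` are made up for illustration, it is not a full JAAS grammar parser (comments and multiple login modules per section are not handled), and it never contacts the KDC.

```python
import re

# One JAAS section: a name, a body in braces, and a closing "};".
SECTION_RE = re.compile(r'(\w+)\s*\{(.*?)\}\s*;', re.DOTALL)
# One option inside a section body: key=value or key="value".
OPTION_RE = re.compile(r'(\w+)\s*=\s*"?([^";\s]+)"?')


def parse_jaas(text):
    """Return {section_name: {option: value}} for a JAAS config string."""
    sections = {}
    for name, body in SECTION_RE.findall(text):
        # The login module line has no "=", so only options are collected.
        sections[name] = dict(OPTION_RE.findall(body))
    return sections


if __name__ == "__main__":
    # Sample text copied from the Client section of kafka_server_jaas.conf.
    sample = '''
    Client {
    com.sun.security.auth.module.Krb5LoginModule required
    useKeyTab=true
    storeKey=true
    keyTab="/var/kerberos/krb5kdc/kafka.keytab"
    principal="zookeeper/192.168.1.41@EXAMPLE.COM";
    };
    '''
    for name, opts in parse_jaas(sample).items():
        print(name, opts.get("principal"), opts.get("keyTab"))
```

To use it on the real files, the text would be read from `kafka_server_jaas.conf` and `zookeeper_jaas.conf` and passed to `parse_jaas`; the printed principals can then be compared by eye with the entries in the keytab.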

Settings added to zookeeper.properties:

authProvider.1=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

requireClientAuthScheme=sasl

jaasLoginRenew=3600000

Settings added to server.properties:

advertised.host.name=192.168.1.41

advertised.listeners=SASL_PLAINTEXT://192.168.1.41:9092

listeners=SASL_PLAINTEXT://192.168.1.41:9092

#listeners=PLAINTEXT://127.0.0.1:9093

security.inter.broker.protocol=SASL_PLAINTEXT

sasl.mechanism.inter.broker.protocol=GSSAPI

sasl.enabled.mechanisms=GSSAPI

sasl.kerberos.service.name=kafka

Settings added to zookeeper-server-start.sh:

export KAFKA_OPTS='-Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.auth.login.config=/home/shubei/Downloads/kafka_2.12-1.0.0/config/zookeeper_jaas.conf'

Settings added to kafka-server-start.sh:

export KAFKA_OPTS='-Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.auth.login.config=/home/shubei/Downloads/kafka_2.12-1.0.0/config/kafka_server_jaas.conf'

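That is basically the whole configuration. The New Year is almost here, so I am asking for help online. Happy New Year in advance to everyone who can help.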