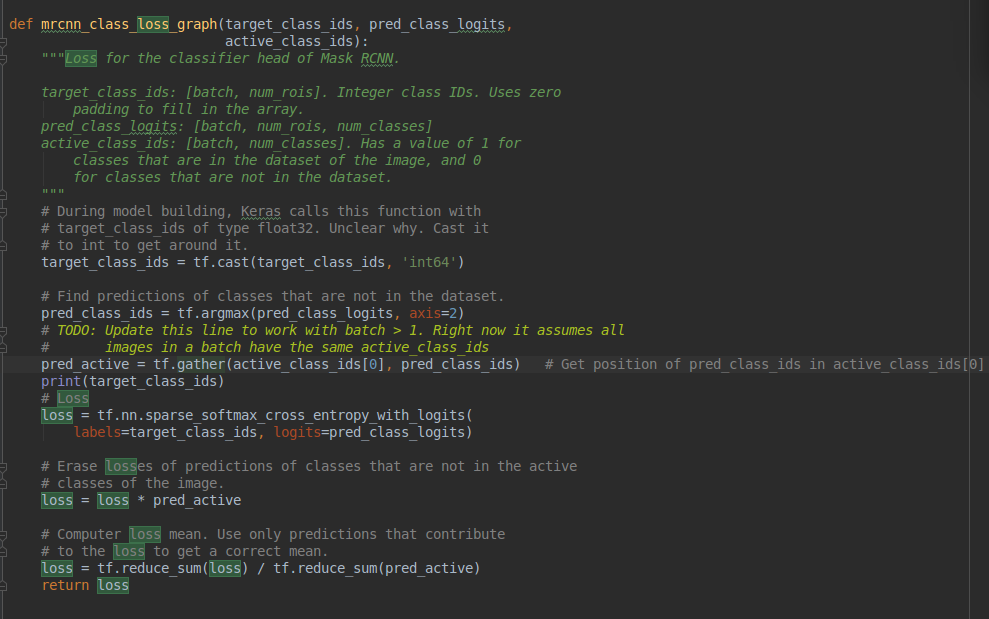

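Could someone help me figure out how many dimensions each input to tf.nn.sparse_softmax_cross_entropy_with_logits has? Judging by the comments, the predictions (logits) and the labels seem to have different dimensions. Why is that?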



1 answer

I'm not sure whether you've already solved this. If not:

- The article "Ugh, tf.nn.softmax_cross_entropy_with_logits tripped me up for ages" may have the answer you're looking for.

- You can also look up tf.nn.sparse_softmax_cross_entropy_with_logits in the TensorFlow API reference.

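- In addition, section "1.2 tf.nn.sparse_softmax_cross_entropy_with_logits" of the blog post "The relationship and differences between tf.nn.softmax_cross_entropy_with_logits and tf.contrib.legacy_seq2seq.sequence_loss_by_example" may solve your problem. You can read the excerpt below or go to the original post: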

Main difference: unlike tf.nn.softmax_cross_entropy_with_logits, the labels input is not one-hot encoded, so it has one fewer dimension.

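Function inputs:

- logits: [batch_size, num_classes], unscaled (pre-softmax) floating-point scores
- labels: [batch_size], one integer class index per example (int32 or int64), each in [0, num_classes)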
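To make the shape relationship concrete, here is a minimal pure-Python sketch that needs no TensorFlow. `check_sparse_ce_shapes` and `to_one_hot` are hypothetical helpers written for illustration, not TensorFlow APIs. The first checks the shapes listed above; the second expands sparse labels into the one-hot form that tf.nn.softmax_cross_entropy_with_logits expects, which is exactly where the "missing" dimension comes from (in TensorFlow itself, tf.one_hot performs this expansion).

```python
def check_sparse_ce_shapes(logits, labels):
    """Hypothetical helper: validate the inputs of the sparse loss.

    logits: nested list with shape [batch_size, num_classes]
    labels: flat list with shape [batch_size], one int class index per example
    Returns the shape of the resulting loss, [batch_size].
    """
    batch_size = len(logits)
    num_classes = len(logits[0])
    if any(len(row) != num_classes for row in logits):
        raise ValueError("every row of logits must have num_classes entries")
    if len(labels) != batch_size:
        raise ValueError("labels must have shape [batch_size]")
    for y in labels:
        if not isinstance(y, int) or not 0 <= y < num_classes:
            raise ValueError(f"label {y!r} must be an int in [0, {num_classes})")
    return [batch_size]


def to_one_hot(labels, num_classes):
    """Hypothetical helper: expand [batch_size] indices to [batch_size, num_classes]."""
    return [[1.0 if c == y else 0.0 for c in range(num_classes)] for y in labels]


logits = [[2, 0.5, 1], [0.1, 1, 3], [3.1, 4, 2]]  # shape [3, 3]
labels = [0, 1, 2]                                # shape [3]

print(check_sparse_ce_shapes(logits, labels))  # loss shape: [batch_size]
print(to_one_hot(labels, 3))                   # dense labels: [batch_size, num_classes]
```

The sparse labels carry the same information as the one-hot rows, just stored as an index instead of a vector, which is why the sparse version drops the num_classes dimension from labels.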

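Unlike the dense version, logits and labels do not have the same shape here; the returned loss has the same shape as labels, i.e. [batch_size]. Code example: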

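```python
import tensorflow as tf

labels = [0, 1, 2]  # only the class index is needed, starting from 0; shape [batch_size]
logits = [[2, 0.5, 1],
          [0.1, 1, 3],
          [3.1, 4, 2]]  # unscaled scores; shape [batch_size, num_classes]

# Softmax probabilities, for inspection only: the loss applies softmax internally,
# so pass the raw logits, not logits_scaled.
logits_scaled = tf.nn.softmax(logits)
result = tf.nn.sparse_softmax_cross_entropy_with_logits(labels=labels, logits=logits)

# TensorFlow 2.x (eager): one loss value per example, shape [batch_size]
print(result.numpy())
# TensorFlow 1.x (graph mode) instead:
# with tf.Session() as sess:
#     print(sess.run(result))
```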
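As a sanity check on what `result` contains, the sketch below recomputes the per-example loss in plain Python, assuming the standard definition loss_i = -log(softmax(logits_i)[labels_i]). The function names are hypothetical, chosen for illustration. It also computes the dense one-hot form to show that both give the same numbers.

```python
import math


def log_sum_exp(row):
    """Numerically stable log(sum(exp(z))) over one row of logits."""
    m = max(row)  # subtract the max so exp() cannot overflow
    return m + math.log(sum(math.exp(z - m) for z in row))


def sparse_softmax_cross_entropy(labels, logits):
    """Sparse form: labels [batch_size] of indices -> losses [batch_size]."""
    return [log_sum_exp(row) - row[y] for y, row in zip(labels, logits)]


def dense_softmax_cross_entropy(one_hot_labels, logits):
    """Dense form: labels [batch_size, num_classes] -> losses [batch_size]."""
    losses = []
    for p, row in zip(one_hot_labels, logits):
        lse = log_sum_exp(row)
        # -sum_j p_j * log(softmax(row)_j), where log softmax_j = z_j - lse
        losses.append(-sum(pj * (z - lse) for pj, z in zip(p, row)))
    return losses


labels = [0, 1, 2]
logits = [[2, 0.5, 1], [0.1, 1, 3], [3.1, 4, 2]]
one_hot = [[1.0 if c == y else 0.0 for c in range(3)] for y in labels]

sparse = sparse_softmax_cross_entropy(labels, logits)
dense = dense_softmax_cross_entropy(one_hot, logits)
print(sparse)
print(dense)
```

For the example data the three losses come out to roughly 0.464, 2.174 and 2.433, and the TensorFlow snippet should print the same values up to float32 rounding.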

If you have already solved the problem, please consider sharing your solution in a blog post and putting the link in the comments to help others. ^-^