It worked the first time I ran it yesterday, but now when I run it again nothing happens and I don't know why. Here is the code:

# 1. 如何提取单个页面的数据

# 2. 上线程池,多个页面同时抓取

import requests

from lxml import etree

import csv

from concurrent.futures import ThreadPoolExecutor

result = []

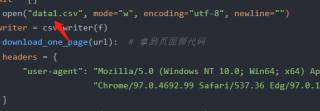

f = open("data1.csv", mode="w", encoding="utf-8", newline="")

csvwriter = csv.writer(f)

def download_one_page(url): # 拿到页面源代码

headers = {

"user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) "

"Chrome/97.0.4692.99 Safari/537.36 Edg/97.0.1072.69"

}

resp = requests.get(url, headers=headers)

html = etree.HTML(resp.text)

table = html.xpath("/html/body/div[3]/div[1]/div/div[1]/ol")[0]

trs = table.xpath("./li") # [1:] # 不要第0个数据

# trs = table.xptah("./li[position()>1]") # 不要第0个数据

for li in trs:

title = li.xpath("./div/div[2]/div[1]/a/span[1]/text()")

rating = li.xpath("./div/div[2]/div[2]/div/span[2]/text()")

oneresult = [title, rating]

result.append(oneresult)

#存放数据

csvwriter.writerow([title, rating])

f.close()

print(url, "提取完毕")

if __name__ == "__main__":

# 创建线程池

with ThreadPoolExecutor(50) as t:

for i in range(0, 250):

# 把下载任务交给线程池

t.submit(download_one_page, f"https://movie.douban.com/top250?start={i}&filter=")

print("全部下载完毕")
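For comparison, here is a minimal sketch of the same thread-pool-plus-CSV pattern that keeps the shared output open until every task has finished and serializes writes with a lock. It does not touch the network: `fake_page` is a hypothetical stand-in for the `requests.get` + lxml step, and the 25-films-per-page offset (`start=0, 25, ..., 225`) is an assumption about how the Top 250 list is paginated.

```python
import csv
import io
import threading
from concurrent.futures import ThreadPoolExecutor

PAGE_SIZE = 25  # assumption: 25 films per Top 250 page


def page_urls(total=250, page_size=PAGE_SIZE):
    # one URL per page: start=0, 25, 50, ..., 225
    return [f"https://movie.douban.com/top250?start={start}&filter="
            for start in range(0, total, page_size)]


def fake_page(url):
    # hypothetical stand-in for downloading and parsing one page
    start = int(url.split("start=")[1].split("&")[0])
    return [(f"title {start + k}", "9.0") for k in range(PAGE_SIZE)]


def scrape_all(out):
    writer = csv.writer(out)
    lock = threading.Lock()  # serialize writes to the shared writer

    def worker(url):
        rows = fake_page(url)
        with lock:  # only one thread writes at a time
            writer.writerows(rows)
        return url

    with ThreadPoolExecutor(max_workers=5) as pool:
        futures = [pool.submit(worker, u) for u in page_urls()]
        for fut in futures:
            fut.result()  # re-raises any exception raised inside worker()
    # `out` is NOT closed here; the caller closes it once, after the pool is done


buf = io.StringIO()
scrape_all(buf)
print(len(page_urls()))                  # 10
print(len(buf.getvalue().splitlines()))  # 250
```

With a real file, `with open("data1.csv", "w", encoding="utf-8", newline="") as f: scrape_all(f)` closes it exactly once, after all pages have been written.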

Here is the output. I'm not sure it even counts as an error, but it shows up every time I run the code:

Also, notice that only the final `print` ran; the `print` inside the `def` function body was skipped entirely.

```
C:\Python\Python37\python.exe D:/文档/研究生阶段/python/爬虫/小试牛刀/线程池和进程池实战.py
C:\Python\Python37\lib\site-packages\requests\__init__.py:104: RequestsDependencyWarning: urllib3 (1.26.8) or chardet (2.0.3)/charset_normalizer (2.0.10) doesn't match a supported version!
  RequestsDependencyWarning)
全部下载完毕

Process finished with exit code 0
```
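On the point that the `print` inside the function never showed up: according to the `concurrent.futures` documentation, an exception raised inside a task passed to `submit()` is stored in the returned `Future` and is only re-raised when `result()` is called, so by default nothing is printed. A minimal sketch, where `might_fail` is a hypothetical task that raises `IndexError` for odd inputs (the same exception `xpath(...)[0]` raises when the XPath matches nothing):

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def might_fail(n):
    # hypothetical task: indexing an empty list with [0] raises IndexError,
    # just like xpath(...)[0] when nothing matches
    items = [] if n % 2 else [n]
    value = items[0]
    print(n, "done")  # never reached when the line above raises
    return value


def run(numbers):
    ok, failed = [], []
    with ThreadPoolExecutor(max_workers=4) as pool:
        futures = {pool.submit(might_fail, n): n for n in numbers}
        for fut in as_completed(futures):
            n = futures[fut]
            try:
                ok.append(fut.result())  # the worker's exception is re-raised here
            except IndexError as exc:
                failed.append(n)
                print(n, "failed:", repr(exc))
    return sorted(ok), sorted(failed)


print(run(range(6)))  # ([0, 2, 4], [1, 3, 5])
```

Without the `fut.result()` call, the failures for 1, 3 and 5 would pass silently, which matches the symptom of only the final `print` appearing.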