我打印的所有的ip全是错误的

但是我自己单独使用不出错 应该是verify函数出错了 但是不知道怎么改 感觉timeout还没判断就执行到except去了

要没有人能够看出问题 下面是代码

import json

import requests

from bs4 import BeautifulSoup

import aiohttp

import aiofiles

import asyncore

import json

from lxml import etree

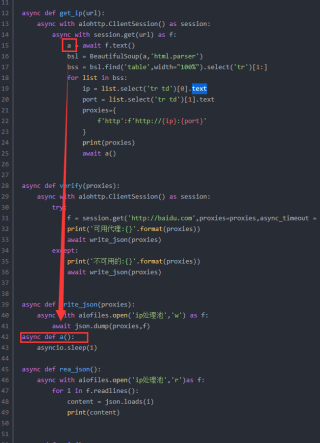

async def get_ip(url):

async with aiohttp.ClientSession() as session:

async with session.get(url) as f:

a = await f.text()

bsl = BeautifulSoup(a,'html.parser')

bss = bsl.find('table',width="100%").select('tr')[1:]

for list in bss:

ip = list.select('tr td')[0].text

port = list.select('tr td')[1].text

proxies={

f'http':f'http://{ip}:{port}',

f'https':f'https://{ip}:{port}'

}

asyncio.gather(verify(proxies))

async def verify(proxies):

async with aiohttp.ClientSession() as session:

try:

f = await session.get('https://www.baidu.com',proxies=proxies,async_timeout = 3)

print('可用代理:{}'.format(proxies))

await write_json(proxies)

except:

print('不可用的:{}'.format(proxies))

async def write_json(proxies):

async with aiofiles.open('ip处理池.json','a') as f:

await json.dump(proxies,f)

async def rea_json():

async with aiofiles.open('ip处理池.json','r')as f:

for i in f.readlines():

content = json.loads(i.strip())

print(content)

async def main():

tasks = []

for i in range(100):

url = f'http://www.66ip.cn/{i}.html'

tasks.append(asyncio.create_task(get_ip(url)))

await asyncio.wait(tasks)

if __name__ == '__main__':

loop = asyncio.get_event_loop()

loop.run_until_complete(main())