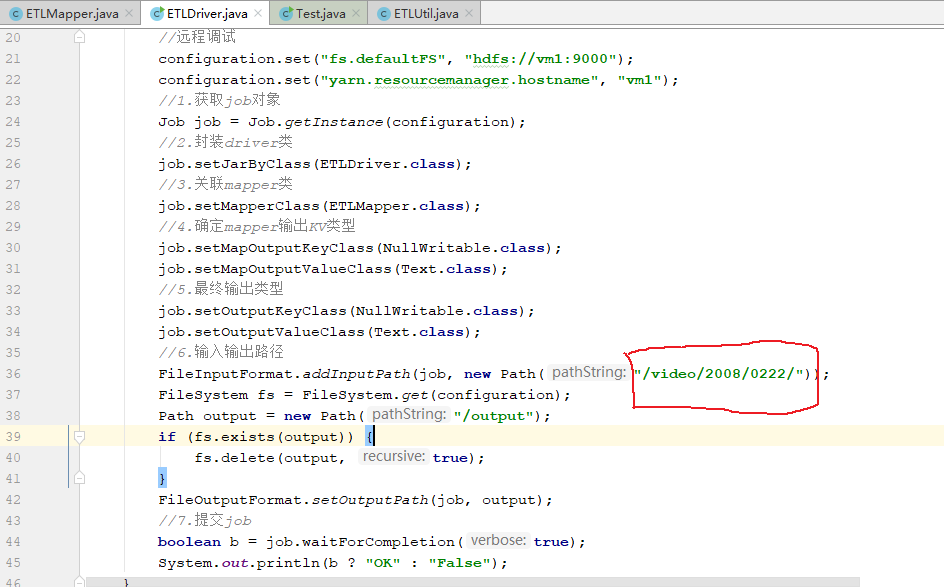

Why does this path have to include the file name? Otherwise a NullPointerException is thrown.

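When debugging Hadoop locally, the job runs without error only if the input path points to a specific file. If the path does not name a specific file, a NullPointerException is thrown.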


1 answer

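司砚章 2019-07-28 15:38

I recently ran into a learning-rate problem while programming. From what I found, the default learning rate in keras is 0.01, and I want to change that default. Online sources say to modify the optimizer.py file under the keras installation path, but there are several optimizer.py files and I don't know which one to modify...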
