1 answer

繁华三千东流水 2019-08-28 11:09

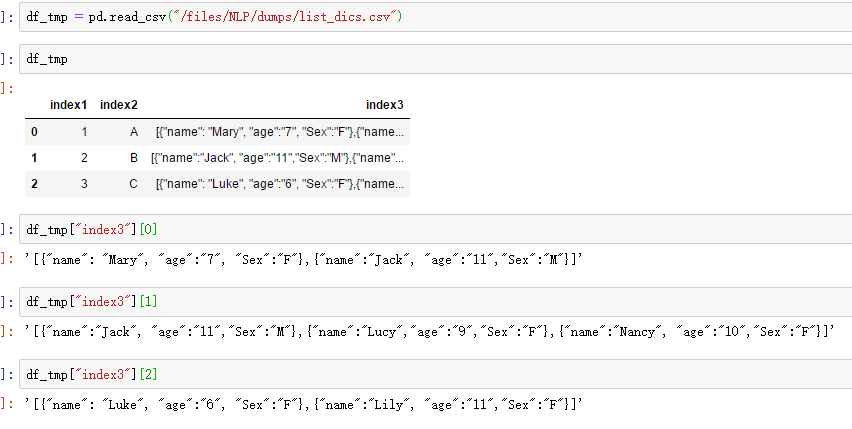

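If you only need the values of `name`, a regular expression match may be faster. First take out the third column, convert the list in each row to a string, then use `re.findall` to match the value that follows `name`.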

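    import re

    # Sample data: a list of dicts, as found in one row of the column
    ss = [{'name': 'aaa', 'age': '17'}, {'name': 'bbb', 'age': '17'}]

    # Convert the list to its string representation so a regex can search it
    ss = str(ss)
    # print(ss)

    # Capture the lowercase letters that follow "name': '"
    s = re.findall(r".*?name': '([a-z]+)", ss)
    print(s)
    '''
    >>['aaa', 'bbb']
    '''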
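As a rough sketch, the same idea could be applied row by row as shown below. The `rows` data, the `NAME_PATTERN` constant, and the `extract_names` helper are all hypothetical, made up for illustration. The pattern is widened from `[a-z]+` to `[^']+` on the assumption that a name may contain characters other than lowercase letters.

```python
import re

# Hypothetical sample data: each row holds a list of dicts (the "third column").
rows = [
    [{'name': 'aaa', 'age': '17'}, {'name': 'bbb', 'age': '17'}],
    [{'name': 'Carol', 'age': '20'}],
]

# Matches the value after 'name': in the text produced by str().
# Assumption: the value itself contains no single quote.
NAME_PATTERN = re.compile(r"'name': '([^']+)'")


def extract_names(row):
    """Return every name value found in the string form of one row."""
    return NAME_PATTERN.findall(str(row))


names_per_row = [extract_names(row) for row in rows]
print(names_per_row)
```

If each row already holds real `dict` objects rather than text, a plain list comprehension such as `[d['name'] for d in row]` gives the same values without going through a string, so it is worth checking which form the data is actually in.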
