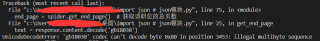

What is going on here? I have been changing this for ages and it still does not work.

import csv
import json
import re

import requests
from lxml import etree

class Spider(object):
    def __init__(self):
        self.keyword = input("Enter a search keyword: ")
        # Search results URL template: the first {} is the keyword, the second {} is the page number
        self.url = 'https://search.51job.com/list/000000,000000,0000,00,9,99,{},2,{}.html'
        self.headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.117 Safari/537.36',
'cookie': '_uab_collina=162694245076236003474511; guid=2806f21dc07e57b92a6d38ae6ad831db; nsearch=jobarea%3D%26%7C%26ord_field%3D%26%7C%26recentSearch0%3D%26%7C%26recentSearch1%3D%26%7C%26recentSearch2%3D%26%7C%26recentSearch3%3D%26%7C%26recentSearch4%3D%26%7C%26collapse_expansion%3D; search=jobarea%7E%60000000%7C%21ord_field%7E%600%7C%21recentSearch0%7E%60000000%A1%FB%A1%FA000000%A1%FB%A1%FA0000%A1%FB%A1%FA00%A1%FB%A1%FA99%A1%FB%A1%FA%A1%FB%A1%FA99%A1%FB%A1%FA99%A1%FB%A1%FA99%A1%FB%A1%FA99%A1%FB%A1%FA9%A1%FB%A1%FA99%A1%FB%A1%FA%A1%FB%A1%FA0%A1%FB%A1%FA%C9%CC%CE%F1%D3%A2%D3%EF%A1%FB%A1%FA2%A1%FB%A1%FA1%7C%21; acw_tc=2f624a4216269424503425150e0da85d25580876b2bc112b8d45305e878b60; acw_sc__v2=60f92bf226271b72b3adf6a65ef4bd4094c491a1; ssxmod_itna=QqmxnQi=qmuDB7DzOD2YLDkYOIhm0DDTaRweqxKDspbDSxGKidDqxBmnj2wAehYi+zDBIaoe=Du+QLzm2idILYIYfAa/UDB3DEx064btxYAkDt4DTD34DYDixibsxi5GRD0KDF8NVt/yDi3DbE=Di4D+8MQDmqG0DDU7S4G2D7U9R7Dr8q2U7nt3EkDeLA+9D0tdxBLeFpTYOcarBqrb=1K4KDDHD8H=9cDKY8GxHgQDzqODtqNMSMd3yPtVRk0s+SDolhGTK7P4fGAG7BhzlIx=b+hnv0Dbf0D+YbudUiL6xDf57B5QeD==; ssxmod_itna2=QqmxnQi=qmuDB7DzOD2YLDkYOIhm0DDTaRweqxikfoOqDlZ0DjbP6kjY6h6xlUxnzDCA6QPs7q0=PLYWwuza8UL+RLPoiBAFL=xnR6L1xqP8B2Fr8=xT67LRjkMy+62=MZEon/zHzWxTPGiqxdcqqQ0K=y=DbyjqrMCHWWfFrt3bIw2B150E=SnYWY2P7dZIi+=FTE3K80nq7eWfj=Fr=4SI7S+nLg/K0OlKXNZ9jaj96GEkTLSQQv+PbLflkyG3=Y1y1Z68NhO4Us7qqNQStVY90lDw7edKHeoFRuV/c=NnM2v3Z2TQS=dVZUTgKS63hiXTcqSp6K+NC6N8cYY96Mc=PKrujN3Er32PKmohY50Dr3O25tratE3UbbZb3S0K1XdPTkrrOFi8trK9To+iHUih/Uetr0rqrITbFW7/DaDG2z0qYDQKGmBRxtYa6S=AfhQifW9bD08DiQrYD==='

        }
        # Column names of the output CSV file
        self.header = ['position', 'company', 'wages', 'place', 'education', 'work_experience', 'release_date',
                       'limit_people', 'address', 'company_type', 'company_size', 'industry']
        # utf-8-sig writes a BOM so that Excel detects the encoding of the Chinese text
        self.fp = open('{}.csv'.format(self.keyword), 'a', encoding='utf-8-sig', newline='')
        self.writer = csv.DictWriter(self.fp, self.header)
        self.writer.writeheader()

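    def get_end_page(self):
        response = requests.get(self.url.format(self.keyword, str(1)), headers=self.headers)
        text = response.content.decode('gb18030')
        # The search page embeds its results as JSON assigned to window.__SEARCH_RESULT__
        json_obj = re.findall(r'window\.__SEARCH_RESULT__ = (.*?)</script>', text)
        print(json_obj)  # debug output: an empty list means the marker was not found in the page
        if not json_obj:
            raise RuntimeError("window.__SEARCH_RESULT__ not found in the response")
        py_obj = json.loads(json_obj[0])
        end_page = py_obj['total_page']
        return end_page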

    def get_url(self, count):
        response = requests.get(url=self.url.format(self.keyword, count), headers=self.headers)
        text = response.content.decode('gb18030')
        json_obj = re.findall(r'window\.__SEARCH_RESULT__ = (.*?)</script>', text)
        if not json_obj:
            # No embedded results on this page, so there is nothing to follow
            return []
        py_obj = json.loads(json_obj[0])
        # Each search result carries the URL of its job detail page in 'job_href'
        detail_urls = [i['job_href'] for i in py_obj['engine_search_result']]
        return detail_urls

    def parse_url(self, url):
        response = requests.get(url=url, headers=self.headers)
        try:  # Decoding or parsing can fail here: a few pages have an unusual structure, so skip them
            text = response.content.decode('gb18030')
            html = etree.HTML(text)
            position = html.xpath("//div[@class='tHeader tHjob']//div[@class='cn']/h1/@title")[0]  # job title
        except Exception as e:
            print("Unusual page: {}; skipping it and moving on to the next detail URL".format(e))
            return
        company = "".join(html.xpath("//div[@class='tHeader tHjob']//div[@class='cn']/p[1]/a[1]//text()"))  # company name
        wages = "".join(html.xpath("//div[@class='tHeader tHjob']//div[@class='cn']/strong/text()"))  # salary
        # Location, experience, education and similar fields share one paragraph
        informations = html.xpath("//div[@class='tHeader tHjob']//div[@class='cn']/p[2]/text()")
        informations = [i.strip() for i in informations]
        place = informations[0] if informations else ''
        # Education: keep the entries that are a substring of the concatenated education levels
        education = "".join([i for i in informations if i in '初中及以下高中/中技/中专大专本科硕士博士无学历要求'])
        work_experience = "".join([i for i in informations if '经验' in i])
        release_date = "".join([i for i in informations if '发布' in i])
        limit_people = "".join([i for i in informations if '招' in i])
        address = "".join(html.xpath("//div[@class='tCompany_main']/div[2]/div[@class='bmsg inbox']/p/text()"))  # work address
        company_type = "".join(html.xpath("//div[@class='tCompany_sidebar']/div[1]/div[2]/p[1]/@title"))  # company type
        company_size = "".join(html.xpath("//div[@class='tCompany_sidebar']/div[1]/div[2]/p[2]/@title"))  # company size
        industry = "".join(html.xpath("//div[@class='tCompany_sidebar']/div[1]/div[2]/p[3]/@title"))  # industry
        item = {'position': position, 'company': company, 'wages': wages, 'place': place, 'education': education,
                'work_experience': work_experience, 'release_date': release_date, 'limit_people': limit_people,
                'address': address, 'company_type': company_type, 'company_size': company_size,
                'industry': industry}
        print(item)
        self.writer.writerow(item)

if __name__ == '__main__':
    print("Spider started")
    spider = Spider()
    end_page = spider.get_end_page()  # total number of result pages for this keyword
    print("Total pages: {}".format(end_page))
    page = input("Number of pages to scrape: ")
    for count in range(1, int(page) + 1):
        detail_urls = spider.get_url(count)
        for detail_url in detail_urls:
            spider.parse_url(detail_url)
        print("Finished page {}".format(count))
    spider.fp.close()
    print("Scraping finished")
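
Since the traceback is only in the screenshot, this is a guess rather than a diagnosis: one fragile spot in the script is `json_obj[0]` in `get_end_page` and `get_url`, which raises `IndexError` whenever the page does not contain `window.__SEARCH_RESULT__` (for example if the site returns a verification page instead of results). Below is a minimal sketch of a helper that makes that case explicit. The name `extract_search_result` and the two sample page strings are hypothetical, made up only for illustration.

```python
import json
import re

# Same marker the script searches for; dots are escaped and DOTALL lets the JSON span lines.
SEARCH_RESULT_RE = re.compile(r'window\.__SEARCH_RESULT__\s*=\s*(.*?)</script>', re.DOTALL)


def extract_search_result(text):
    """Return the JSON embedded in a search page as a dict, or None if it is missing or invalid."""
    match = SEARCH_RESULT_RE.search(text)
    if match is None:
        return None
    try:
        return json.loads(match.group(1))
    except json.JSONDecodeError:
        return None


# Hypothetical page fragments, used only to exercise the helper
good_page = '<script>window.__SEARCH_RESULT__ = {"total_page": "3", "engine_search_result": []}</script>'
blocked_page = '<html><body>verification required</body></html>'

print(extract_search_result(good_page))
print(extract_search_result(blocked_page))
```

If the helper returns `None` for a real response, printing the first few hundred characters of `text` should show what the server actually sent back, which is usually more informative than the empty list from `re.findall`.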