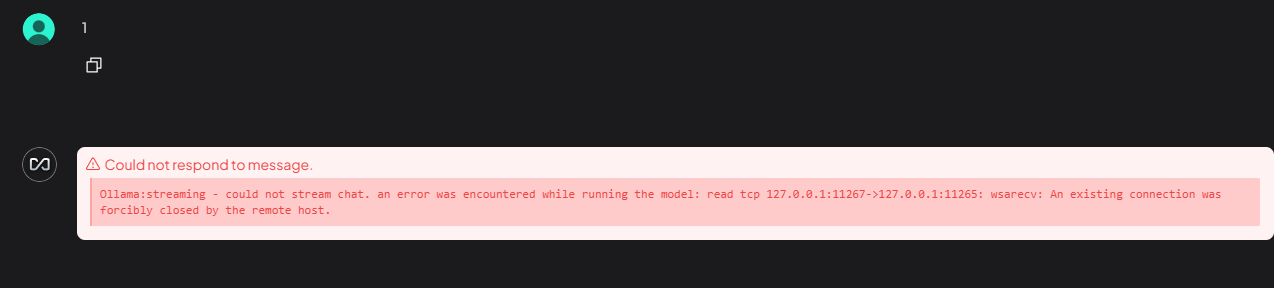

I'm getting an error and need help fixing it: Could not respond to message.

Ollama:streaming - could not stream chat. an error was encountered while running the model: read tcp 127.0.0.1:11267->127.0.0.1:11265: wsarecv: An existing connection was forcibly closed by the remote host.

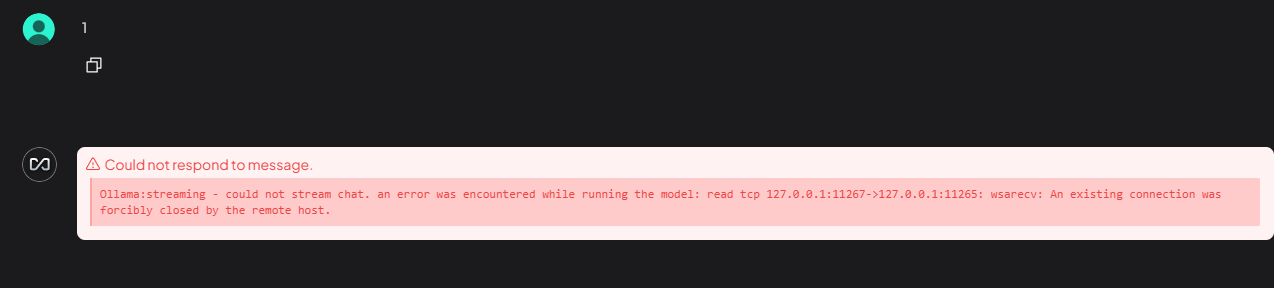

Compiled by 阿里嘎多学长 (AIGC-generated).

You have run into a failed request with the error message "Could not respond to message. Ollama:streaming - could not stream chat. an error was encountered", and you are using Python.

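Both ends of the reset connection are 127.0.0.1, so the connection that was forcibly closed is a local one inside Ollama, and the message itself says the error occurred "while running the model". This most likely means the process running the model exited during generation (for example, because it ran out of memory) rather than a bug in your Python code, although a client-side problem cannot be ruled out from the message alone.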

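As a first step, check that your code can reach the Ollama server at all. Here is a simple example that connects to the local Ollama server (by default it listens on `http://localhost:11434` and needs no API key):

```python
import requests

# Default address of a local Ollama server; /api/tags lists the installed models.
url = "http://localhost:11434/api/tags"

try:
    response = requests.get(url, timeout=5)
    if response.status_code == 200:
        print("Connected to Ollama successfully")
    else:
        print("Error connecting to Ollama:", response.text)
except requests.exceptions.RequestException as e:
    print("Error connecting to Ollama:", e)
```

If you have changed the address Ollama listens on (for example via the `OLLAMA_HOST` environment variable), adjust `url` accordingly.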
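Once the server is reachable, you can reproduce the failing streaming request directly, outside the client that reported the error, to see whether the model itself fails. This is a minimal sketch that uses only the standard library and assumes the default address `http://localhost:11434`, Ollama's `/api/chat` endpoint returning newline-delimited JSON chunks, and that a mid-stream failure arrives as an object with an `error` key. `collect_stream` and `stream_chat` are hypothetical helper names, not part of any library.

```python
import json
import urllib.request
from typing import Iterable, Union

# Assumption: Ollama runs locally on its default port (not changed via OLLAMA_HOST).
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def collect_stream(lines: Iterable[Union[bytes, str]]) -> str:
    """Join the content chunks of a newline-delimited JSON chat stream.

    Each non-empty line is one JSON object. An "error" key (assumed format
    for a server-side failure) is turned into a RuntimeError.
    """
    parts = []
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        if not line:
            continue  # skip blank keep-alive lines
        chunk = json.loads(line)
        if "error" in chunk:
            raise RuntimeError("Ollama reported an error: " + str(chunk["error"]))
        parts.append(chunk.get("message", {}).get("content", ""))
        if chunk.get("done"):
            break  # the final chunk marks the end of the reply
    return "".join(parts)


def stream_chat(model: str, prompt: str, timeout: float = 300.0) -> str:
    """Send one streaming chat request and return the assembled reply."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": True,
    }).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_CHAT_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        # Iterating over the HTTP response yields the body line by line.
        return collect_stream(resp)
```

For example, `print(stream_chat("llama3", "Hello"))`, where `"llama3"` is a placeholder for a model you actually have installed. `urlopen` raises `urllib.error.URLError` if the server cannot be reached and `urllib.error.HTTPError` for a non-2xx status. If this direct request fails in the same way, the problem is on the Ollama/model side rather than in your own code.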

If you still run into problems, share more of your code and the error logs, and I will do my best to help you solve it.
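The `wsarecv` in the error indicates Windows, where the Ollama server log is usually the most useful log to share. A minimal sketch that prints its last lines, assuming the default Windows location `%LOCALAPPDATA%\Ollama\server.log` (adjust the path if your installation differs); `default_server_log` and `tail` are hypothetical helper names:

```python
import os
from collections import deque
from pathlib import Path
from typing import List


def default_server_log() -> Path:
    # Assumption: default Windows install, which writes server.log under %LOCALAPPDATA%\Ollama.
    return Path(os.environ.get("LOCALAPPDATA", "")) / "Ollama" / "server.log"


def tail(path: Path, n: int = 50) -> List[str]:
    """Return the last n lines of a text file (undecodable bytes are replaced)."""
    with open(path, encoding="utf-8", errors="replace") as f:
        return list(deque(f, maxlen=n))


if __name__ == "__main__":
    log = default_server_log()
    if log.is_file():
        for line in tail(log):
            print(line, end="")
    else:
        print("No log found at", log)
```

Run it right after the error occurs so the last lines cover the failed request.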