Flink local mode: running Flink's bundled example jar keeps failing

Start Flink:

Run: `bin/flink run examples/streaming/SocketWindowWordCount.jar --port 8888`

I use `nc -lk 8888` to simulate the socket input. The job then fails with an error, and the web UI can no longer be opened either.
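If you'd rather script the input than type into `nc`, a rough Python stand-in for `nc -lk 8888` is sketched below. It assumes, as with `nc -lk` in this setup, that `SocketWindowWordCount` connects as a TCP client to `localhost` on the given port (only `--port` is passed) and reads newline-separated text. `serve_lines` and its `on_listening` callback are hypothetical names, and unlike `nc -k` the sketch serves a single connection and then exits.

```python
import socket
from typing import Callable, Iterable, Optional


def serve_lines(
    lines: Iterable[str],
    host: str = "localhost",
    port: int = 8888,
    on_listening: Optional[Callable[[int], None]] = None,
) -> None:
    """Listen on host:port, accept one client, and send each line terminated by '\n'.

    Hypothetical helper: roughly what typing words into `nc -lk 8888` does,
    except that it serves only one connection and then closes it.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as srv:
        # Allow quick restarts on the same port.
        srv.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        srv.bind((host, port))
        srv.listen(1)
        if on_listening is not None:
            # Report the port actually bound (useful when port=0 lets the OS choose).
            on_listening(srv.getsockname()[1])
        conn, _addr = srv.accept()
        with conn:
            for line in lines:
                conn.sendall((line + "\n").encode("utf-8"))
```

Start it before submitting the job, e.g. `serve_lines(["hello flink", "hello world"])`, then run the `bin/flink run ... --port 8888` command as before. The listener has to be up first, because the job opens the connection as soon as it starts.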

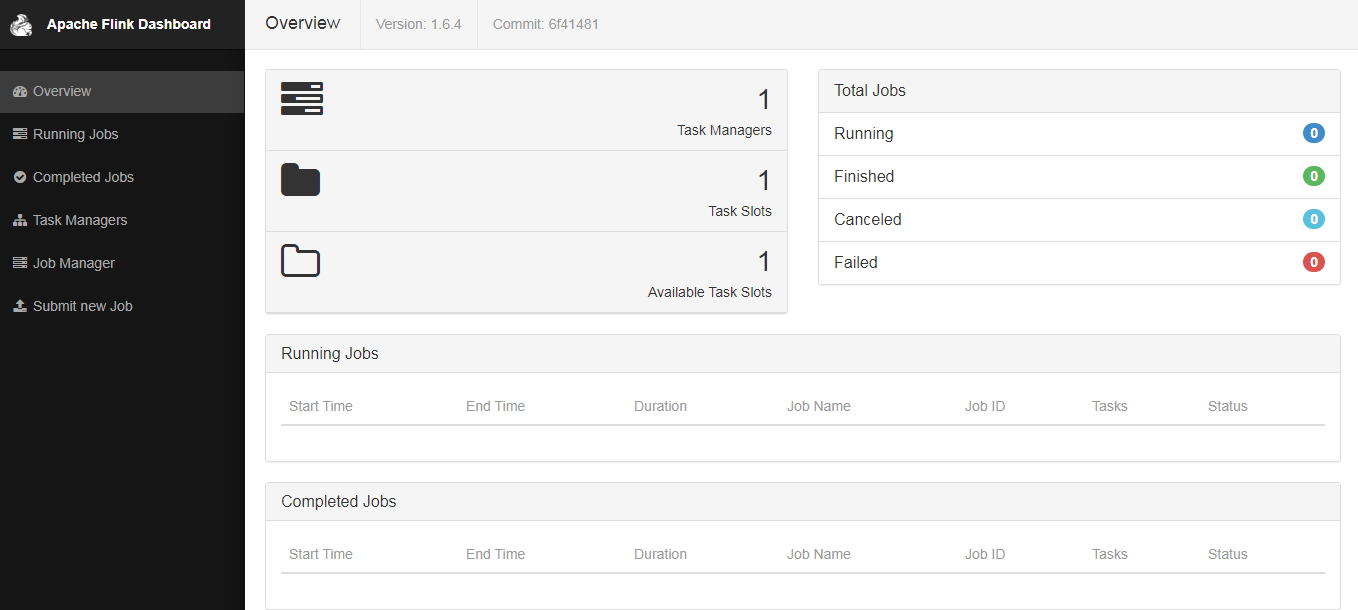

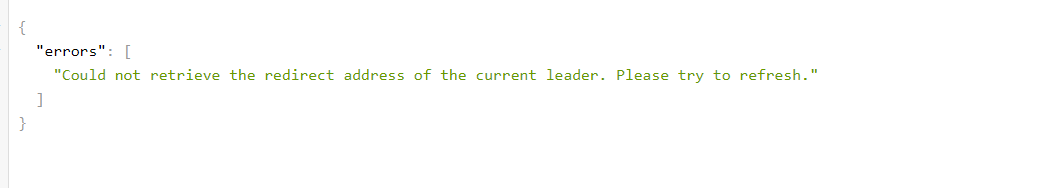

The web UI shows:

Error reported on the backend:

org.apache.flink.client.program.ProgramInvocationException: Could not retrieve the execution result. (JobID: f2ee89e0ed991f22ed9eaec00edfd789)

at org.apache.flink.client.program.rest.RestClusterClient.submitJob(RestClusterClient.java:261)

at org.apache.flink.client.program.ClusterClient.run(ClusterClient.java:486)

at org.apache.flink.streaming.api.environment.StreamContextEnvironment.execute(StreamContextEnvironment.java:66)

at org.apache.flink.streaming.examples.socket.SocketWindowWordCount.main(SocketWindowWordCount.java:92)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:483)

at org.apache.flink.client.program.PackagedProgram.callMainMethod(PackagedProgram.java:529)

at org.apache.flink.client.program.PackagedProgram.invokeInteractiveModeForExecution(PackagedProgram.java:421)

at org.apache.flink.client.program.ClusterClient.run(ClusterClient.java:426)

at org.apache.flink.client.cli.CliFrontend.executeProgram(CliFrontend.java:816)

at org.apache.flink.client.cli.CliFrontend.runProgram(CliFrontend.java:290)

at org.apache.flink.client.cli.CliFrontend.run(CliFrontend.java:216)

at org.apache.flink.client.cli.CliFrontend.parseParameters(CliFrontend.java:1053)

at org.apache.flink.client.cli.CliFrontend.lambda$main$11(CliFrontend.java:1129)

at org.apache.flink.client.cli.CliFrontend$$Lambda$1/1977310713.call(Unknown Source)

at org.apache.flink.runtime.security.HadoopSecurityContext$$Lambda$2/1169474473.run(Unknown Source)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1754)

at org.apache.flink.runtime.security.HadoopSecurityContext.runSecured(HadoopSecurityContext.java:41)

at org.apache.flink.client.cli.CliFrontend.main(CliFrontend.java:1129)

Caused by: org.apache.flink.runtime.client.JobSubmissionException: Failed to submit JobGraph.

at org.apache.flink.client.program.rest.RestClusterClient.lambda$submitJob$8(RestClusterClient.java:380)

at org.apache.flink.client.program.rest.RestClusterClient$$Lambda$11/256346753.apply(Unknown Source)

at java.util.concurrent.CompletableFuture$ExceptionCompletion.run(CompletableFuture.java:1246)

at java.util.concurrent.CompletableFuture.postComplete(CompletableFuture.java:193)

at java.util.concurrent.CompletableFuture.internalComplete(CompletableFuture.java:210)

at java.util.concurrent.CompletableFuture$ThenApply.run(CompletableFuture.java:723)

at java.util.concurrent.CompletableFuture.postComplete(CompletableFuture.java:193)

at java.util.concurrent.CompletableFuture.internalComplete(CompletableFuture.java:210)

at java.util.concurrent.CompletableFuture$ThenCopy.run(CompletableFuture.java:1333)

at java.util.concurrent.CompletableFuture.postComplete(CompletableFuture.java:193)

at java.util.concurrent.CompletableFuture.completeExceptionally(CompletableFuture.java:2361)

at org.apache.flink.runtime.concurrent.FutureUtils.lambda$retryOperationWithDelay$5(FutureUtils.java:203)

at org.apache.flink.runtime.concurrent.FutureUtils$$Lambda$21/100819684.accept(Unknown Source)

at java.util.concurrent.CompletableFuture$WhenCompleteCompletion.run(CompletableFuture.java:1298)

at java.util.concurrent.CompletableFuture.postComplete(CompletableFuture.java:193)

at java.util.concurrent.CompletableFuture.internalComplete(CompletableFuture.java:210)

at java.util.concurrent.CompletableFuture$ThenCopy.run(CompletableFuture.java:1333)

at java.util.concurrent.CompletableFuture.postComplete(CompletableFuture.java:193)

at java.util.concurrent.CompletableFuture.internalComplete(CompletableFuture.java:210)

at java.util.concurrent.CompletableFuture$AsyncCompose.exec(CompletableFuture.java:626)

at java.util.concurrent.CompletableFuture$Async.run(CompletableFuture.java:428)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Caused by: org.apache.flink.runtime.rest.util.RestClientException: [Internal server error., <Exception on server side:

akka.pattern.AskTimeoutException: Ask timed out on [Actor[akka://flink/user/dispatcher#1680779493]] after [12000 ms]. Sender[null] sent message of type "org.apache.flink.runtime.rpc.messages.LocalFencedMessage".

at akka.pattern.PromiseActorRef$$anonfun$1.apply$mcV$sp(AskSupport.scala:604)

at akka.actor.Scheduler$$anon$4.run(Scheduler.scala:126)

at scala.concurrent.Future$InternalCallbackExecutor$.unbatchedExecute(Future.scala:601)

at scala.concurrent.BatchingExecutor$class.execute(BatchingExecutor.scala:109)

at scala.concurrent.Future$InternalCallbackExecutor$.execute(Future.scala:599)

at akka.actor.LightArrayRevolverScheduler$TaskHolder.executeTask(LightArrayRevolverScheduler.scala:329)

at akka.actor.LightArrayRevolverScheduler$$anon$4.executeBucket$1(LightArrayRevolverScheduler.scala:280)

at akka.actor.LightArrayRevolverScheduler$$anon$4.nextTick(LightArrayRevolverScheduler.scala:284)

at akka.actor.LightArrayRevolverScheduler$$anon$4.run(LightArrayRevolverScheduler.scala:236)

at java.lang.Thread.run(Thread.java:745)

End of exception on server side>]

at org.apache.flink.runtime.rest.RestClient.parseResponse(RestClient.java:350)

at org.apache.flink.runtime.rest.RestClient.lambda$submitRequest$3(RestClient.java:334)

at org.apache.flink.runtime.rest.RestClient$$Lambda$31/1522310222.apply(Unknown Source)

at java.util.concurrent.CompletableFuture$AsyncCompose.exec(CompletableFuture.java:604)

... 4 more
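The trace nests several exceptions, so it can help to list them in order before reading the frames. A small heuristic sketch in Python (the name `exception_chain` is hypothetical, not part of Flink) that pulls exception class names out of a pasted Java stack trace:

```python
import re
from typing import List

# Matches a Java exception header such as
# "Caused by: org.example.FooException: message" or "akka.pattern.AskTimeoutException: ...".
# Stack frames start with "at" and "... N more" starts with dots, so neither matches.
_EXC_HEADER = re.compile(
    r"^\s*(?:Caused by:\s*)?((?:[\w$]+\.)+[\w$]*(?:Exception|Error))\b"
)


def exception_chain(trace: str) -> List[str]:
    """Return exception class names in the order they appear in a stack trace.

    Heuristic: an exception embedded in another exception's message (such as
    a server-side exception wrapped in a REST error) is picked up as well,
    as long as it starts its own line.
    """
    chain = []
    for line in trace.splitlines():
        match = _EXC_HEADER.match(line)
        if match:
            chain.append(match.group(1))
    return chain
```

Applied to the trace above, it lists `ProgramInvocationException`, `JobSubmissionException`, `RestClientException`, and finally the server-side `akka.pattern.AskTimeoutException`: the dispatcher did not answer the job-submission message within 12000 ms.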

I searched online and added the following parameters, but the problem persists:

akka.ask.timeout: 60 s

web.timeout: 12000

taskmanager.host: localhost
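The `AskTimeoutException` in the trace reports `after [12000 ms]`, while these settings use different notations. The Python sketch below puts them in a common unit so the numbers can be compared. `to_millis` and `parse_timeouts` are hypothetical helpers, and they assume `akka.ask.timeout` is written with a unit (`60 s`) while `web.timeout` is a plain millisecond count.

```python
import re
from typing import Dict

# Assumed unit table for this sketch; a value without a unit is treated as
# milliseconds, matching how web.timeout is written above.
_UNITS_MS = {"ms": 1, "s": 1000, "min": 60_000}


def to_millis(value: str) -> int:
    """Convert a duration like '60 s' or '12000' to milliseconds."""
    match = re.fullmatch(r"\s*(\d+)\s*([a-z]*)\s*", value)
    if match is None:
        raise ValueError(f"unrecognized duration: {value!r}")
    number, unit = int(match.group(1)), match.group(2) or "ms"
    return number * _UNITS_MS[unit]


def parse_timeouts(conf_text: str) -> Dict[str, int]:
    """Return {key: milliseconds} for every '*.timeout' key in 'key: value' lines."""
    result = {}
    for line in conf_text.splitlines():
        key, sep, value = line.partition(":")
        key = key.strip()
        if sep and key.endswith(".timeout"):
            result[key] = to_millis(value)
    return result
```

With the three lines above it returns `{"akka.ask.timeout": 60000, "web.timeout": 12000}`. The 12000 ms in the error matches `web.timeout`, not the 60 s `akka.ask.timeout`, which hints that the ask on this REST job-submission path is bounded by `web.timeout`.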

Has anyone run into this?