本人小白,通过学习网上的Bp算法的定义和公式,自己写了一个自己的Bp算法。

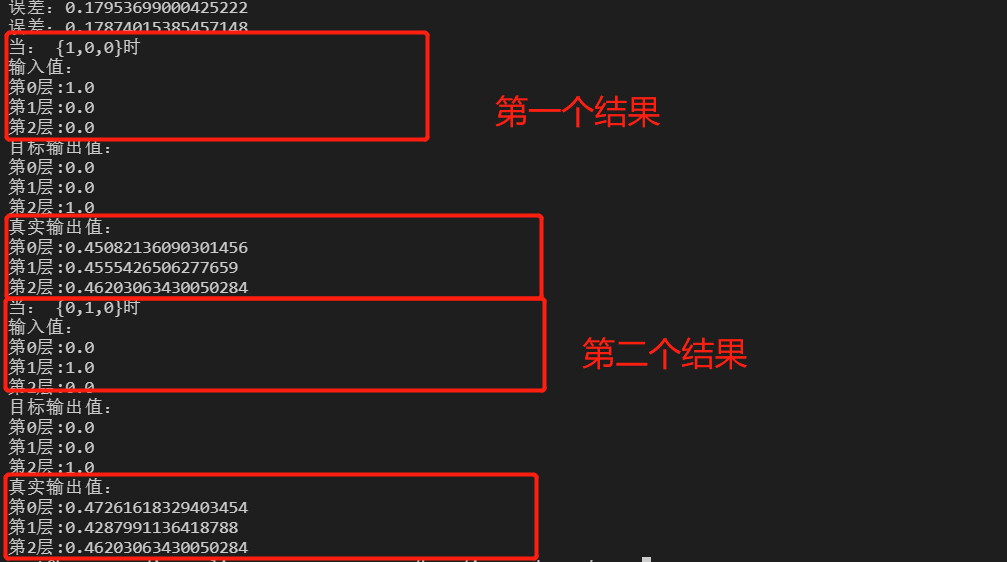

但是写完,运算 后发现其计算学习后的预测值与实际想要的不太相符,预测的数值非常平均。

平均现象如下:

麻烦各位大佬帮忙看看··这已经是我写的第三个版本了··出现的预测值还是很平均,不清楚到底是哪里出错了......真心求助

有任何疑问可以que我

我用的是java实现的。

总共有4个class

Bp.class :主类

import java.util.ArrayList;

import java.util.List;

import java.util.Random;

public class Bp{

int length;//层数长度

List<Layer> mLayer;

void setlen(int len){ //设置层数

length = len;

}

void setin(double[] in){//输入初始化

mLayer.get(0).setin(in);

}

void settarget(double[] target){//输出初始化

mLayer.get(length -1).settarget(target);

}

void init(int len,double[] in ,int nelen,double[] out){//初始化权值和偏置

//随机生成

//len 总层数

//in 输入值数组

//nelen 层中总neural个数

//out 目标输出

setlen(len);

mLayer = new ArrayList<>();

Layer inlayer = new Layer();

inlayer.addcount();

mLayer.add(inlayer);

for(int i = 0; i < len -1;i++){

Layer l = new Layer();

l.addcount();

l.randomWeight(len);

mLayer.add(l);

}

for(int j =0; j < mLayer.size();j++){

if(j == 0)

mLayer.get(j).Next = mLayer.get(j+1);

else if(j == length -1)

mLayer.get(j).pre = mLayer.get(j-1);

else{

mLayer.get(j).pre = mLayer.get(j-1);

mLayer.get(j).Next = mLayer.get(j+1);

}

}

setin(in);

settarget(out);

}

void feedward(){

for(int i =0;i< length;i++)

mLayer.get(i).feedward();

}

void backward(){

for(int i =length -1;i >= 0 ;i--)

mLayer.get(i).backward();

}

void printConstruction(){

System.out.println("输入值:");

for( int i =0; i < mLayer.get(0).length;i ++)

System.out.println("第"+i+"层:"+mLayer.get(0).lin[i]);

System.out.println("目标输出值:");

for( int i =0; i < mLayer.get(length-1).length ;i ++)

System.out.println("第"+i+"层:"+mLayer.get(length-1).lout[i]);

System.out.println("真实输出值:");

for( int i =0; i < mLayer.get(length-1).length ;i ++)

System.out.println("第"+i+"层:"+mLayer.get(length-1).realout[i]);

}

void printError(){

double sum = 0;

for( int i =0; i < mLayer.get(length-1).length ;i ++)

sum += mLayer.get(length-1).realout[i] - mLayer.get(length-1).lout[i];

sum = sum /(length -1);

System.out.println("误差:"+sum);

}

public static void main(String[] args) {

Bp backfeed = new Bp();

double[] in = {1,0,0};

double[] out = {1,0,0};

backfeed.init(3,in,3,out);

for (int i =0;i <3000;i++){

double[] in1 = {1,0,0};

double[] out1 = {1,0,0};

//Random r = new Random();

int y =0;

y = i%3;

if (y ==0){

in1 = new double[] {1,0,0};

out1 = new double[] {1,0,0};

}

if (y ==1){

in1 = new double[] {0,1,0};

out1 = new double[] {0,1,0};

}

if (y ==2){

in1 = new double[] {0,0,1};

out1 = new double[] {0,0,1};

}

backfeed.setin(in1);

backfeed.settarget(out1);

backfeed.feedward();

backfeed.backward();

backfeed.printError();

}

double[] in1 = {1,0,0};

System.out.println("当: {1,0,0}时");

in1 = new double[] {1,0,0};

backfeed.setin(in1);

backfeed.feedward();

backfeed.printConstruction();

System.out.println("当: {0,1,0}时");

in1 = new double[] {0,1,0};

backfeed.setin(in1);

backfeed.feedward();

backfeed.printConstruction();

}

}

//Layer.class

import java.util.*;

import java.lang.Math;

public class Layer{

static int _layercount = 0;//总层数

int layercount;//该层数

int length;

Neural[] neurals;

double[] lin;

double[] lout;//目标输出点

double[] net;

double[] realout;//真实输出点

Layer pre;

Layer Next;

double[] delta;//误差

double meu = Settings.meu;

void setin(double[] in){

length = in.length;

if(lin == null)

lin = new double[length];

if(net == null)

net = new double[length];

lin = in;

}

void settarget(double[] out){

length = out.length;

if(lout == null)

lout = new double[length];

lout = out;

}

void randomWeight(int len){

Random r = new Random();

length = len;

if(neurals ==null)

neurals = new Neural[length];

for(int i = 0; i < neurals.length; i++){

if (neurals[i] ==null){

neurals[i] = new Neural();

neurals[i].length = len;

}

if (neurals[i].Weight ==null)

neurals[i].Weight = new double[length];

for(int j = 0; j< neurals[i].Weight.length;j++){

neurals[i].Weight[j] = r.nextDouble();

}

}

}

void feedward(){

if(realout == null)

realout = new double[length];

if(net == null)

net = new double[length];

if(layercount == 1)

for (int i =0;i < length ;i++){

realout[i] = lin[i]; //输入层的输出 = 输入

}

else{

for (int i =0;i < length ;i++){ //这一层细胞数

double sum = 0;

for(int j =0;j< neurals[i].length;j++)//细胞数的weight总数

sum += neurals[i].Weight[j] * pre.realout[i]; //前者所有细胞的输出分别与该层这个细胞的权值相乘。

net[i] = sum;

realout[i] = sigmoid(net[i]);

}

}

//初始化

}

void calc_deta(){//计算误差delta δ

if (delta == null){

delta = new double[length];

}

if (layercount == _layercount)

for(int i = 0;i < length;i++){

double aj = realout[i];

delta[i] = aj * (1-aj)*(lout[i] -aj);

//该层下这个细胞对应delta误差

}

else{

for(int i =0; i < length;i++){

double sum =0;

double aj = realout[i];

for(int j =0; j > Next.neurals[i].Weight.length;j++){

sum += Next.delta[i] * Next.neurals[j].Weight[i];//下一层细胞的对应权值 * 通用误差δ 之和

}

delta[i] = sum * aj * (1-aj);

}

}

}

void updatew(){

if(layercount == 1)

return;

if(layercount == _layercount){

for(int i =0; i< length; i++)

for(int j =0; j < neurals[i].Weight.length;j++)

neurals[i].Weight[j] = neurals[i].Weight[j] + (delta[i] * pre.realout[i] * meu);

return;

}else{

for(int i =0; i< length; i++)

for(int j =0; j < neurals[i].Weight.length;j++)

neurals[i].Weight[j] = neurals[i].Weight[j] + (delta[i] * pre.realout[i] * meu);

}

//这一层的Δw = 这一层权值 - (上一层 与 权值 相对应的细胞输出值 * 学习率 * δ)

}

void backward(){

calc_deta();

updatew();

}

void addcount(){

_layercount++;

layercount = _layercount;

}

double sigmoid(double mnet){

return 1 / (1 + Math.exp(-mnet));

}

}

//Neurals.class

public class Neural{

int length;

double[] Weight;

}

//Settings.class

class Settings{

static double meu = 0.5;

}