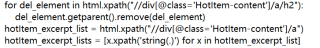

请问下图这个部分的代码是干什么用的呢

完整代码如下

import requests

from lxml import etree

from bs4 import BeautifulSoup

from requests.exceptions import RequestException

url = 'https://www.zhihu.com/hot'

soup = BeautifulSoup(open('知乎hot页面.html', encoding='utf-8'), 'lxml')

file1 = open('知乎hot页面.html', 'w', encoding='utf-8')

file1.write(soup.prettify())

file1.close()

def get_page():

try:

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/101.0.4951.54 Safari/537.36',

'cookie': r'_zap=185c368f-cac1-4124-8b97-6566c098a61d; d_c0="AEDfyG990RSPTrcXcq6AscVaAKM-SfvUl2I=|1650436018"; __snaker__id=CZaIBtjh7BGI0fe3; _9755xjdesxxd_=32; YD00517437729195%3AWM_TID=1MGOQ0YHQwZEBQEREAbEBHAyzS5C3RUR; q_c1=986cc29497854513b6ae85c82f874233|1650436058000|1650436058000; _xsrf=ca50ce23-6c53-499f-a783-541bbf89e40f; SESSIONID=x7LKH0X9dfWzWJ2ChMfsQme5YWCw9iYnLs9eOV0Tvlb; JOID=U10QBUmU-LkFdm3gG5PPY_cBFzYL6M_2Sj1ZuCHzmu58JSTWWNYoy2BxaeMcJTUeaDBulq-8tPjN1BwrJQqU4QI=; osd=VF0cAEyT-LUAc2rgF5bKZPcNEjMM6MPzTzpZtCT2ne5wICHRWNotzmdxZeYZIjUSbTVplqO5sf_N2BkuIgqY5Ac=; Hm_lvt_98beee57fd2ef70ccdd5ca52b9740c49=1650436017,1651221589,1651456645,1652075624; Hm_lpvt_98beee57fd2ef70ccdd5ca52b9740c49=1652090638; NOT_UNREGISTER_WAITING=1; gdxidpyhxdE=vhvSfmVey%2Fri2W%2BycmMJkM9wrOZ6Up8beup29UV997RDp0t1n%5CHlerKCLqSfEoPNNaQpD8M0jhULcgBjPZf9AfAuR2r83Sfsvb1nkxSzhNU8ibLcLl%2Bn35mGq7PfZ259OIGYJR5uW96L3w4A66PISuRTh2kAp%2FKHpn%2B7oSZyqcfLkLUL%3A1652092748568; YD00517437729195%3AWM_NI=y6XOSx82ILdIJiTE6%2F76xQHdhyiHhes6ZnijqsZAqjRftAoBo3y0LqLWBmH8GsLSTa1TuZPb%2BIt75iUODtARR9kfkJZ76O4CGrPeAMvbucFEyHf7Sqa19K93dccnr1YdU1Y%3D; YD00517437729195%3AWM_NIKE=9ca17ae2e6ffcda170e2e6ee87d94f9c899cd5e54287b88fa6c54e838e9eb0c54ea1b68fbbf37083efa3b4b82af0fea7c3b92abbea98b0d274fcee87ade7438ab79ca6b525f5eab8b2cb70aca7a7a9eb6fb1ea968fec62b3ad96a2f46292babca2ee4aadedb7d1cf7b90898e8ecb3485ad84b4d76bf38cbbbbd36ae997bcd8b86397918c95c45a92e7a587ea429ae9fab0b74985aea086c447b6bebb84c54fbb98aad8d27ba3eff7acbc39a8bdad8ff13b9bbc9bd2dc37e2a3; captcha_session_v2=2|1:0|10:1652092266|18:captcha_session_v2|88:ZGV1WEc5eVlPbXY3dEVVRkQ5dm1uZzRoV1hwRVlOak5PZ2ZlOGh3ZWZhdVNTZmhZcEladkYyQWt0UzVpKzNlVQ==|6601828d4cb02c5a70c72e399078a6819ddb1b6b38ee9ee4c92930f3682bc2df; captcha_ticket_v2=2|1:0|10:1652092274|17:captcha_ticket_v2|704:eyJ2YWxpZGF0ZSI6IkNOMzFfNkRZNnp4WFBGZldvQTFOd21FbFBFYWh4S184MHp0N1NxeDR4SWhpWGhHZnVkYlV0YVZXTGJJQk1YTHF3ZkxMd3lVekh5dUlkRzVIa1Nrc05FSno1OVhHQ3Z1MWdWNmNmajgyeHBnTFZLaS5xRzlGS19YZHJJWmNVSUdyay5XREFrVnpGdmhqVHg2UnVVQy1BTVk5UU84bXFkcFV0SUVobzh3aXVFcVZDdUtONmtlWGE1ZDY2RWVMMUt5NTU0em9HWVVQMGRyS1F1R0hYYXk3Q2ZtaktBei5FNnpubU9CUnpuMjl2dHlZeVF1SVVfbF9RX0JoYi1sTTFHTVVKd1pQb3Mxc3YtMS5nZnZ5ZHlsUG1CX3l6YmVGckFYak9wUVhfMFpxdjluOS5XeTkwc1ZKdkJOQjlSWHQuUFdyRGc3d0F1dmtNS0N4cVd0WndDQ182MmZob1VJUUtScHltY1pDUHZmbWNydXpYWThVbHNzXzhKY0lKdTRkblIxbk1fc2VlUFZDS1gwd3FZUWF5djRlRUEuSWNnS2FoLUZUNVd0b1FOUzE2MG1KZ1pvYkRGcjh5dXVtcy1IaGlSaDlsRlJEQTdhUEtyTzhkcDhYRld0LXUucFZhdjZTQkVRcl8xNWJ6Rml1LmltcGhDRUdUNGx4U2YxOGMyaUlfTGxGMyJ9|6a44cf5c65e83bb4dc814673fabfd4e10f679bda96786eec47a18c964f226812; z_c0=2|1:0|10:1652092290|4:z_c0|92:Mi4xQmNSWEN3QUFBQUFBUU5fSWIzM1JGQ1lBQUFCZ0FsVk5nanRtWXdEbDNHY3lwVmk3NzdfRHdVdFpoVDJ1T1lLLTBB|946777c30298838362de16cacae524be1a230e876feea46f729cd47f7ccbca5e; tst=h; KLBRSID=fe0fceb358d671fa6cc33898c8c48b48|1652092310|1652071589'

}

response = requests.get('https://www.zhihu.com/hot', headers=headers)

if response.status_code == 200:

print("网页获取成功..."+response.text)

return response

else:

print("网页获取失败...")

except RequestException:

return 'Request出现异常错误'

def parse_one_page_xpath(response):

html = etree.HTML(response.content)

hotItem_rank_list = html.xpath("//div[contains(@class,'HotItem-rank')]/text()")

hotItem_content_list = html.xpath("//div[@class='HotItem-content']/a/@href")

hotItem_title_list = html.xpath("//h2[@class='HotItem-title']/text()")

hotItem_metrics_list = html.xpath("//div[contains(@class,'HotItem-metrics')]/text()")

for del_element in html.xpath("//div[@class='HotItem-content']/a/h2"):

del_element.getparent().remove(del_element)

hotItem_excerpt_list = html.xpath("//div[@class='HotItem-content']/a")

hotItem_excerpt_lists = [x.xpath('string(.)') for x in hotItem_excerpt_list]

print(len(hotItem_rank_list))

print(len(hotItem_content_list))

print(len(hotItem_title_list))

print(len(hotItem_metrics_list))

print(len(hotItem_excerpt_list))

print(len(hotItem_excerpt_lists))

for item in range(len(hotItem_rank_list)):

yield{

'热榜排名':hotItem_rank_list[item],

'热榜链接':hotItem_content_list[item],

'热榜标题':hotItem_title_list[item],

'热榜内容':hotItem_excerpt_lists[item],

'热度':hotItem_metrics_list[item],

}

if __name__ == '__main__':

response = get_page()

for item in parse_one_page_xpath(response):

print(item)