1. Problem description

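I am trying to crawl a website with the Scrapy framework and store the scraped data in a MySQL database. The run finishes without reporting any error, but when I query the table it shows no data.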

(The project layout follows this blogger's code, which I tried to run: https://www.cnblogs.com/fromlantianwei/p/10607956.html)

2. Screenshots

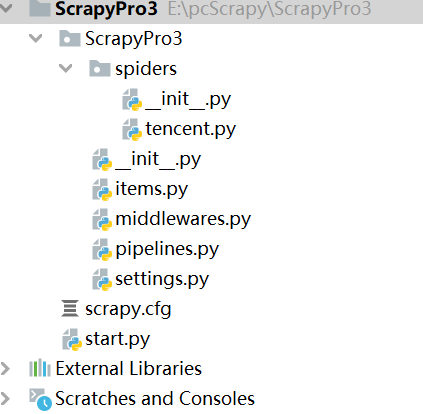

- Scrapy project:

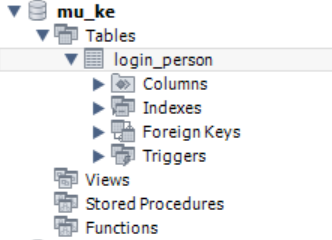

Database creation:

3. Relevant code

Scrapy project code:

(1) tencent spider file

```python
# -*- coding: utf-8 -*-
import scrapy
from urllib import parse
import re
from copy import deepcopy

from ScrapyPro3.items import ScrapyPro3Item


class tencentSpider(scrapy.Spider):
    name = 'tencent'
    allowed_domains = []
    start_urls = [
        'http://tieba.baidu.com/mo/q----,sz@320_240-1-3---2/m?kw=%E6%A1%82%E6%9E%97%E7%94%B5%E5%AD%90%E7%A7%91%E6%8A%80%E5%A4%A7%E5%AD%A6%E5%8C%97%E6%B5%B7%E6%A0%A1%E5%8C%BA&pn=26140',
    ]

    def parse(self, response):  # listing page
        item = ScrapyPro3Item()
        all_elements = response.xpath(".//div[@class='i']")
        # print(all_elements)
        for all_element in all_elements:
            content = all_element.xpath("./a/text()").extract_first()
            content = "".join(content.split())
            change = re.compile(r'[\d]+.')
            content = change.sub('', content)
            item['comment'] = content

            person = all_element.xpath("./p/text()").extract_first()
            person = "".join(person.split())
            # strip the like count and reply count
            change2 = re.compile(r'点[\d]+回[\d]+')
            person = change2.sub('', person)
            # pick out the date
            change3 = re.compile(r'[\d]?[\d]?-[\d][\d](?=)')
            date = change3.findall(person)
            # if the post is from today, pick out the time instead
            change4 = re.compile(r'[\d]?[\d]?:[\d][\d](?=)')
            time = change4.findall(person)
            person = change3.sub('', person)
            person = change4.sub('', person)
            if time == []:
                item['time'] = date
            else:
                item['time'] = time
            item['name'] = person
            # add a password and the active flag
            item['is_active'] = '1'
            item['password'] = '123456'
            print(item)
            yield item

        # next page
        """next_url = 'http://tieba.baidu.com/mo/q----,sz@320_240-1-3---2/' + parse.unquote(
            response.xpath(".//div[@class='bc p']/a/@href").extract_first())
        print(next_url)
        yield scrapy.Request(
            next_url,
            callback=self.parse,
        )"""
```
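A side note on the `time` field: `findall` returns a list, so `item['time']` in the spider holds a list rather than a string, while the table column is a `varchar`. Below is a minimal sketch of extracting a single string instead. The helper name `extract_timestamp` and the constants `DATE_RE` and `TIME_RE` are hypothetical, and the patterns are slightly tightened versions of the ones in the spider (at least one digit is required before the `-` or `:`), so treat this as an illustration rather than a drop-in replacement.

```python
import re

# Hypothetical, slightly stricter variants of the spider's patterns.
DATE_RE = re.compile(r'\d{1,2}-\d{2}')   # e.g. 12-25
TIME_RE = re.compile(r'\d{1,2}:\d{2}')   # e.g. 9:41


def extract_timestamp(text):
    """Return the first time if present, else the first date, else ''."""
    match = TIME_RE.search(text) or DATE_RE.search(text)
    return match.group(0) if match else ''


print(extract_timestamp('somebody12-25'))
print(extract_timestamp('somebody9:41'))
```

With a helper like this, `item['time']` would always be a plain string, which is what a `%s` placeholder for a `varchar` column normally expects.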

(2) items file

```python
# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy


class ScrapyPro3Item(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    comment = scrapy.Field()
    time = scrapy.Field()
    name = scrapy.Field()
    password = scrapy.Field()
    is_active = scrapy.Field()
```

(3) pipelines file

```python
# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

"""class Scrapypro3Pipeline(object):
    def process_item(self, item, spider):
        return item"""

import pymysql
from twisted.enterprise import adbapi


class Scrapypro3Pipeline(object):
    def __init__(self, dbpool):
        self.dbpool = dbpool

    @classmethod
    def from_settings(cls, settings):  # fixed name; Scrapy calls it and passes in the settings
        """
        Set up the database connection.

        :param settings: configuration parameters
        :return: a pipeline instance
        """
        adbparams = dict(
            host='localhost',
            db='mu_ke',
            user='root',
            password='root',
            cursorclass=pymysql.cursors.DictCursor  # cursor type
        )
        # create the ConnectionPool, using pymysql or MySQLdb as the driver
        dbpool = adbapi.ConnectionPool('pymysql', **adbparams)
        # return the pipeline instance
        return cls(dbpool)

    def process_item(self, item, spider):
        """
        Use Twisted to make the MySQL insert asynchronous. The SQL is run
        through the connection pool, which returns an object.
        """
        query = self.dbpool.runInteraction(self.do_insert, item)  # the function to run and its data
        # add error handling
        query.addCallback(self.handle_error)  # handle errors

    def do_insert(self, cursor, item):
        # insert into the database; no explicit commit is needed, Twisted commits automatically
        insert_sql = """
        insert into login_person(name,password,is_active,comment,time) VALUES(%s,%s,%s,%s,%s)
        """
        cursor.execute(insert_sql, (item['name'], item['password'], item['is_active'], item['comment'],
                                    item['time']))

    def handle_error(self, failure):
        if failure:
            # print the error
            print(failure)
```
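One possible reason nothing is reported: the insert runs in the background, and `handle_error` is attached with `addCallback`. On a Twisted Deferred, functions added with `addCallback` run when the operation succeeds, and functions added with `addErrback` run when it fails, so a failed insert may never reach `handle_error`. The sketch below is only an analogy built on the standard library's `concurrent.futures`, not Twisted itself. `do_insert` is a stand-in that always fails, and the error text is made up for the demonstration.

```python
from concurrent.futures import ThreadPoolExecutor


def do_insert(row):
    # Stand-in for the real database insert; it fails on purpose.
    raise RuntimeError("Unknown column 'password' in 'field list'")


errors = []


def on_done(future):
    # The exception only becomes visible when the finished future is inspected.
    exc = future.exception()
    if exc is not None:
        errors.append(str(exc))


with ThreadPoolExecutor(max_workers=1) as pool:
    future = pool.submit(do_insert, {"name": "demo"})
    future.add_done_callback(on_done)

# Without on_done, the program would finish silently despite the failure.
print(errors)
```

In the pipeline, the corresponding change to try would be attaching `handle_error` with `addErrback` instead of `addCallback`, and returning `item` from `process_item`.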

(4) settings file

```python
# -*- coding: utf-8 -*-

# Scrapy settings for ScrapyPro3 project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     https://doc.scrapy.org/en/latest/topics/settings.html
#     https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
#     https://doc.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'ScrapyPro3'

SPIDER_MODULES = ['ScrapyPro3.spiders']
NEWSPIDER_MODULE = 'ScrapyPro3.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.110 Safari/537.36'

MYSQL_HOST = 'localhost'
MYSQL_DBNAME = 'mu_ke'
MYSQL_USER = 'root'
MYSQL_PASSWD = 'root'

# Obey robots.txt rules
ROBOTSTXT_OBEY = False

# Configure maximum concurrent requests performed by Scrapy (default: 16)
#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)
# See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
#DOWNLOAD_DELAY = 3
# The download delay setting will honor only one of:
#CONCURRENT_REQUESTS_PER_DOMAIN = 16
#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)
#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
#TELNETCONSOLE_ENABLED = False

# Override the default request headers:
#DEFAULT_REQUEST_HEADERS = {
#   'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
#   'Accept-Language': 'en',
#}

# Enable or disable spider middlewares
# See https://doc.scrapy.org/en/latest/topics/spider-middleware.html
#SPIDER_MIDDLEWARES = {
#    'ScrapyPro3.middlewares.ScrapyPro3SpiderMiddleware': 543,
#}

# Enable or disable downloader middlewares
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
#DOWNLOADER_MIDDLEWARES = {
#    'ScrapyPro3.middlewares.ScrapyPro3DownloaderMiddleware': 543,
#}

# Enable or disable extensions
# See https://doc.scrapy.org/en/latest/topics/extensions.html
#EXTENSIONS = {
#    'scrapy.extensions.telnet.TelnetConsole': None,
#}

# Configure item pipelines
# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'ScrapyPro3.pipelines.Scrapypro3Pipeline': 200,
}

# Enable and configure the AutoThrottle extension (disabled by default)
# See https://doc.scrapy.org/en/latest/topics/autothrottle.html
#AUTOTHROTTLE_ENABLED = True
# The initial download delay
#AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
#AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
#HTTPCACHE_ENABLED = True
#HTTPCACHE_EXPIRATION_SECS = 0
#HTTPCACHE_DIR = 'httpcache'
#HTTPCACHE_IGNORE_HTTP_CODES = []
#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'
```

(5) start file, which runs the spider

```python
from scrapy import cmdline

cmdline.execute(["scrapy", "crawl", "tencent"])
```

Database creation code:

```sql
create database mu_ke;

CREATE TABLE login_person (
  id int(10) NOT NULL AUTO_INCREMENT,
  name varchar(100) DEFAULT NULL,
  passsword varchar(100) DEFAULT NULL,
  is_active varchar(100) DEFAULT NULL,
  comment varchar(100) DEFAULT NULL,
  time varchar(100) DEFAULT NULL,
  PRIMARY KEY (id)
) ENGINE=InnoDB AUTO_INCREMENT=1181 DEFAULT CHARSET=utf8;

select count(name) from login_person; # the query returns a count of 0
```
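One thing that may be worth checking: the `CREATE TABLE` statement spells the column `passsword`, while the insert in the pipeline writes to `password`. Below is a minimal sketch of comparing the two column lists. It uses the standard library's `sqlite3` with an in-memory database purely as a stand-in for MySQL (an assumption made so that the example runs without a server), so the column types and the error wording differ from what MySQL would produce.

```python
import sqlite3

# In-memory SQLite database as a stand-in for the MySQL table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE login_person ("
    "id INTEGER PRIMARY KEY AUTOINCREMENT, name TEXT, passsword TEXT, "
    "is_active TEXT, comment TEXT, time TEXT)"
)

# Columns named in the pipeline's insert statement.
insert_columns = ["name", "password", "is_active", "comment", "time"]

# Columns that actually exist in the table (field 1 of each PRAGMA row is the name).
table_columns = [row[1] for row in conn.execute("PRAGMA table_info(login_person)")]
missing = [col for col in insert_columns if col not in table_columns]
print("columns missing from the table:", missing)

# The same mismatch makes the insert itself fail.
try:
    conn.execute(
        "INSERT INTO login_person (name, password, is_active, comment, time) "
        "VALUES (?, ?, ?, ?, ?)",
        ("demo", "123456", "1", "hello", "12-25"),
    )
    insert_error = None
except sqlite3.OperationalError as exc:
    insert_error = str(exc)
print("insert error:", insert_error)
```

On the MySQL side, `SHOW COLUMNS FROM login_person;` lists the real column names for the same comparison.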

After running the code, the query shows a count of 0. What is wrong here?

(1) The run completes normally

(2) Runtime environment

PyCharm 2019.3

Python 3.8

MySQL 8.0 (Workbench 8.0)

(3) Data connection: none