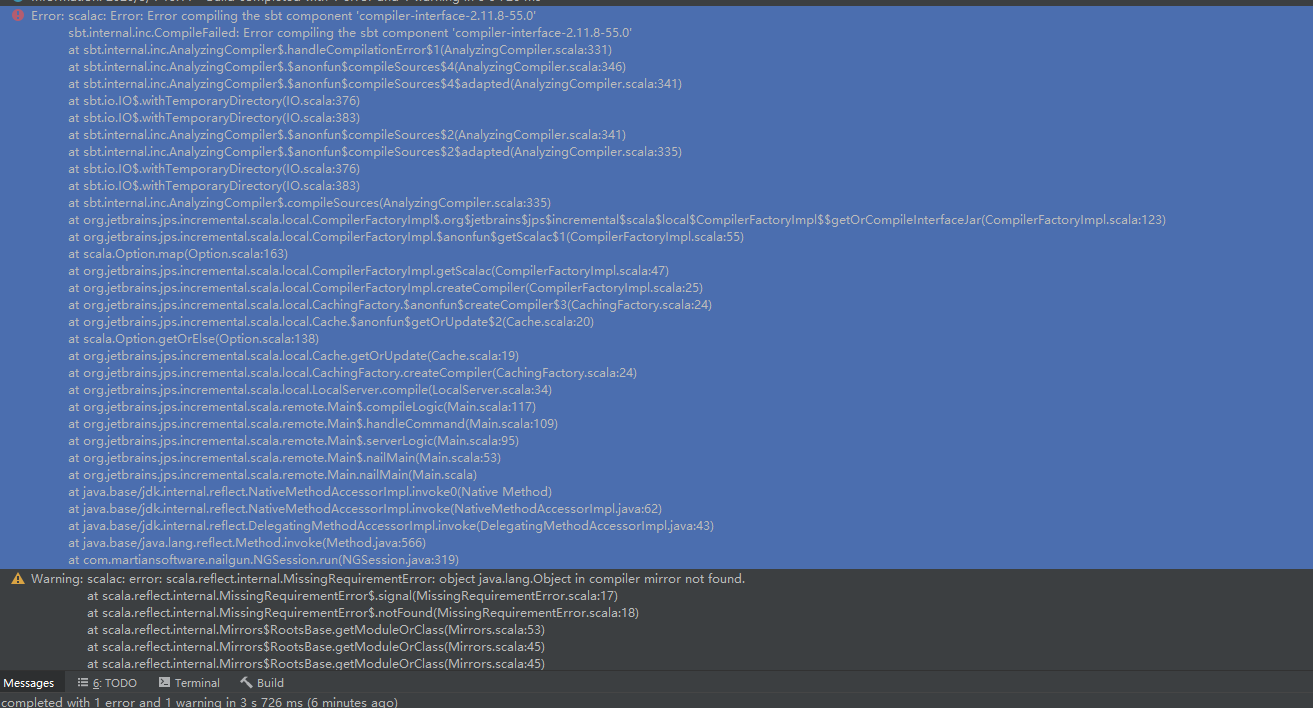

Error after running a Scala .class file in IDEA
