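1. Topic: I recently built an app server with Netty 4.x. After deploying it to the server, many TCP connections ended up in the CLOSE_WAIT state, which caused the server to hang and stop accepting new connections. When I switch back to testing locally, the problem does not occur.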

2. Details:

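1) When the server hangs, `netstat -ano` shows the connections still in the ESTABLISHED state, and a packet capture shows that the client is still sending packets normally, but the server only answers with an ACK (by then the server is already stuck: nothing happens in the console and nothing is logged).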

The lower one is the heartbeat packet.

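As long as the client does not close the connection, it stays ESTABLISHED; once the client disconnects, it turns into CLOSE_WAIT. Only once did the server recover from this "frozen" state and print log output (for example "user disconnected").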

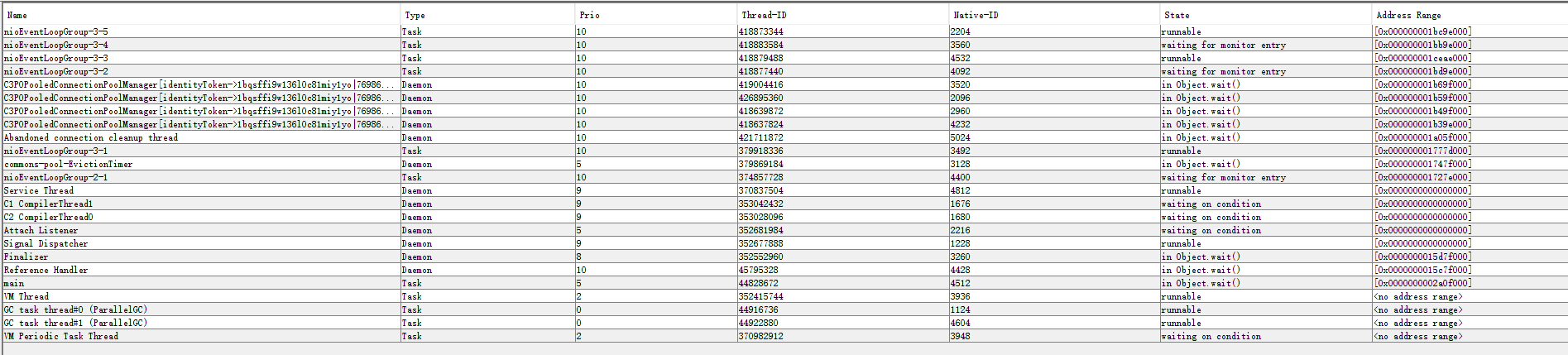
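For reference, CLOSE_WAIT is the state of the endpoint that has received the peer's FIN but whose application has not yet closed its own socket; the state only ends when the application calls close(). The application learns about the FIN when a read returns end-of-stream. In Netty that read, and the automatic close that follows it by default, run on the channel's event-loop thread, so a stuck event loop would leave sockets in exactly this state. The sketch below uses plain JDK blocking sockets on loopback, not Netty, to show the sequence; the class and method names are illustrative.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;

public final class CloseWaitDemo {

    // Returns what the server-side read() sees after the client has closed.
    static int serverReadAfterClientClose() {
        InetAddress loopback = InetAddress.getLoopbackAddress();
        try (ServerSocket listener = new ServerSocket(0, 50, loopback);
             Socket client = new Socket(loopback, listener.getLocalPort());
             Socket accepted = listener.accept()) {
            // The client closes first: its FIN reaches 'accepted', which stays
            // in CLOSE_WAIT until this side closes its socket as well.
            client.close();
            // try-with-resources closes 'accepted' on exit; that close is what
            // ends CLOSE_WAIT. read() blocks until data or end-of-stream, and
            // the FIN shows up as -1.
            return accepted.getInputStream().read();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(serverReadAfterClientClose()); // prints -1
    }
}
```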

At first I thought some step was blocking and causing this, so I used jstack to look at the thread states; see the next item.
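The information jstack prints can also be collected from inside the process with the JDK's ThreadMXBean, which may help when the hang only appears on the production server and nothing is logged. The sketch below reports name, state, awaited lock and stack for every thread whose name starts with a given prefix. Note that a thread stuck in blocking socket I/O (for example waiting for a database or Redis reply) is usually reported as RUNNABLE, so the stack frames matter more than the state. The prefix `nioEventLoopGroup` is an assumption based on the names Netty's default thread factory usually gives event-loop threads (such as `nioEventLoopGroup-3-1`); check the names in a real jstack dump first.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.util.Map;
import java.util.TreeMap;

public final class ThreadStateDump {

    // Maps thread name -> state for every live thread whose name starts with prefix.
    static Map<String, Thread.State> statesByPrefix(String prefix) {
        Map<String, Thread.State> states = new TreeMap<>();
        for (ThreadInfo info : ManagementFactory.getThreadMXBean().dumpAllThreads(false, false)) {
            if (info.getThreadName().startsWith(prefix)) {
                states.put(info.getThreadName(), info.getThreadState());
            }
        }
        return states;
    }

    // Formats name, state, awaited lock and stack of each matching thread,
    // similar to a jstack dump filtered by thread name.
    static String describe(String prefix) {
        StringBuilder sb = new StringBuilder();
        for (ThreadInfo info : ManagementFactory.getThreadMXBean().dumpAllThreads(false, false)) {
            if (!info.getThreadName().startsWith(prefix)) {
                continue;
            }
            sb.append(info.getThreadName()).append(' ').append(info.getThreadState());
            if (info.getLockName() != null) {
                sb.append(" waiting on ").append(info.getLockName());
            }
            sb.append('\n');
            for (StackTraceElement frame : info.getStackTrace()) {
                sb.append("    at ").append(frame).append('\n');
            }
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // Assumed prefix of Netty's default event-loop thread names.
        System.out.println(statesByPrefix("nioEventLoopGroup"));
        System.out.print(describe("nioEventLoopGroup"));
    }
}
```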

2) jstack output:

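3) The official user guide and *Netty in Action* both say that inbound and outbound messages must be released manually with ReferenceCountUtil.release(). However, my decoder and encoder extend ByteToMessageDecoder and MessageToByteEncoder respectively, and according to their source code both already release the ByteBuf they handle, so the problem probably isn't here...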
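It may help to separate which buffers those base classes manage. ByteToMessageDecoder takes care of the cumulated input buffer `in` that it passes to decode(), and MessageToByteEncoder releases the message it encodes, but a ByteBuf that decode() itself obtains is a new reference-counted object owned by the caller. ByteBuf.readBytes(int) is such a call: it returns a newly allocated buffer with a reference count of 1. The sketch below (assuming netty-buffer and netty-common on the classpath; class and method names are illustrative) shows that count, how ReferenceCountUtil.release() brings it to 0, and the copy-into-byte[] alternative that allocates no extra ByteBuf.

```java
import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.util.ReferenceCountUtil;

public final class ReadBytesRefCount {

    // Returns {refCnt of the buffer returned by readBytes(int), refCnt after release}.
    static int[] refCountsAroundRelease() {
        ByteBuf in = Unpooled.buffer();
        in.writeInt(42);
        ByteBuf copy = in.readBytes(4);   // new buffer, refCnt == 1, owned by the caller
        int before = copy.refCnt();
        ReferenceCountUtil.release(copy); // without this the buffer is never freed
        int after = copy.refCnt();
        in.release();
        return new int[] {before, after};
    }

    // Alternative when only the bytes are needed: copy them into a byte[].
    static byte[] readContent(ByteBuf in, int length) {
        byte[] content = new byte[length];
        in.readBytes(content);
        return content;
    }

    public static void main(String[] args) {
        int[] counts = refCountsAroundRelease();
        System.out.println(counts[0] + " -> " + counts[1]); // prints 1 -> 0
    }
}
```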

Here is part of the codec code:

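Encoder:

```java
import io.netty.buffer.ByteBuf;
import io.netty.channel.ChannelHandlerContext;
import io.netty.handler.codec.MessageToByteEncoder;
import java.nio.charset.StandardCharsets;

public class YingHeMessageEncoder extends MessageToByteEncoder<YingHeMessage> {

    @Override
    protected void encode(ChannelHandlerContext ctx, YingHeMessage msg, ByteBuf out) throws Exception {
        checkMsg(msg); // not null
        int type = msg.getProtoId();
        int contentLength = msg.getContentLength();
        String body = msg.getBody();
        // wire format: int type, int contentLength, UTF-8 body bytes
        out.writeInt(type);
        out.writeInt(contentLength);
        out.writeBytes(body.getBytes(StandardCharsets.UTF_8));
    }

    // checkMsg() and other members omitted
}
```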
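One detail worth checking in the encoder: the length field has to count encoded bytes, because the decoder reads exactly that many bytes after the header. String.length() counts UTF-16 chars, so for Chinese text the two differ. The post does not show how YingHeMessage.getContentLength() is computed, so whether this applies here is an open question. The JDK-only sketch below builds the same type + length + body layout with java.nio.ByteBuffer, which, like Netty's ByteBuf.writeInt(), writes ints big-endian by default; the class name is illustrative.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

public final class FrameEncoding {

    // Lays out [int type][int byteLength][UTF-8 body]. ByteBuffer is big-endian
    // by default, the same byte order ByteBuf.writeInt() uses.
    static byte[] encode(int type, String body) {
        byte[] bytes = body.getBytes(StandardCharsets.UTF_8);
        ByteBuffer frame = ByteBuffer.allocate(8 + bytes.length);
        frame.putInt(type).putInt(bytes.length).put(bytes); // length = byte count
        return frame.array();
    }

    public static void main(String[] args) {
        String body = "\u4f60\u597d"; // "你好"
        System.out.println(body.length());                                // 2 (chars)
        System.out.println(body.getBytes(StandardCharsets.UTF_8).length); // 6 (bytes)
        System.out.println(encode(1, body).length);                       // 14 = 8 + 6
    }
}
```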

Decoder:

```java
import io.netty.buffer.ByteBuf;
import io.netty.channel.ChannelHandlerContext;
import io.netty.handler.codec.ByteToMessageDecoder;
import java.nio.charset.StandardCharsets;
import java.util.List;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public class YingHeMessageDecoder extends ByteToMessageDecoder {

    // header: int type + int contentLength
    private static final int HEADER_SIZE = 8;

    private static final int LEAST_SIZE = 4;

    private static final Logger LOG = LoggerFactory.getLogger(YingHeMessageDecoder.class);

    @Override
    protected void decode(ChannelHandlerContext ctx, ByteBuf in, List<Object> out) throws Exception {
        in.markReaderIndex(); // mark the start of the header
        int readable = in.readableBytes();
        LOG.info("check:{}", readable < HEADER_SIZE);
        LOG.info("readable:{}", readable);
        if (readable < HEADER_SIZE) { // too few bytes for a header: roll back and wait for more
            LOG.warn(">>> Readable bytes are fewer than the header length!");
            LOG.info("before reset:{}", in.readerIndex());
            in.resetReaderIndex();
            LOG.info("after reset:{}", in.readerIndex());
            return;
        }
        // read the message type and the content length
        int type = in.readInt();
        int contentLength = in.readInt();
        LOG.info("type:{},contentLength:{}", type, contentLength);
        if (in.readableBytes() < contentLength) {
            LOG.error("Content length error! length=" + contentLength);
            // roll back to the start of the header so the whole frame is re-read later
            in.resetReaderIndex();
            LOG.info("Reset, current readerIndex: " + in.readerIndex());
            return;
        }
        // read the content straight into a byte[]; in.readBytes(int) would return
        // a new ByteBuf that this method would also have to release
        byte[] content = new byte[contentLength];
        in.readBytes(content);
        String body = new String(content, StandardCharsets.UTF_8);
        out.add(new YingHeMessage(type, contentLength, body));
    }
}
```
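The read-header / check-length / roll-back logic of decode() can be tried without Netty using java.nio.ByteBuffer, whose mark() and reset() play the role of markReaderIndex() and resetReaderIndex(). What the sketch shows is where the mark has to sit: at the start of the header. Resetting to a mark taken after the two readInt() calls leaves the 8 header bytes consumed, and the next attempt would read body bytes as a header. The class and method names are hypothetical, and a negative length is treated as a corrupt frame rather than waited on.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

public final class FrameDecoding {

    static final int HEADER_SIZE = 8; // int type + int contentLength

    // Tries to decode one frame. Returns the body, or null if the frame is
    // incomplete; in that case the position is restored to the start of the
    // header so the next attempt re-reads the whole frame.
    static String tryDecode(ByteBuffer buf) {
        if (buf.remaining() < HEADER_SIZE) {
            return null;
        }
        buf.mark();                     // mark the start of the header, not its end
        int type = buf.getInt();        // read but not used in this sketch
        int contentLength = buf.getInt();
        if (contentLength < 0) {
            throw new IllegalStateException("corrupt frame, length=" + contentLength);
        }
        if (buf.remaining() < contentLength) {
            buf.reset();                // back to the header start
            return null;
        }
        byte[] content = new byte[contentLength];
        buf.get(content);
        return new String(content, StandardCharsets.UTF_8);
    }
}
```

With two body bytes of a five-byte frame available, tryDecode() returns null and leaves the position at 0; once the whole frame is present it returns the body and consumes all of it.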

Below is the configuration in the server startup class:

```java
public void run() throws Exception {
    EventLoopGroup boss = new NioEventLoopGroup();
    EventLoopGroup worker = new NioEventLoopGroup(5);
    try {
        ServerBootstrap b = new ServerBootstrap();
        b.group(boss, worker)
         .channel(NioServerSocketChannel.class)
         .option(ChannelOption.SO_BACKLOG, 1024)
         .option(ChannelOption.SO_REUSEADDR, true)
         .childOption(ChannelOption.TCP_NODELAY, true)
         .childOption(ChannelOption.SO_KEEPALIVE, true)
         .handler(new LoggingHandler(LogLevel.DEBUG))
         .childHandler(new ChannelInitializer<SocketChannel>() {
             @Override
             protected void initChannel(SocketChannel ch) throws Exception {
                 ch.pipeline()
                   .addLast(new LengthFieldBasedFrameDecoder(MAX_LENGTH,
                           LENGTH_FIELD_OFFSET,
                           LENGTH_FIELD_LENGTH,
                           LENGTH_ADJUSTMENT,
                           INITIAL_BYTES_TO_STRIP))
                   .addLast(new ReadTimeoutHandler(60))
                   .addLast(new YingHeMessageDecoder())
                   .addLast(new YingHeMessageEncoder())
                   .addLast(new ServerHandlerInitializer())
                   .addLast(new Zenith());
             }
         });

        Properties properties = new Properties();
        // try-with-resources closes the properties stream after loading
        try (InputStream in = YingHeServer.class.getClassLoader().getResourceAsStream("net.properties")) {
            properties.load(in);
        }
        Integer port = Integer.valueOf(properties.getProperty("port"));

        ChannelFuture f = b.bind(port).sync();
        LOG.info("Server started, bound to port: " + port);

        DiscardProcessorUtil.init();
        System.out.println(">>>flush all:" + RedisConnector.getConnector().flushAll());
        LOG.info(">>>redis connect test:ping---received:{}", RedisConnector.getConnector().ping());

        f.channel().closeFuture().sync();
    } catch (IOException e) {
        e.printStackTrace();
    } finally {
        // clean up
        LOG.info("Shutting down gracefully...");
        boss.shutdownGracefully();
        worker.shutdownGracefully();
        ChannelGroups.clear();
    }
}
```
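ServerHandlerInitializer and Zenith are not shown. By default every handler in this pipeline runs on the event-loop thread its channel is registered with, and `new NioEventLoopGroup(5)` gives only 5 such threads, each shared by many connections. If those handlers call MyBatis, Redis or c3p0 synchronously, one slow or never-returning call stops reads (including the read that would notice a client's FIN) for every connection on that thread. A common Netty pattern is to register potentially blocking handlers with a separate EventExecutorGroup through `ChannelPipeline.addLast(EventExecutorGroup, String, ChannelHandler)`. The sketch below assumes Netty 4.1 on the classpath; the handler name `"business"`, the class name and the group size are arbitrary placeholders, not values from the post.

```java
import io.netty.channel.ChannelHandler;
import io.netty.channel.ChannelInboundHandlerAdapter;
import io.netty.channel.ChannelPipeline;
import io.netty.channel.embedded.EmbeddedChannel;
import io.netty.util.concurrent.DefaultEventExecutorGroup;
import io.netty.util.concurrent.EventExecutorGroup;
import java.util.concurrent.TimeUnit;

public final class BlockingOffload {

    // Codecs stay on the channel's I/O event loop; the handler that may block
    // is bound to businessGroup, so a slow call ties up one of that group's
    // threads instead of an I/O thread shared by many connections.
    static void addBlockingHandler(ChannelPipeline pipeline,
                                   EventExecutorGroup businessGroup,
                                   ChannelHandler handler) {
        pipeline.addLast(businessGroup, "business", handler);
    }

    // Returns true when the "business" handler is executed by a thread other
    // than the channel's own event loop.
    static boolean runsOffEventLoop() {
        EventExecutorGroup group = new DefaultEventExecutorGroup(4); // size is arbitrary
        try {
            EmbeddedChannel ch = new EmbeddedChannel();
            addBlockingHandler(ch.pipeline(), group, new ChannelInboundHandlerAdapter());
            boolean off = ch.pipeline().context("business").executor() != ch.eventLoop();
            ch.finish();
            return off;
        } finally {
            group.shutdownGracefully(0, 1, TimeUnit.SECONDS).syncUninterruptibly();
        }
    }

    public static void main(String[] args) {
        System.out.println(runsOffEventLoop());
    }
}
```

In a server like the one above, the group would be created once next to `boss` and `worker`, used inside initChannel() for the handlers that touch the database or Redis, and shut down in the same `finally` block.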

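4) Additional notes:

The server runs Windows Server 2012 R2;

The client is written in C#;

The server uses Netty 4.1.26.Final, MyBatis, Spring, fastjson, Redis (cache), and c3p0 (connection pool);

The problem does not occur in local testing!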

I'm offering 40 C coins as a reward. I'd be grateful for any advice from the experts here; please help me out of this mess!