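My code scrapes the price, name, product ID and other details of allergy medicine products (about 100 of them) on the US Target website. The unusual part is that it keeps entering ZIP codes during the run: each product is scraped 100 times, once for each of 100 different ZIP codes, so the code collects the price, name, product ID, etc. of every product under each ZIP code.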

It then saves the scraped data to a CSV file.
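Because the same file is appended to on every run, it can help to write a header row only when the file is first created. A minimal sketch of that saving step; the helper name `append_rows` and the column names in `HEADER` are assumptions, chosen to match the order of the row the code below appends (name, price, ZIP code, TCIN, UPC, timestamp):

```python
import csv
import os

# Assumed column names; the original CSV is written without a header row.
HEADER = ["name", "price", "zipcode", "tcin", "upc", "timestamp"]

def append_rows(path, rows):
    """Append rows to a CSV file, writing the header only if the file is new or empty."""
    write_header = not os.path.exists(path) or os.path.getsize(path) == 0
    with open(path, "a", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        if write_header:
            writer.writerow(HEADER)
        writer.writerows(rows)
```

Calling `append_rows(path, AArray)` once per hourly run would then produce a single header followed by all accumulated rows.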

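I use Selenium's webdriver to enter the ZIP codes and scrape the data. To speed things up, I use multithreading to open several product pages at once and scrape them in parallel. Eventually I want the code to run on a server every hour, continuously for a year.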
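For the hourly schedule, a fixed `sleep(3600)` after each run means the interval is really "run time + one hour", so start times drift later over a year. A minimal sketch of sleeping until the next full hour instead; `seconds_until_next_hour` is a hypothetical helper name:

```python
from datetime import datetime, timedelta

def seconds_until_next_hour(now):
    """Return the number of seconds from `now` until the next full hour."""
    next_hour = now.replace(minute=0, second=0, microsecond=0) + timedelta(hours=1)
    return (next_hour - now).total_seconds()

# Example: a run that finishes at 10:45:00 sleeps 15 minutes, not a full hour.
print(seconds_until_next_hour(datetime(2024, 1, 1, 10, 45, 0)))  # 900.0
```

In the main loop, `sleep(seconds_until_next_hour(datetime.now()))` would take the place of `sleep(3600)`. If a run overruns an hour, the next one starts at the following hour boundary.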

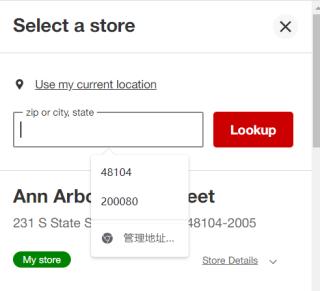

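The problem is that once Target detects I'm a bot, it blocks my IP, so I have to buy and use a third-party proxy. The proxy charges per request, and this code sends so many requests that the cost is too high. I also think that using Selenium to open pages and enter ZIP codes one at a time is too inefficient. Does anyone know a better way to scrape the price of the same product under different ZIP codes?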
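To put the cost problem in numbers using only the figures above (about 100 products, 100 ZIP codes, hourly runs for a year): the count below is ZIP-code lookups, not HTTP requests. Each lookup in a real browser also loads scripts, images and API calls, so the number of proxied requests is likely several times higher.

```python
# Figures from the post: ~100 products, 100 ZIP codes, hourly runs for one year.
products = 100
zip_codes = 100
runs_per_year = 24 * 365  # 8760 hourly runs

lookups_per_run = products * zip_codes  # one store switch + scrape per pair
lookups_per_year = lookups_per_run * runs_per_year

print(lookups_per_run)   # 10000
print(lookups_per_year)  # 87600000
```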

Here is my code:

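```python
import concurrent.futures
import csv
from datetime import datetime
from time import sleep

import pytz
from bs4 import BeautifulSoup
from pandas import read_csv
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from selenium.webdriver.common.keys import Keys
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait

# read ZIP codes as strings so leading zeros (e.g. 02134) are kept
data = read_csv("C:\\Users\\12987\\desktop\\zipcode\\zc.csv", dtype={'Zipcode': str})

# converting column data to list
zipCodeList = data['Zipcode'].tolist()

# urlList: list of product page URLs (defined elsewhere, not shown here)


def ScrapingTarget(url):
    """Open one product page, switch through every ZIP code, and return one row per ZIP code."""
    wait_imp = 10
    rows = []

    CO = webdriver.ChromeOptions()
    CO.add_experimental_option('useAutomationExtension', False)
    CO.add_argument('--ignore-certificate-errors')
    CO.add_argument('--start-maximized')

    # Selenium 4 takes the chromedriver path through a Service object
    service = Service(r'D:\chromedriver\chromedriver_win32new\chromedriver_win32 (2)\chromedriver.exe')
    wd = webdriver.Chrome(service=service, options=CO)
    try:
        wd.get(url)
        wd.implicitly_wait(wait_imp)

        for zipcode in zipCodeList:
            # click "My Store"
            myStore = wd.find_element(by=By.XPATH, value="//*[@id='web-store-id-msg-btn']/div[2]/div")
            myStore.click()
            sleep(0.5)

            # enter the ZIP code
            inputZipCode = wd.find_element(by=By.XPATH, value="//*[@id='zip-or-city-state']")
            inputZipCode.clear()
            inputZipCode.send_keys(zipcode)

            # click "Look up"
            clickLookUp = wd.find_element(by=By.XPATH, value="//*[@id='overlay-1']/div[2]/div[1]/div/div[3]/div[2]/button")
            clickLookUp.click()
            sleep(0.5)

            # choose the store
            store = wd.find_element(by=By.XPATH, value="//*[@id='overlay-1']/div[2]/div[3]/div[2]/div[1]/button")
            store.click()

            # start scraping data
            name = wd.find_element(by=By.XPATH, value="//*[@id='pageBodyContainer']/div[1]/div[1]/h1/span").text
            price = wd.find_element(by=By.XPATH, value="//*[@id='pageBodyContainer']/div[1]/div[2]/div[2]/div/div[1]/div[1]/span").text
            currentZipCode = zipcode

            # note: Europe/London is on BST in summer; pytz.utc gives a fixed GMT/UTC timestamp
            tz = pytz.timezone('Europe/London')
            GMT = datetime.now(tz).strftime("%Y-%m-%d %H:%M:%S")

            # need to click "Show more" to get the TCIN and UPC
            xpath = '//*[@id="tabContent-tab-Details"]/div/button'
            element_present = EC.presence_of_element_located((By.XPATH, xpath))
            WebDriverWait(wd, 5).until(element_present)
            showMore = wd.find_element(by=By.XPATH, value=xpath)
            sleep(2)
            showMore.click()

            soup = BeautifulSoup(wd.page_source, 'html.parser')

            # get the text of all elements under "Specifications"
            div = soup.find("div", {"class": "styles__StyledCol-sc-ct8kx6-0 iKGdHS h-padding-h-tight"})
            items = [d.text for d in div.find_all("div")] if div else []

            # locate the TCIN and UPC entries ("" if not found) instead of writing list literals to the CSV
            tcin = next((v for v in items if v.startswith("TCIN")), "")
            upc = next((v for v in items if v.startswith("UPC")), "")

            # scroll back to the top
            wd.find_element(by=By.TAG_NAME, value='body').send_keys(Keys.CONTROL + Keys.HOME)

            rows.append([name, price, currentZipCode, tcin, upc, GMT])
    finally:
        # close the browser so the hourly runs do not leak Chrome processes
        wd.quit()
    return rows


while True:
    AArray = []
    with concurrent.futures.ThreadPoolExecutor(10) as executor:
        futures = {executor.submit(ScrapingTarget, url): url for url in urlList}
        for future in concurrent.futures.as_completed(futures):
            try:
                AArray.extend(future.result())
            except Exception as e:
                # report the failure instead of losing it silently; other products continue
                print(f"Failed to scrape {futures[future]}: {e}")

    with open(r'C:\Users\12987\PycharmProjects\python\Network\priceingAlgoriCoding\export_Target_dataframe.csv',
              'a', newline="", encoding='utf-8') as f:
        writer = csv.writer(f)
        writer.writerows(AArray)

    sleep(3600)
```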
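Matching specification lines with `startswith` keeps the label inside the value (for example a cell like `TCIN: 12345678`). A minimal sketch of parsing those lines into a dict so only the value goes into the CSV; `parse_specs` is a hypothetical helper, and it assumes each line has the form `Label: value`, which may not match the exact text on Target's page:

```python
def parse_specs(lines):
    """Turn 'Label: value' strings into a dict; lines without ': ' are skipped."""
    specs = {}
    for line in lines:
        label, sep, value = line.partition(": ")
        if sep:
            specs[label.strip()] = value.strip()
    return specs

# Hypothetical sample text standing in for the strings from the Specifications div.
sample = ["TCIN: 12345678", "UPC: 000000000000", "Some line without a label"]
specs = parse_specs(sample)
print(specs.get("TCIN", ""), specs.get("UPC", ""))  # 12345678 000000000000
```

Using `specs.get(...)` with a default keeps the row shape stable when a product page lacks one of the fields.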