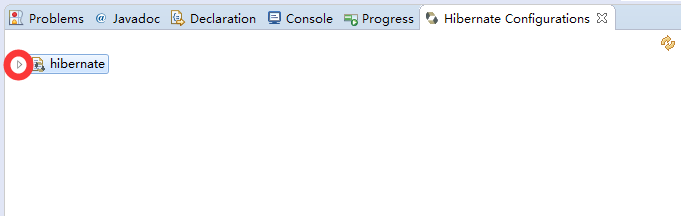

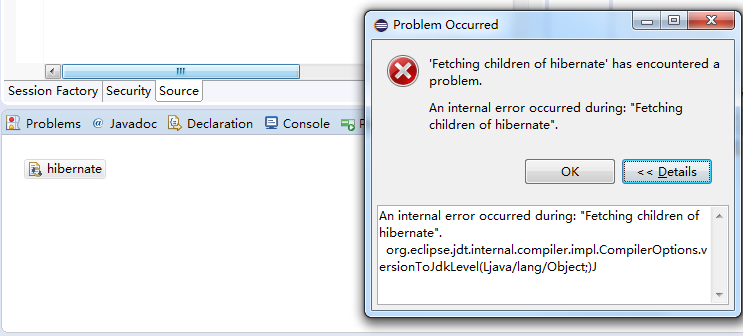

After clicking it, the following appears:

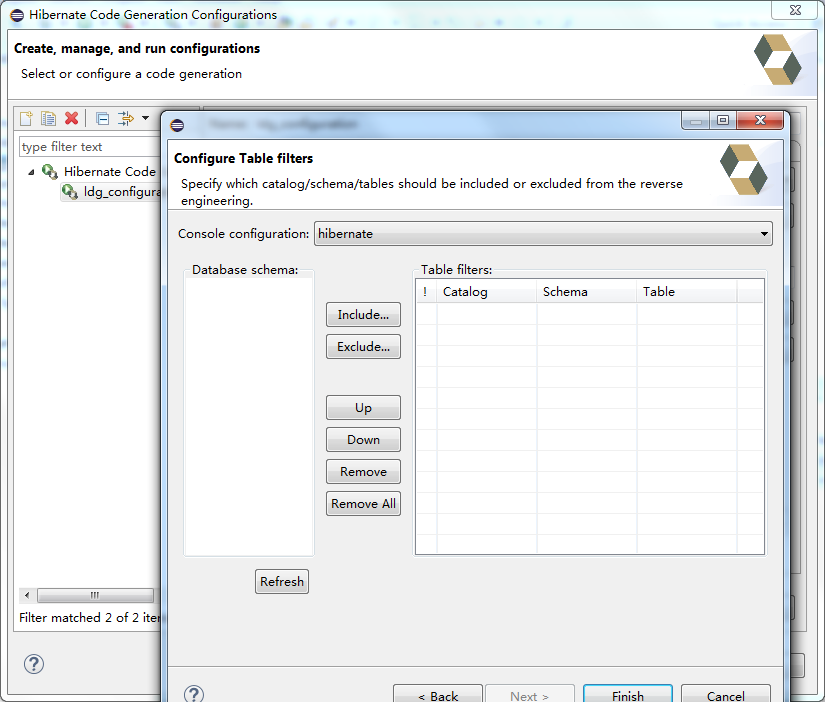

After that, when I try to reverse-generate entity classes from the database, no tables are listed.


z893222309 2017-07-12 08:08

Check which JDK version is configured in your Java Compiler settings, and try switching to a different one.

This answer was selected by the asker as the best answer.
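As a quick first check before changing settings, the hypothetical class below (not from the thread) prints the standard system properties that identify the JVM actually running code from the project. This reports the runtime JRE/JDK only. The compliance level set under the Java Compiler preferences can differ from it, so treat this as a sketch for spotting an obvious mismatch, not a replacement for inspecting those settings.

```java
public class JdkVersionCheck {
    public static void main(String[] args) {
        // Full version string of the JVM executing this class (for example "1.8.0_131")
        System.out.println("java.version = " + System.getProperty("java.version"));
        // Java specification version implemented by this runtime (for example "1.8" or "17")
        System.out.println("java.specification.version = "
                + System.getProperty("java.specification.version"));
        // Installation directory of the runtime in use, useful when several JDKs are installed
        System.out.println("java.home = " + System.getProperty("java.home"));
    }
}
```

Run it as a plain Java application from the same project. If the reported version is not the one you expect, align the project's JRE and compiler compliance level before retrying entity generation.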
