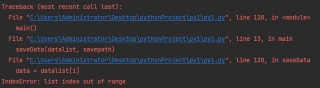

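When batch-scraping web pages with Python, some of the pages have already expired, or their internal format has changed, so the regular expression no longer matches, as shown in the image: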

This raises the following error:

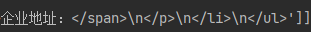
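The error screenshot is not reproduced in text. However, when a pattern finds nothing, `re.findall` returns an empty list, and indexing that list with `[0]` raises `IndexError: list index out of range`, which is presumably the error shown. Below is a minimal reproduction; the HTML fragment is hypothetical and only mimics a page that lacks the 工作地点 row:

```python
import re

# Pattern copied from the question's code (work-location row)
findGzdd = re.compile(r'<p><span class="label">工作地点:</span>(.*?)</p>')

# Hypothetical fragment from a page whose layout changed: no 工作地点 row at all
item = '<p><span class="label">招聘人数:</span>2</p>'

matches = re.findall(findGzdd, item)
print(matches)  # []

caught = None
try:
    Gzdd = matches[0]  # same indexing as re.findall(findGzdd, item)[0]
except IndexError as e:
    caught = str(e)
print(caught)  # list index out of range
```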

The page format changes like this:

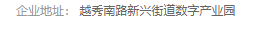

This is a page with content

This is a page without content
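From the screenshots alone it is not clear whether the "no content" page still contains an empty row or drops the row entirely. `re.findall` treats these two cases differently. The following quick check uses hypothetical HTML fragments shaped like the `findZpbm` pattern:

```python
import re

findZpbm = re.compile(r'<p><span class="label">招聘部门:</span>(.*?)</p>')

# Hypothetical fragments: the row exists but is empty, vs. the row is absent
row_empty = '<p><span class="label">招聘部门:</span></p>'
row_missing = '<p><span class="label">工作地点:</span>嘉兴</p>'

empty_result = re.findall(findZpbm, row_empty)
missing_result = re.findall(findZpbm, row_missing)

print(empty_result)    # ['']  -> [0] works and gives an empty string
print(missing_result)  # []    -> [0] raises IndexError
```

Only the missing-row case makes `[0]` fail. This is also why checking `len(Zpbm) == 0` after `Zpbm = re.findall(findZpbm, item)[0]` cannot help: the exception is raised before the check ever runs.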

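The only approach I can think of is to output an empty string when the regex finds no match. I'd like to modify my existing code to do this, but I don't know how. I'd appreciate any help. Here is my code, thank you very much!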

```python
# -*- coding: utf-8 -*-
import re
import urllib.error
import urllib.request

import xlwt
from bs4 import BeautifulSoup


def main():
    baseurl = "http://www.rcwjx.com"
    datalist = getData(baseurl)
    savepath = "嘉兴人才网.xls"
    saveData(datalist, savepath)


# One pattern per field on a job page
findJob = re.compile(r'<span>(.*?)</span>')  # job title
findSalary = re.compile(r'<span class="orange font-size-base pay-base-info">\r\n(.*?)</span>', re.S)  # salary
findCompany = re.compile(r'class=".*">(.*?)</a>')  # company name
findGzdd = re.compile(r'<p><span class="label">工作地点:</span>(.*?)</p>')  # work location
findZprs = re.compile(r'<p><span class="label">招聘人数:</span>(.*?)</p>')  # number of openings
findXbyq = re.compile(r'<p><span class="label">性别要求:</span>(.*?)</p>')  # gender requirement
findZwlx = re.compile(r'<p><span class="label">职位类型:</span>(.*?)</p>')  # job type
findZpbm = re.compile(r'<p><span class="label">招聘部门:</span>(.*?)</p>')  # hiring department
findNlyq = re.compile(r'<p><span class="label">年龄要求:</span>(.*?)</p>')  # age requirement
findGzjy = re.compile(r'<p><span class="label">工作经验:</span>(.*?)</p>')  # work experience
findXlyq = re.compile(r'<p><span class="label">学历要求:</span>(.*?)</p>')  # education requirement
findZsqk = re.compile(r'<p><span class="label">住宿情况:</span>(.*?)</p>')  # accommodation
findZwms = re.compile(r'<div class="ItemContent JobRequire" id="ctl00_ContentPlaceHolder1_requirement">(.*?),谢谢!', re.S)  # job description
findLxr = re.compile(r'人:</span>\r\n (.*?)\r\n ')  # contact person
findQydz = re.compile(r'企业地址:</span>\r\n (.*?)\r\n ')  # company address


def getData(baseurl):
    datalist = []
    name = ["/job/7178980.html"]  # job page paths to scrape
    for i in name:
        url = baseurl + i
        html = askURL(url)  # "" if the page could not be fetched
        soup = BeautifulSoup(html, "html.parser")
        for item in soup.find_all('div', id="ShowJobContent"):
            data = []
            item = str(item)
            # print(item)

            # Each re.findall(...)[0] raises IndexError when the pattern finds nothing
            Job = re.findall(findJob, item)[0]
            data.append(Job)
            Salary = re.findall(findSalary, item)[0]
            data.append(Salary.strip())
            Company = re.findall(findCompany, item)[0]
            data.append(Company)
            Gzdd = re.findall(findGzdd, item)[0]
            data.append(Gzdd)
            Zprs = re.findall(findZprs, item)[0]
            data.append(Zprs)
            Xbyq = re.findall(findXbyq, item)[0]
            data.append(Xbyq)
            Zwlx = re.findall(findZwlx, item)[0]
            data.append(Zwlx)

            # Check the match list before indexing it, so a missing or empty row
            # is written as a placeholder instead of raising IndexError
            Zpbm = re.findall(findZpbm, item)
            if len(Zpbm) == 0 or len(Zpbm[0]) == 0:
                data.append(" ")
            else:
                data.append(Zpbm[0])

            Nlyq = re.findall(findNlyq, item)[0]
            data.append(Nlyq)
            Gzjy = re.findall(findGzjy, item)[0]
            data.append(Gzjy)
            Xlyq = re.findall(findXlyq, item)[0]
            data.append(Xlyq)
            Zsqk = re.findall(findZsqk, item)[0]
            data.append(Zsqk)

            Zwms = re.findall(findZwms, item)[0]
            Zwms = re.sub(r'<br(\s+)?/>(\s+)?', " ", Zwms)  # <br/> tags -> spaces
            Zwms = re.sub('\xa0', " ", Zwms)  # non-breaking spaces -> spaces
            # Re-append the ",谢谢!" that findZwms stops just before
            Zwms = re.sub('联系我时,请说是在嘉兴人才网上看到的', "联系我时,请说是在嘉兴人才网上看到的,谢谢!", Zwms)
            data.append(Zwms)

            Lxr = re.findall(findLxr, item)[0]
            data.append(Lxr)
            Qydz = re.findall(findQydz, item)[0]
            data.append(Qydz)
            print(1, Lxr, Qydz)
            datalist.append(data)
    print(datalist)
    return datalist


def askURL(url):
    # Send a browser-like User-Agent header with the request
    head = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.159 Safari/537.36"
    }
    request = urllib.request.Request(url, headers=head)
    html = ""
    try:
        response = urllib.request.urlopen(request)
        # html = response.read().decode("utf-8")
        html = response.read().decode('GBK')  # decode the page as GBK
        # print(html)
    except urllib.error.URLError as e:
        # Dead or unreachable page: print the error and return ""
        if hasattr(e, "code"):
            print(e.code)
        if hasattr(e, "reason"):
            print(e.reason)
    return html


def saveData(datalist, savepath):
    print("save...")
    book = xlwt.Workbook(encoding="utf-8", style_compression=0)
    sheet = book.add_sheet('嘉兴人才网', cell_overwrite_ok=True)
    # Headers: job, salary, company, location, openings, gender, job type, department,
    # age, experience, education, accommodation, description, contact, address
    col = ("岗位", "薪资", "公司", "工作地点", "招聘人数", "性别要求", "职位类型", "招聘部门",
           "年龄要求", "工作经验", "学历要求", "住宿情况", "职位描述", "联系人", "企业地址")
    for i in range(len(col)):
        sheet.write(0, i, col[i])
    for i in range(len(datalist)):  # one spreadsheet row per scraped job
        print("第%d条" % (i + 1))  # "item N"
        data = datalist[i]
        for j in range(len(col)):
            sheet.write(i + 1, j, data[j])
    book.save(savepath)


if __name__ == "__main__":
    main()
    print("爬取完毕!")  # "Scraping finished!"
```
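One way to get the empty-string behaviour described above is to wrap the repeated `re.findall(...)[0]` pattern in a small helper that returns a default when the match list is empty. This is a sketch under stated assumptions, not a tested fix for this site. `first_match`, `LABEL_FIELDS` and `extract_labels` are hypothetical names that are not part of the original code, and the sketch assumes the label rows keep the `<p><span class="label">LABEL:</span>VALUE</p>` shape that `findGzdd` through `findZsqk` expect.

```python
import re


def first_match(pattern, text, default=""):
    """Return the first re.findall result for pattern in text, or default if none."""
    matches = re.findall(pattern, text)
    return matches[0] if matches else default


# Hypothetical list of the label rows matched by findGzdd ... findZsqk, in column order
LABEL_FIELDS = ["工作地点", "招聘人数", "性别要求", "职位类型", "招聘部门",
                "年龄要求", "工作经验", "学历要求", "住宿情况"]


def extract_labels(item):
    """One value per label; rows missing from the page become empty strings."""
    values = []
    for label in LABEL_FIELDS:
        pattern = re.compile(r'<p><span class="label">' + re.escape(label) + r':</span>(.*?)</p>')
        values.append(first_match(pattern, item))
    return values


# Usage with a hypothetical fragment that only has two of the nine rows
item = ('<p><span class="label">工作地点:</span>嘉兴</p>'
        '<p><span class="label">招聘人数:</span>2</p>')
row = extract_labels(item)
print(row)  # ['嘉兴', '2', '', '', '', '', '', '', '']
```

In the existing loop, you could equally replace each `Gzdd = re.findall(findGzdd, item)[0]` with `Gzdd = first_match(findGzdd, item)`, and do the same for the other fields. The later post-processing still works on a missing field, because `Salary.strip()` and `re.sub(...)` both accept an empty string.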