多相机参数标定。VS2015+opencv2.4.13

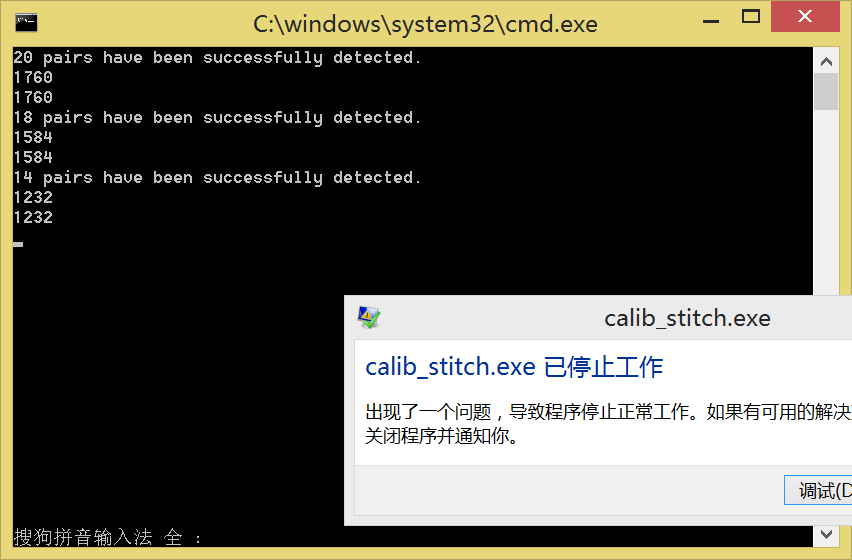

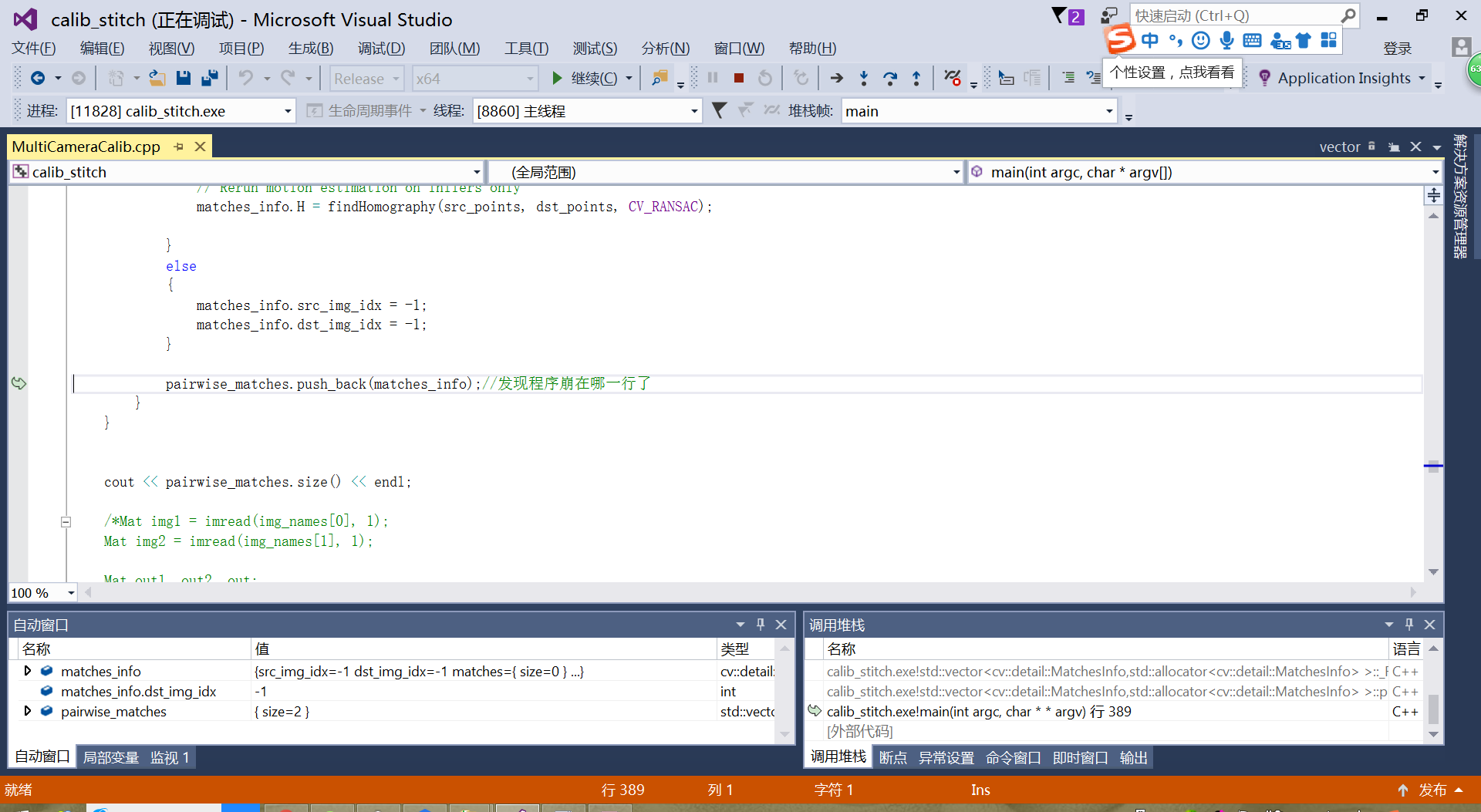

代码运行到匹配关系那一部分就会崩。虽然找到了问题在哪,但不会解决,求大神指教。报错内容为:0x00007FF8DCE3D3D8 (ucrtbase.dll) (calib_stitch.exe 中)处有未经处理的异常: 将一个无效参数传递给了将无效参数视为严重错误的函数。

下面是代码:

#include

#include

#include

#include "opencv2/core/core.hpp"

#include "opencv2/opencv_modules.hpp"

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/stitching/detail/autocalib.hpp"

#include "opencv2/stitching/detail/blenders.hpp"

#include "opencv2/stitching/detail/camera.hpp"

#include "opencv2/stitching/detail/exposure_compensate.hpp"

#include "opencv2/stitching/detail/matchers.hpp"

#include "opencv2/stitching/detail/motion_estimators.hpp"

#include "opencv2/stitching/detail/seam_finders.hpp"

#include "opencv2/stitching/detail/util.hpp"

#include "opencv2/stitching/detail/warpers.hpp"

#include "opencv2/stitching/warpers.hpp"

#include "opencv2/features2d/features2d.hpp"

#include "opencv2/nonfree/nonfree.hpp"

#include

using namespace std;

using namespace cv;

using namespace cv::detail;

// Default command line args

bool preview = false;

bool try_gpu = false;

double work_megapix = -0.6; // 缩放参数

double seam_megapix = 0.1;

double compose_megapix = -1;

float conf_thresh = 1.f;

string features_type = "sift"; //orb surf sift

string ba_cost_func = "reproj"; //reproj ray

string ba_refine_mask = "xxllx";

bool do_wave_correct = true;

WaveCorrectKind wave_correct = detail::WAVE_CORRECT_HORIZ;

bool save_graph = true;

std::string save_graph_to;

string warp_type = "spherical"; //spherical cylindrical plane

int expos_comp_type = ExposureCompensator::GAIN_BLOCKS; //GAIN,OR NO

float match_conf = 0.3f;

string seam_find_type = "gc_color"; //no voronoi gc_color gc_colorgrad dp_color dp_colorgrad

int blend_type = Blender::MULTI_BAND; // Blender::FEATHER Blender::MULTI_BAND

float blend_strength = 5;//0就是关,默认5

string result_name = "result.jpg";

void detection(const vector imagelist, vector>& ransac_image_points_seq)

{

if (imagelist.size() % 2 != 0)

{

cout << "Error: the image list contains odd (non-even) number of elements\n";

return;

}

bool displayCorners = true;//true;

const int maxScale = 2;

const float squareSize = 1.f; // Set this to your actual square size

// ARRAY AND VECTOR STORAGE:

Size boardSize = Size(11, 8);

vector<vector<Point2f>> imagePoints[2];

vector<vector<Point3f> > objectPoints;

Size imageSize;

int i, j, k, nimages = (int)imagelist.size() / 2;

imagePoints[0].resize(nimages);

imagePoints[1].resize(nimages);

vector<string> goodImageList;

for (i = j = 0; i < nimages; i++)

{

for (k = 0; k < 2; k++)

{

const string& filename = imagelist[i * 2 + k];

Mat img = imread(filename, 0);

if (img.empty())

break;

if (imageSize == Size())

imageSize = img.size();

else if (img.size() != imageSize)

{

cout << "The image " << filename << " has the size different from the first image size. Skipping the pair\n";

break;

}

bool found = false;

vector<Point2f>& corners = imagePoints[k][j];

for (int scale = 1; scale <= maxScale; scale++)

{

Mat timg;

if (scale == 1)

timg = img;

else

resize(img, timg, Size(), scale, scale);

found = findChessboardCorners(timg, boardSize, corners,

CV_CALIB_CB_ADAPTIVE_THRESH | CV_CALIB_CB_NORMALIZE_IMAGE);

if (found)

{

if (scale > 1)

{

Mat cornersMat(corners);

cornersMat *= 1. / scale;

}

break;

}

}

if (displayCorners)

{

//cout << filename << endl;

Mat cimg, cimg1;

cvtColor(img, cimg, COLOR_GRAY2BGR);

drawChessboardCorners(cimg, boardSize, corners, found);

double sf = 640. / MAX(img.rows, img.cols);

resize(cimg, cimg1, Size(), sf, sf);

namedWindow("corners", 0);

imshow("corners", cimg1);

char c = (char)waitKey(1);

if (c == 27 || c == 'q' || c == 'Q') //Allow ESC to quit

exit(-1);

}

else

putchar('.');

if (!found)

break;

cornerSubPix(img, corners, Size(11, 11), Size(-1, -1), TermCriteria(CV_TERMCRIT_ITER + CV_TERMCRIT_EPS, 30, 0.01));

/* 亚像素精确化 */

//find4QuadCornerSubpix(img, corners, Size(5, 5)); //对粗提取的角点进行精确化

}

if (k == 2)

{

goodImageList.push_back(imagelist[i * 2]);

goodImageList.push_back(imagelist[i * 2 + 1]);

j++;

}

}

cout << j << " pairs have been successfully detected.\n";

nimages = j;

if (nimages < 2)

{

cout << "Error: too little pairs to run the calibration\n";

return;

}

imagePoints[0].resize(nimages);

imagePoints[1].resize(nimages);

vector<vector<Point2f>> image_points_seq;

for (int i = 0; i < 2; i++)

{

vector<Point2f> buf;

for (int j = 0; j < imagePoints[i].size(); j++)

{

for (int k = 0; k < imagePoints[i][j].size(); k++)

{

buf.push_back(imagePoints[i][j][k]);

}

}

image_points_seq.push_back(buf);

}

//RANSAC

cout << image_points_seq[0].size() << endl;

cout << image_points_seq[1].size() << endl;

vector<uchar> mask;

Mat h = findHomography(image_points_seq[0], image_points_seq[1], mask, CV_FM_RANSAC);

vector<Point2f> point1, point2;

for (int i = 0; i < image_points_seq[0].size(); i++)

{

//if (mask[i] == 1)

{

point1.push_back(image_points_seq[0][i]);

point2.push_back(image_points_seq[1][i]);

}

}

ransac_image_points_seq.push_back(point1);

ransac_image_points_seq.push_back(point2);

//cout << imagePoints[0].size() << endl;

//cout << imagePoints[1].size() << endl;

//return imagePoints;

}

int main(int argc, char* argv[])

{

int64 app_start_time = getTickCount();

string xml_name = "144-146-147-1481.yaml";

vector<vector<string>> img_names;

vector<vector<string>> names;

char file_name[256];

int num_pairs = 3;

int nums_pairs_count[4] = { 23,23,20 };

for (int i =0; i <= num_pairs; i++)

{

vector<string> temp;

for (int j = 1; j <= nums_pairs_count[i]; j++)

{

sprintf(file_name, "1234/%d/1/(%d).jpg", i, j);

temp.push_back(file_name);

sprintf(file_name, "1234/%d/2/(%d).jpg", i, j);

temp.push_back(file_name);

}

names.push_back(temp);

}

//棋盘格检测

vector<vector<Point2f>> double_image_points_seq;

int match_num[4][4] = {0};

int match_start[4][4][2] = {0};

//vector<vector<Point2f>> ransac_image_points_seq;

//detection(names[0], ransac_image_points_seq);

//match_num[0][1] = ransac_image_points_seq[0].size();

//match_num[1][0] = ransac_image_points_seq[0].size();

//match_start[0][1] = 0;

//match_start[1][0] = 0;

//match_num.push_back(ransac_image_points_seq[0].size());

//cout << ransac_image_points_seq[0].size() << endl;

//cout << ransac_image_points_seq[1].size() << endl;

for (int i = 0; i < num_pairs; i++)

{

vector<vector<Point2f>> ransac_image_points_seq;

detection(names[i], ransac_image_points_seq);

if (i != 0)

{

match_num[i][i + 1] = ransac_image_points_seq[0].size();

match_num[i+1][i] = ransac_image_points_seq[0].size();

match_start[i][i + 1][0] = match_num[i - 1][i];

match_start[i][i + 1][1] = 0;

match_start[i+1][i][0] = 0;

match_start[i+1][i][1] = match_num[i - 1][i];

for (int j = 0; j < ransac_image_points_seq[0].size(); j++)

{

double_image_points_seq[double_image_points_seq.size() - 1].push_back(ransac_image_points_seq[0][j]);

}

double_image_points_seq.push_back(ransac_image_points_seq[1]);

}

else

{

double_image_points_seq.push_back(ransac_image_points_seq[0]);

double_image_points_seq.push_back(ransac_image_points_seq[1]);

match_num[0][1] = ransac_image_points_seq[0].size();

match_num[1][0] = ransac_image_points_seq[0].size();

match_start[0][1][0] = 0;

match_start[0][1][1] = 0;

match_start[1][0][0] = 0;

match_start[1][0][1] = 0;

}

}

//特征点

vector<ImageFeatures> features(num_pairs + 1);

for (int i = 0; i <= num_pairs; i++)

{

vector<KeyPoint> keypoints;

for (int j = 0; j < double_image_points_seq[i].size(); j++)

{

KeyPoint point;

point.pt = double_image_points_seq[i][j];

keypoints.push_back(point);

}

features[i].keypoints = keypoints;

features[i].img_size = Size(2560, 1440);

features[i].img_idx = i;

}

//匹配关系

vector<MatchesInfo> pairwise_matches;

for (int i = 0; i <= num_pairs; i++)

{

for (int j = 0; j <= num_pairs; j++)

{

MatchesInfo matches_info;

if(j==i+1 || j==i-1)

{

vector<DMatch> match(match_num[i][j]);

vector<uchar> mask(match_num[i][j]);

for (int n = 0; n < match_num[i][j]; n++)

{

match[n].queryIdx = match_start[i][j][0] + n;

match[n].trainIdx = match_start[i][j][1] + n;

mask[n] = 1;

}

matches_info.src_img_idx = i;

matches_info.dst_img_idx = j;

matches_info.matches = match;

//info.inliers_mask = inliers_mask;

//info.num_inliers = match_num[i][j];

//vector<Point2f> pts_src, pts_dst;

Mat src_points(1, static_cast<int>(matches_info.matches.size()), CV_32FC2);

Mat dst_points(1, static_cast<int>(matches_info.matches.size()), CV_32FC2);

for (int n = 0; n < match_num[i][j]; n++)

{

const DMatch& m = matches_info.matches[n];

Point2f p = features[i].keypoints[m.queryIdx].pt;

p.x -= features[i].img_size.width * 0.5f;

p.y -= features[i].img_size.height * 0.5f;

src_points.at<Point2f>(0, static_cast<int>(n)) = p;

p = features[j].keypoints[m.trainIdx].pt;

p.x -= features[j].img_size.width * 0.5f;

p.y -= features[j].img_size.height * 0.5f;

dst_points.at<Point2f>(0, static_cast<int>(n)) = p;

//pts_src.push_back(features[i].keypoints[match[n].queryIdx].pt);

//pts_dst.push_back(features[j].keypoints[match[n].trainIdx].pt);

}

//vector<uchar> mask;

matches_info.H = findHomography(src_points, dst_points, matches_info.inliers_mask,CV_FM_RANSAC);

//matches_info.H = h.clone();

matches_info.num_inliers = 0;

for (size_t i = 0; i < matches_info.inliers_mask.size(); ++i)

if (matches_info.inliers_mask[i])

matches_info.num_inliers++;

//info.confidence = 2;

matches_info.confidence = matches_info.num_inliers / (8 + 0.3 * matches_info.matches.size());

// Set zero confidence to remove matches between too close images, as they don't provide

// additional information anyway. The threshold was set experimentally.

matches_info.confidence = matches_info.confidence > 3. ? 0. : matches_info.confidence;

//// Construct point-point correspondences for inliers only

src_points.create(1, matches_info.num_inliers, CV_32FC2);

dst_points.create(1, matches_info.num_inliers, CV_32FC2);

int inlier_idx = 0;

for (size_t n = 0; n < matches_info.matches.size(); ++n)

{

if (!matches_info.inliers_mask[n])

continue;

const DMatch& m = matches_info.matches[n];

Point2f p = features[i].keypoints[m.queryIdx].pt;

p.x -= features[i].img_size.width * 0.5f;

p.y -= features[i].img_size.height * 0.5f;

src_points.at<Point2f>(0, inlier_idx) = p;

p = features[j].keypoints[m.trainIdx].pt;

p.x -= features[j].img_size.width * 0.5f;

p.y -= features[j].img_size.height * 0.5f;

dst_points.at<Point2f>(0, inlier_idx) = p;

inlier_idx++;

}

// Rerun motion estimation on inliers only

matches_info.H = findHomography(src_points, dst_points, CV_RANSAC);

}

else

{

matches_info.src_img_idx = -1;

matches_info.dst_img_idx = -1;

}

pairwise_matches.push_back(matches_info);//发现程序崩在哪一行了

}

}

cout << pairwise_matches.size() << endl;

/*Mat img1 = imread(img_names[0], 1);

Mat img2 = imread(img_names[1], 1);

Mat out1, out2, out;

drawKeypoints(img1, features[0].keypoints, out1);

drawKeypoints(img1, features[0].keypoints, out2);

drawMatches(img1, features[0].keypoints, img2, features[1].keypoints, pairwise_matches[0].matches, out);

cv::namedWindow("out1", 0);

cv::imshow("out1", out);

cv::namedWindow("out2", 0);

cv::imshow("out2", out);

cv::namedWindow("out", 0);

cv::imshow("out", out);

cv::waitKey();*/

//for(int i=0; i<nu)

HomographyBasedEstimator estimator;

vector<CameraParams> cameras;

estimator(features, pairwise_matches, cameras);

for (size_t i = 0; i < cameras.size(); ++i)

{

Mat R;

cameras[i].R.convertTo(R, CV_32F);

cameras[i].R = R;

//cout << "Initial intrinsics #" << indices[i] + 1 << ":\n" << cameras[i].K() << endl;

}

Mat K1(Matx33d(

1.2755404529239545e+03, 0., 1.3099971348805052e+03, 0.,

1.2737998060528048e+03, 8.0764915313791903e+02, 0., 0., 1.

));

Mat K2(Matx33d(

1.2832823446505638e+03, 0., 1.2250954954648896e+03, 0.,

1.2831721912770793e+03, 7.1743301498758751e+02, 0., 0., 1.

));

Mat K3(Matx33d(

1.2840711959594287e+03, 0., 1.2473666273838244e+03, 0.,

1.2840499404560594e+03, 7.9051574509733359e+02, 0., 0., 1.));

Mat K4(Matx33d(

1.2865853945042952e+03, 0., 1.1876049192856492e+03, 0.,

1.2869927339670007e+03, 6.2306976561458930e+02, 0., 0., 1.

));

Mat K[4];

K[0] = K1.clone();

K[1] = K2.clone();

K[2] = K3.clone();

K[3] = K4.clone();

for (size_t i = 0; i < cameras.size(); ++i)

{

K[i].convertTo(K[i], CV_32F);

}

for (size_t i = 0; i < cameras.size(); ++i)

{

Mat R;

cameras[i].R.convertTo(R, CV_32F);

cameras[i].R = R;

cameras[i].focal = 0.5*(K[i].at<float>(0, 0)+ K[i].at<float>(1, 1)); // Focal length

cameras[i].ppx = K[i].at<float>(0,2); // Principal point X

cameras[i].ppy = K[i].at<float>(1,2); ; // Principal point Y

cout << cameras[i].K() << endl;

//cout << "Initial intrinsics #" << indices[i] + 1 << ":\n" << cameras[i].K() << endl;

}

Ptr<detail::BundleAdjusterBase> adjuster;

if (ba_cost_func == "reproj") adjuster = new detail::BundleAdjusterReproj();

else if (ba_cost_func == "ray") adjuster = new detail::BundleAdjusterRay();

else

{

cout << "Unknown bundle adjustment cost function: '" << ba_cost_func << "'.\n";

return -1;

}

adjuster->setConfThresh(conf_thresh);

Mat_<uchar> refine_mask = Mat::zeros(3, 3, CV_8U);

if (ba_refine_mask[0] == 'x') refine_mask(0, 0) = 1;

if (ba_refine_mask[1] == 'x') refine_mask(0, 1) = 1;

if (ba_refine_mask[2] == 'x') refine_mask(0, 2) = 1;

if (ba_refine_mask[3] == 'x') refine_mask(1, 1) = 1;

if (ba_refine_mask[4] == 'x') refine_mask(1, 2) = 1;

adjuster->setRefinementMask(refine_mask);

for (int i = 0; i < features.size(); i++)

{

features[i].descriptors = Mat();

}

(*adjuster)(features, pairwise_matches, cameras);

cout << "camera number: " << cameras.size() << endl;

cv::FileStorage fs(xml_name, cv::FileStorage::WRITE);

int num = cameras.size();

fs << "CameraNumber" << num;

//char file_name[256];

for (int i = 0; i<cameras.size(); i++)

{

sprintf(file_name, "Focal_Camera%d", i);

fs << file_name << cameras[i].focal;

sprintf(file_name, "ppx_Camera%d", i);

fs << file_name << cameras[i].ppx;

sprintf(file_name, "ppy_Camera%d", i);

fs << file_name << cameras[i].ppy;

sprintf(file_name, "K_Camera%d", i);

fs << file_name << cameras[i].K();

sprintf(file_name, "R_Camera%d", i);

fs << file_name << cameras[i].R;

}

//fs << "indices" << indices;

fs.release();

return 0;

}