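Beeline cannot connect. This has been bothering me for half a month, so I would appreciate any pointers. My Hadoop deployment is single-node. Hive itself can run queries and create databases.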

Using !connect jdbc:hive2://devcrm:10000 produces a permission error:

beeline> !connect jdbc:hive2://devcrm:10000

Connecting to jdbc:hive2://devcrm:10000

Enter username for jdbc:hive2://devcrm:10000: hadoop

Enter password for jdbc:hive2://devcrm:10000: ******

19/04/23 15:36:53 [main]: WARN jdbc.HiveConnection: Failed to connect to devcrm:10000

Error: Could not open client transport with JDBC Uri: jdbc:hive2://devcrm:10000: Failed to open new session: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): User: root is not allowed to impersonate hadoop (state=08S01,code=0)

Connecting with beeline -u jdbc:hive2//devcrm:10000 -n hadoop does not work either:

[root@devcrm hadoop]# beeline -u jdbc:hive2//devcrm:10000 -n hadoop

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/kafka/hive/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/kafka/hadoop-2.7.6/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

scan complete in 1ms

scan complete in 963ms

No known driver to handle "jdbc:hive2//devcrm:10000"

Beeline version 2.3.0 by Apache Hive
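The "No known driver" message is consistent with the URL as typed in this second attempt: jdbc:hive2//devcrm:10000 has no colon after hive2, so it does not start with the jdbc:hive2:// prefix that the Hive JDBC driver accepts. The following is only a minimal sketch of that prefix check, not Beeline's own code. The function name `looks_like_hive2_url` is made up for illustration.

```python
# Prefix that a HiveServer2 JDBC URL is expected to start with.
HIVE2_PREFIX = "jdbc:hive2://"


def looks_like_hive2_url(url):
    """Return True if the URL starts with the HiveServer2 JDBC prefix.

    Hypothetical helper for illustration; it only checks the prefix and
    does not validate the host, port, or any session parameters.
    """
    return url.startswith(HIVE2_PREFIX)


if __name__ == "__main__":
    # The two URLs used in the post: the first is well-formed,
    # the second is missing the ':' after 'hive2'.
    for url in ("jdbc:hive2://devcrm:10000", "jdbc:hive2//devcrm:10000"):
        verdict = "ok" if looks_like_hive2_url(url) else "missing the 'jdbc:hive2://' prefix"
        print(url, "->", verdict)
```

With the colon restored, the second command would presumably reach HiveServer2 and then hit the same impersonation error as the first attempt, so the two failures are separate issues.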

hive-site.xml file

<configuration>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

<description>username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123</value>

<description>password to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://192.168.12.77:3306/hive?createDatabaseIfNotExist=true</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>hive.server2.thrift.client.user</name>

<value>hadoop</value>

<description>Username to use against thrift client</description>

</property>

<property>

<name>hive.server2.thrift.client.password</name>

<value>hadoop</value>

<description>Password to use against thrift client</description>

</property>

core-site.xml file

<configuration>

<!-- Address of the NameNode -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://192.168.11.207:9000</value>

</property>

<!-- Directory where files generated while running Hadoop are stored -->

<property>

<name>hadoop.tmp.dir</name>

<!--<value>file:/usr/local/kafka/hadoop-2.7.6/tmp</value>-->

<value>file:/home/hadoop/temp</value>

</property>

<!-- Maximum time between checkpoint backups of the log -->

<!-- <name>fs.checkpoint.period</name>

<value>3600</value>

-->

<!-- Configure the Hadoop proxy user -->

<property>

<!-- Lets the Hadoop cluster's proxy user hadoop access the HDFS cluster from any node -->

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<!-- Groups the proxy user belongs to -->

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

</configuration>
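The AuthorizationException quoted earlier arrives as a RemoteException, so it is raised on the NameNode side. One thing that may be worth checking is whether the hadoop.proxyuser.root.* entries are present in the core-site.xml that the running NameNode actually loaded. After editing the file, HDFS normally needs a restart, or a refresh with hdfs dfsadmin -refreshSuperUserGroupsConfiguration, before the new values take effect. The sketch below only lists the proxy-user entries found in a configuration file. The function name `proxyuser_settings` and the embedded sample are made up for illustration, and the sample mirrors the two properties shown above.

```python
import xml.etree.ElementTree as ET


def proxyuser_settings(xml_text, user):
    """Return {name: value} for all hadoop.proxyuser.<user>.* properties.

    Hypothetical helper for illustration; it reads the XML text only and
    knows nothing about what the running NameNode has loaded.
    """
    root = ET.fromstring(xml_text)
    prefix = "hadoop.proxyuser.{}.".format(user)
    found = {}
    for prop in root.iter("property"):
        # XML comments inside <property> are skipped by the default parser.
        name = (prop.findtext("name") or "").strip()
        if name.startswith(prefix):
            found[name] = (prop.findtext("value") or "").strip()
    return found


# Sample mirroring the proxy-user part of the core-site.xml in the post.
SAMPLE = """<configuration>
  <property>
    <!-- hosts from which the proxy user may connect -->
    <name>hadoop.proxyuser.root.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.root.groups</name>
    <value>*</value>
  </property>
</configuration>"""

if __name__ == "__main__":
    for key, value in sorted(proxyuser_settings(SAMPLE, "root").items()):
        print(key, "=", value)
```

To check a real file, the text could be read with open(path).read() from the configuration directory the NameNode process uses, which may differ from the one that was edited.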

hdfs-site.xml file

<configuration>

<!-- Number of replicas HDFS keeps of the data -->

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<!-- Storage location of the NameNode in HDFS -->

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/local/kafka/hadoop-2.7.6/tmp/dfs/name</value>

</property>

<!-- Storage location of the DataNode in HDFS -->

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/local/kafka/hadoop-2.7.6/tmp/dfs/data</value>

</property>

<property>

<name>dfs.secondary.http.address</name>

<value>192.168.11.207:50090</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

<!-- Enable WebHDFS -->

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

</configuration>

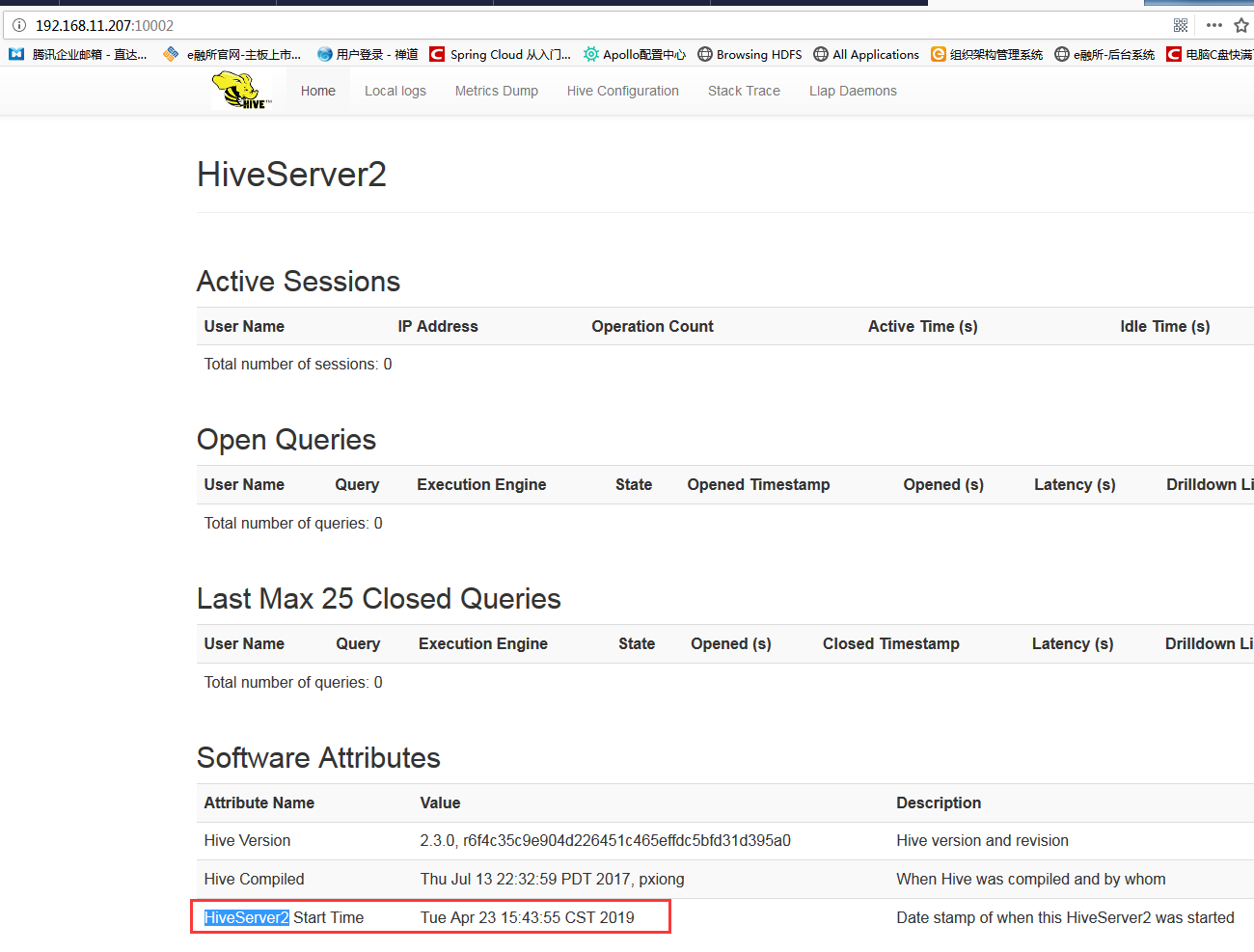

The page at http://192.168.11.207:10002/ shows the HiveServer2 start time.

Hive log

2019-04-24T09:20:11,829 INFO [main] http.HttpServer: Started HttpServer[hiveserver2] on port 10002

2019-04-24T09:20:50,464 INFO [HiveServer2-Handler-Pool: Thread-38] thrift.ThriftCLIService: Client protocol version: HIVE_CLI_SERVICE_PROTOCOL_V10

2019-04-24T09:20:50,494 INFO [HiveServer2-Handler-Pool: Thread-38] conf.HiveConf: Using the default value passed in for log id: b0f59ac1-d17a-404f-8bf5-fbe4693c9964

2019-04-24T09:20:50,494 INFO [b0f59ac1-d17a-404f-8bf5-fbe4693c9964 HiveServer2-Handler-Pool: Thread-38] conf.HiveConf: Using the default value passed in for log id: b0f59ac1-d17a-404f-8bf5-fbe4693c9964

2019-04-24T09:20:50,494 INFO [HiveServer2-Handler-Pool: Thread-38] conf.HiveConf: Using the default value passed in for log id: b0f59ac1-d17a-404f-8bf5-fbe4693c9964

2019-04-24T09:20:50,495 INFO [b0f59ac1-d17a-404f-8bf5-fbe4693c9964 HiveServer2-Handler-Pool: Thread-38] conf.HiveConf: Using the default value passed in for log id: b0f59ac1-d17a-404f-8bf5-fbe4693c9964

2019-04-24T09:20:50,494 WARN [HiveServer2-Handler-Pool: Thread-38] service.CompositeService: Failed to open session

java.lang.RuntimeException: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): User: root is not allowed to impersonate hadoop

at org.apache.hive.service.cli.session.HiveSessionProxy.invoke(HiveSessionProxy.java:89) ~[hive-service-2.3.0.jar:2.3.0]

at org.apache.hive.service.cli.session.HiveSessionProxy.access$000(HiveSessionProxy.java:36) ~[hive-service-2.3.0.jar:2.3.0]

at org.apache.hive.service.cli.session.HiveSessionProxy$1.run(HiveSessionProxy.java:63) ~[hive-service-2.3.0.jar:2.3.0]

at java.security.AccessController.doPrivileged(Native Method) ~[?:1.7.0_80]

at javax.security.auth.Subject.doAs(Subject.java:415) ~[?:1.7.0_80]

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1758) ~[hadoop-common-2.7.6.jar:?]

at org.apache.hive.service.cli.session.HiveSessionProxy.invoke(HiveSessionProxy.java:59) ~[hive-service-2.3.0.jar:2.3.0]

at com.sun.proxy.$Proxy36.open(Unknown Source) ~[?:?]

at org.apache.hive.service.cli.session.SessionManager.createSession(SessionManager.java:410) ~[hive-service-2.3.0.jar:2.3.0]

at org.apache.hive.service.cli.session.SessionManager.openSession(SessionManager.java:362) ~[hive-service-2.3.0.jar:2.3.0]

at org.apache.hive.service.cli.CLIService.openSessionWithImpersonation(CLIService.java:193) ~[hive-service-2.3.0.jar:2.3.0]

at org.apache.hive.service.cli.thrift.ThriftCLIService.getSessionHandle(ThriftCLIService.java:440) ~[hive-service-2.3.0.jar:2.3.0]

at org.apache.hive.service.cli.thrift.ThriftCLIService.OpenSession(ThriftCLIService.java:322) ~[hive-service-2.3.0.jar:2.3.0]

at org.apache.hive.service.rpc.thrift.TCLIService$Processor$OpenSession.getResult(TCLIService.java:1377) ~[hive-exec-2.3.0.jar:2.3.0]

at org.apache.hive.service.rpc.thrift.TCLIService$Processor$OpenSession.getResult(TCLIService.java:1362) ~[hive-exec-2.3.0.jar:2.3.0]

at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39) ~[hive-exec-2.3.0.jar:2.3.0]

at org.apache.thrift.TBaseProcessor.process(TBaseProcessor.java:39) ~[hive-exec-2.3.0.jar:2.3.0]

at org.apache.hive.service.auth.TSetIpAddressProcessor.process(TSetIpAddressProcessor.java:56) ~[hive-service-2.3.0.jar:2.3.0]

at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:286) ~[hive-exec-2.3.0.jar:2.3.0]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145) [?:1.7.0_80]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615) [?:1.7.0_80]

at java.lang.Thread.run(Thread.java:745) [?:1.7.0_80]

Caused by: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): User: root is not allowed to impersonate hadoop

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:606) ~[hive-exec-2.3.0.jar:2.3.0]

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:544) ~[hive-exec-2.3.0.jar:2.3.0]

at org.apache.hive.service.cli.session.HiveSessionImpl.open(HiveSessionImpl.java:164) ~[hive-service-2.3.0.jar:2.3.0]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.7.0_80]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57) ~[?:1.7.0_80]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.7.0_80]

at java.lang.reflect.Method.invoke(Method.java:606) ~[?:1.7.0_80]

at org.apache.hive.service.cli.session.HiveSessionProxy.invoke(HiveSessionProxy.java:78) ~[hive-service-2.3.0.jar:2.3.0]

... 21 more

Caused by: org.apache.hadoop.ipc.RemoteException: User: root is not allowed to impersonate hadoop

at org.apache.hadoop.ipc.Client.call(Client.java:1476) ~[hadoop-common-2.7.6.jar:?]

at org.apache.hadoop.ipc.Client.call(Client.java:1413) ~[hadoop-common-2.7.6.jar:?]

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:229) ~[hadoop-common-2.7.6.jar:?]

at com.sun.proxy.$Proxy29.getFileInfo(Unknown Source) ~[?:?]

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:776) ~[hadoop-hdfs-2.7.6.jar:?]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.7.0_80]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57) ~[?:1.7.0_80]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.7.0_80]

at java.lang.reflect.Method.invoke(Method.java:606) ~[?:1.7.0_80]

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:191) ~[hadoop-common-2.7.6.jar:?]

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102) ~[hadoop-common-2.7.6.jar:?]

at com.sun.proxy.$Proxy30.getFileInfo(Unknown Source) ~[?:?]

at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:2117) ~[hadoop-hdfs-2.7.6.jar:?]

at org.apache.hadoop.hdfs.DistributedFileSystem$22.doCall(DistributedFileSystem.java:1305) ~[hadoop-hdfs-2.7.6.jar:?]

at org.apache.hadoop.hdfs.DistributedFileSystem$22.doCall(DistributedFileSystem.java:1301) ~[hadoop-hdfs-2.7.6.jar:?]

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81) ~[hadoop-common-2.7.6.jar:?]

at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1317) ~[hadoop-hdfs-2.7.6.jar:?]

at org.apache.hadoop.fs.FileSystem.exists(FileSystem.java:1425) ~[hadoop-common-2.7.6.jar:?]

at org.apache.hadoop.hive.ql.session.SessionState.createRootHDFSDir(SessionState.java:704) ~[hive-exec-2.3.0.jar:2.3.0]

at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:650) ~[hive-exec-2.3.0.jar:2.3.0]

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:582) ~[hive-exec-2.3.0.jar:2.3.0]

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:544) ~[hive-exec-2.3.0.jar:2.3.0]

at org.apache.hive.service.cli.session.HiveSessionImpl.open(HiveSessionImpl.java:164) ~[hive-service-2.3.0.jar:2.3.0]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.7.0_80]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57) ~[?:1.7.0_80]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.7.0_80]

at java.lang.reflect.Method.invoke(Method.java:606) ~[?:1.7.0_80]

at org.apache.hive.service.cli.session.HiveSessionProxy.invoke(HiveSessionProxy.java:78) ~[hive-service-2.3.0.jar:2.3.0]

... 21 more

2019-04-24T09:20:50,494 INFO [HiveServer2-Handler-Pool: Thread-38] session.SessionState: Updating thread name to b0f59ac1-d17a-404f-8bf5-fbe4693c9964 HiveServer2-Handler-Pool: Thread-38

2019-04-24T09:20:50,494 INFO [HiveServer2-Handler-Pool: Thread-38] session.SessionState: Resetting thread name to HiveServer2-Handler-Pool: Thread-38

2019-04-24T09:20:50,494 INFO [HiveServer2-Handler-Pool: Thread-38] session.SessionState: Updating thread name to b0f59ac1-d17a-404f-8bf5-fbe4693c9964 HiveServer2-Handler-Pool: Thread-38

2019-04-24T09:20:50,495 INFO [HiveServer2-Handler-Pool: Thread-38] session.SessionState: Resetting thread name to HiveServer2-Handler-Pool: Thread-38

2019-04-24T09:20:50,509 WARN [HiveServer2-Handler-Pool: Thread-38] thrift.ThriftCLIService: Error opening session:

org.apache.hive.service.cli.HiveSQLException: Failed to open new session: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): User: root is not allowed to impersonate hadoop

at org.apache.hive.service.cli.session.SessionManager.createSession(SessionManager.java:419) ~[hive-service-2.3.0.jar:2.3.0]

at org.apache.hive.service.cli.session.SessionManager.openSession(SessionManager.java:362) ~[hive-service-2.3.0.jar:2.3.0]

at org.apache.hive.service.cli.CLIService.openSessionWithImpersonation(CLIService.java:193) ~[hive-service-2.3.0.jar:2.3.0]

at org.apache.hive.service.cli.thrift.ThriftCLIService.getSessionHandle(ThriftCLIService.java:440) ~[hive-service-2.3.0.jar:2.3.0]

at org.apache.hive.service.cli.thrift.ThriftCLIService.OpenSession(ThriftCLIService.java:322) ~[hive-service-2.3.0.jar:2.3.0]

at org.apache.hive.service.rpc.thrift.TCLIService$Processor$OpenSession.getResult(TCLIService.java:1377) ~[hive-exec-2.3.0.jar:2.3.0]

at org.apache.hive.service.rpc.thrift.TCLIService$Processor$OpenSession.getResult(TCLIService.java:1362) ~[hive-exec-2.3.0.jar:2.3.0]

at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39) ~[hive-exec-2.3.0.jar:2.3.0]

at org.apache.thrift.TBaseProcessor.process(TBaseProcessor.java:39) ~[hive-exec-2.3.0.jar:2.3.0]

at org.apache.hive.service.auth.TSetIpAddressProcessor.process(TSetIpAddressProcessor.java:56) ~[hive-service-2.3.0.jar:2.3.0]

at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:286) ~[hive-exec-2.3.0.jar:2.3.0]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145) [?:1.7.0_80]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615) [?:1.7.0_80]

at java.lang.Thread.run(Thread.java:745) [?:1.7.0_80]

Caused by: java.lang.RuntimeException: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): User: root is not allowed to impersonate hadoop

at org.apache.hive.service.cli.session.HiveSessionProxy.invoke(HiveSessionProxy.java:89) ~[hive-service-2.3.0.jar:2.3.0]

at org.apache.hive.service.cli.session.HiveSessionProxy.access$000(HiveSessionProxy.java:36) ~[hive-service-2.3.0.jar:2.3.0]

at org.apache.hive.service.cli.session.HiveSessionProxy$1.run(HiveSessionProxy.java:63) ~[hive-service-2.3.0.jar:2.3.0]

at java.security.AccessController.doPrivileged(Native Method) ~[?:1.7.0_80]

at javax.security.auth.Subject.doAs(Subject.java:415) ~[?:1.7.0_80]

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1758) ~[hadoop-common-2.7.6.jar:?]

at org.apache.hive.service.cli.session.HiveSessionProxy.invoke(HiveSessionProxy.java:59) ~[hive-service-2.3.0.jar:2.3.0]

at com.sun.proxy.$Proxy36.open(Unknown Source) ~[?:?]

at org.apache.hive.service.cli.session.SessionManager.createSession(SessionManager.java:410) ~[hive-service-2.3.0.jar:2.3.0]

... 13 more

Caused by: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): User: root is not allowed to impersonate hadoop

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:606) ~[hive-exec-2.3.0.jar:2.3.0]

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:544) ~[hive-exec-2.3.0.jar:2.3.0]

at org.apache.hive.service.cli.session.HiveSessionImpl.open(HiveSessionImpl.java:164) ~[hive-service-2.3.0.jar:2.3.0]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.7.0_80]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57) ~[?:1.7.0_80]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.7.0_80]

at java.lang.reflect.Method.invoke(Method.java:606) ~[?:1.7.0_80]

at org.apache.hive.service.cli.session.HiveSessionProxy.invoke(HiveSessionProxy.java:78) ~[hive-service-2.3.0.jar:2.3.0]

at org.apache.hive.service.cli.session.HiveSessionProxy.access$000(HiveSessionProxy.java:36) ~[hive-service-2.3.0.jar:2.3.0]

at org.apache.hive.service.cli.session.HiveSessionProxy$1.run(HiveSessionProxy.java:63) ~[hive-service-2.3.0.jar:2.3.0]

at java.security.AccessController.doPrivileged(Native Method) ~[?:1.7.0_80]

at javax.security.auth.Subject.doAs(Subject.java:415) ~[?:1.7.0_80]

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1758) ~[hadoop-common-2.7.6.jar:?]

at org.apache.hive.service.cli.session.HiveSessionProxy.invoke(HiveSessionProxy.java:59) ~[hive-service-2.3.0.jar:2.3.0]

at com.sun.proxy.$Proxy36.open(Unknown Source) ~[?:?]

at org.apache.hive.service.cli.session.SessionManager.createSession(SessionManager.java:410) ~[hive-service-2.3.0.jar:2.3.0]

... 13 more

Caused by: org.apache.hadoop.ipc.RemoteException: User: root is not allowed to impersonate hadoop

at org.apache.hadoop.ipc.Client.call(Client.java:1476) ~[hadoop-common-2.7.6.jar:?]

at org.apache.hadoop.ipc.Client.call(Client.java:1413) ~[hadoop-common-2.7.6.jar:?]

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:229) ~[hadoop-common-2.7.6.jar:?]

at com.sun.proxy.$Proxy29.getFileInfo(Unknown Source) ~[?:?]

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:776) ~[hadoop-hdfs-2.7.6.jar:?]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.7.0_80]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57) ~[?:1.7.0_80]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.7.0_80]

at java.lang.reflect.Method.invoke(Method.java:606) ~[?:1.7.0_80]

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:191) ~[hadoop-common-2.7.6.jar:?]

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102) ~[hadoop-common-2.7.6.jar:?]

at com.sun.proxy.$Proxy30.getFileInfo(Unknown Source) ~[?:?]

at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:2117) ~[hadoop-hdfs-2.7.6.jar:?]

at org.apache.hadoop.hdfs.DistributedFileSystem$22.doCall(DistributedFileSystem.java:1305) ~[hadoop-hdfs-2.7.6.jar:?]

at org.apache.hadoop.hdfs.DistributedFileSystem$22.doCall(DistributedFileSystem.java:1301) ~[hadoop-hdfs-2.7.6.jar:?]

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81) ~[hadoop-common-2.7.6.jar:?]

at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1317) ~[hadoop-hdfs-2.7.6.jar:?]

at org.apache.hadoop.fs.FileSystem.exists(FileSystem.java:1425) ~[hadoop-common-2.7.6.jar:?]

at org.apache.hadoop.hive.ql.session.SessionState.createRootHDFSDir(SessionState.java:704) ~[hive-exec-2.3.0.jar:2.3.0]

at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:650) ~[hive-exec-2.3.0.jar:2.3.0]

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:582) ~[hive-exec-2.3.0.jar:2.3.0]

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:544) ~[hive-exec-2.3.0.jar:2.3.0]

at org.apache.hive.service.cli.session.HiveSessionImpl.open(HiveSessionImpl.java:164) ~[hive-service-2.3.0.jar:2.3.0]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.7.0_80]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57) ~[?:1.7.0_80]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.7.0_80]

at java.lang.reflect.Method.invoke(Method.java:606) ~[?:1.7.0_80]

at org.apache.hive.service.cli.session.HiveSessionProxy.invoke(HiveSessionProxy.java:78) ~[hive-service-2.3.0.jar:2.3.0]

at org.apache.hive.service.cli.session.HiveSessionProxy.access$000(HiveSessionProxy.java:36) ~[hive-service-2.3.0.jar:2.3.0]

at org.apache.hive.service.cli.session.HiveSessionProxy$1.run(HiveSessionProxy.java:63) ~[hive-service-2.3.0.jar:2.3.0]

at java.security.AccessController.doPrivileged(Native Method) ~[?:1.7.0_80]

at javax.security.auth.Subject.doAs(Subject.java:415) ~[?:1.7.0_80]

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1758) ~[hadoop-common-2.7.6.jar:?]

at org.apache.hive.service.cli.session.HiveSessionProxy.invoke(HiveSessionProxy.java:59) ~[hive-service-2.3.0.jar:2.3.0]

at com.sun.proxy.$Proxy36.open(Unknown Source) ~[?:?]

at org.apache.hive.service.cli.session.SessionManager.createSession(SessionManager.java:410) ~[hive-service-2.3.0.jar:2.3.0]

... 13 more