Problem description and background

Uploading a file to HDFS fails with an error. An empty file uploads successfully, but a file with any content fails.

Output and error message

org.apache.hadoop.hdfs.CannotObtainBlockLengthException: Cannot obtain block length for LocatedBlock{BP-293563276-192.168.0.11-1634095772355:blk_1073784416_43592; getBlockSize()=0; corrupt=false; offset=0; locs=[DatanodeInfoWithStorage[192.168.0.11:9866,DS-0f59c3c4-7aae-4baa-b7df-786930725c03,DISK]]}

at org.apache.hadoop.hdfs.DFSInputStream.readBlockLength(DFSInputStream.java:363) ~[hadoop-hdfs-client-3.2.1.jar!/:?]

at org.apache.hadoop.hdfs.DFSInputStream.fetchLocatedBlocksAndGetLastBlockLength(DFSInputStream.java:270) ~[hadoop-hdfs-client-3.2.1.jar!/:?]

at org.apache.hadoop.hdfs.DFSInputStream.openInfo(DFSInputStream.java:201) ~[hadoop-hdfs-client-3.2.1.jar!/:?]

at org.apache.hadoop.hdfs.DFSInputStream.<init>(DFSInputStream.java:185) ~[hadoop-hdfs-client-3.2.1.jar!/:?]

at org.apache.hadoop.hdfs.DFSClient.openInternal(DFSClient.java:1048) ~[hadoop-hdfs-client-3.2.1.jar!/:?]

at org.apache.hadoop.hdfs.DFSClient.open(DFSClient.java:1011) ~[hadoop-hdfs-client-3.2.1.jar!/:?]

at org.apache.hadoop.hdfs.DistributedFileSystem$4.doCall(DistributedFileSystem.java:319) ~[hadoop-hdfs-client-3.2.1.jar!/:?]

at org.apache.hadoop.hdfs.DistributedFileSystem$4.doCall(DistributedFileSystem.java:315) ~[hadoop-hdfs-client-3.2.1.jar!/:?]

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81) ~[hadoop-common-3.2.1.jar!/:?]

at org.apache.hadoop.hdfs.DistributedFileSystem.open(DistributedFileSystem.java:327) ~[hadoop-hdfs-client-3.2.1.jar!/:?]

at org.apache.hadoop.fs.FileSystem.open(FileSystem.java:899) ~[hadoop-common-3.2.1.jar!/:?]

at com.jf.common.oss.service.impl.HdfsOSSImpl.getObject(HdfsOSSImpl.java:68) [jfcommon-oss-2.1.0.jar!/:?]

at com.jf.common.oss.JfOSSClient.getObject(JfOSSClient.java:90) [jfcommon-oss-2.1.0.jar!/:?]

at com.jfh.oss.OSSClientProxy.getOSSObject(OSSClientProxy.java:98) [classes!/:?]

at com.jfh.util.MergePartHelper.mergePartFile(MergePartHelper.java:78) [classes!/:?]

at com.jfh.helper.ViewFileHelper.mergePartFile(ViewFileHelper.java:125) [classes!/:?]

at com.jfh.controller.JFZGFileController.mergePartFile(JFZGFileController.java:99) [classes!/:?]

at com.jfh.controller.JFZGFileController$$FastClassBySpringCGLIB$$2bf96aa4.invoke(<generated>) [classes!/:?]

at org.springframework.cglib.proxy.MethodProxy.invoke(MethodProxy.java:218) [spring-core-5.1.15.RELEASE.jar!/:5.1.15.RELEASE]

at org.springframework.aop.framework.CglibAopProxy$CglibMethodInvocation.invokeJoinpoint(CglibAopProxy.java:752) [spring-aop-5.1.15.RELEASE.jar!/:5.1.15.RELEASE]

at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:163) [spring-aop-5.1.15.RELEASE.jar!/:5.1.15.RELEASE]
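For reading the exception message above: the text inside `LocatedBlock{...}` carries the block pool ID, the block ID, the generation stamp and the block length that the NameNode has on record. The trace shows the exception being thrown from `FileSystem.open`, that is, while the file is opened for reading, and `getBlockSize()=0` on the last block usually suggests that the block was never finalized (for example, because the writer's output stream was not closed). The following is only a minimal sketch for pulling those fields out of the message so they can be searched for in the NameNode and DataNode logs. The class name `LocatedBlockMessage` and the regular expression are my own assumptions, not part of Hadoop.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper (not a Hadoop class): extracts block details
// from the text of a CannotObtainBlockLengthException message.
public class LocatedBlockMessage {

    // Matches "<block pool>:blk_<block id>_<generation stamp>; getBlockSize()=<size>"
    private static final Pattern BLOCK = Pattern.compile(
            "(BP-[\\w.-]+):blk_(\\d+)_(\\d+); getBlockSize\\(\\)=(\\d+)");

    /** Returns {blockPool, blockId, generationStamp, blockSize}, or null if nothing matches. */
    public static String[] parse(String message) {
        Matcher m = BLOCK.matcher(message);
        if (!m.find()) {
            return null;
        }
        return new String[] {m.group(1), m.group(2), m.group(3), m.group(4)};
    }

    public static void main(String[] args) {
        // Message copied from the error output in this post.
        String msg = "Cannot obtain block length for LocatedBlock{"
                + "BP-293563276-192.168.0.11-1634095772355:blk_1073784416_43592; "
                + "getBlockSize()=0; corrupt=false; offset=0; "
                + "locs=[DatanodeInfoWithStorage[192.168.0.11:9866,"
                + "DS-0f59c3c4-7aae-4baa-b7df-786930725c03,DISK]]}";
        String[] f = parse(msg);
        if (f == null) {
            System.out.println("no block information found");
            return;
        }
        System.out.println("block pool       = " + f[0]);
        System.out.println("block id         = blk_" + f[1]);
        System.out.println("generation stamp = " + f[2]);
        System.out.println("recorded length  = " + f[3]);
    }
}
```

With the block ID in hand, the DataNode log on 192.168.0.11 can be searched for the same `blk_` identifier to see whether the replica was ever finalized.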

My approach and what I have tried

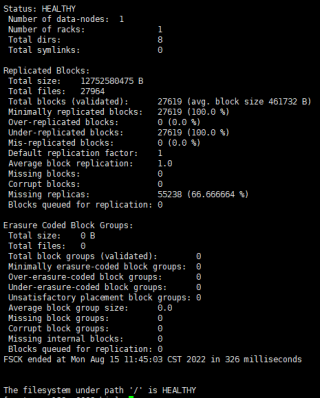

I ran the hdfs fsck -openforwrite command, but the output it returned contains no files marked as openforwrite.
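One thing that might be worth trying is to run fsck against the exact file that fails, and then ask the NameNode to recover the lease on that file with `hdfs debug recoverLease`. The sketch below only assembles those two command lines and runs them through `ProcessBuilder`. It assumes the `hdfs` CLI is on the PATH of the machine it runs on; the class name `HdfsLeaseCheck` and the retry count of 3 are illustrative choices of mine, and whether lease recovery actually resolves this particular case is an assumption, not something confirmed by the output above.

```java
import java.io.IOException;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: wraps two hdfs CLI calls for a single HDFS path.
public class HdfsLeaseCheck {

    /** fsck for one path, also reporting files that are still open for write. */
    public static List<String> fsckCommand(String path) {
        return Arrays.asList("hdfs", "fsck", path,
                "-files", "-blocks", "-locations", "-openforwrite");
    }

    /** Asks the NameNode to recover the lease on the path so the file can be closed. */
    public static List<String> recoverLeaseCommand(String path, int retries) {
        return Arrays.asList("hdfs", "debug", "recoverLease",
                "-path", path, "-retries", String.valueOf(retries));
    }

    /** Runs a command with inherited stdout/stderr and returns its exit code. */
    public static int run(List<String> command) throws IOException, InterruptedException {
        return new ProcessBuilder(command).inheritIO().start().waitFor();
    }

    public static void main(String[] args) throws Exception {
        if (args.length != 1) {
            System.err.println("usage: HdfsLeaseCheck <hdfs-path>");
            return;
        }
        run(fsckCommand(args[0]));            // inspect the file's blocks first
        run(recoverLeaseCommand(args[0], 3)); // then attempt lease recovery
    }
}
```

If the file can be read after lease recovery, the more durable fix is probably on the writing side: making sure the upload code always closes its output stream, for example with try-with-resources.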