How can I eliminate these warnings?

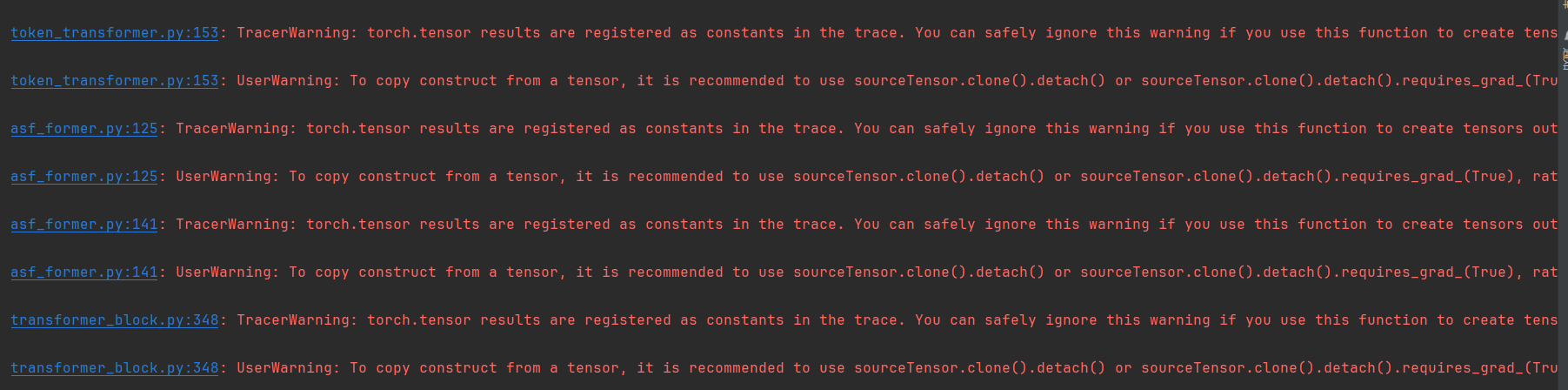

TracerWarning: torch.tensor results are registered as constants in the trace. You can safely ignore this warning if you use this function to create tensors out of constant variables that would be the same every time you call this function. In any other case, this might cause the trace to be incorrect.

sqrt_HW = torch.sqrt(torch.tensor(

UserWarning: To copy construct from a tensor, it is recommended to use sourceTensor.clone().detach() or sourceTensor.clone().detach().requires_grad_(True), rather than torch.tensor(sourceTensor).

sqrt_HW = torch.sqrt(torch.tensor(

The source code is as follows:

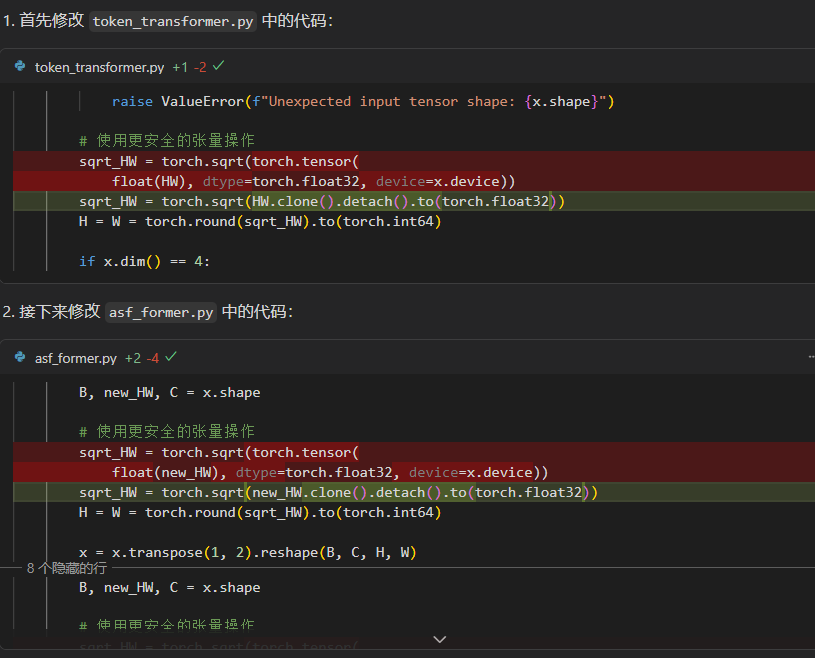

token_transformer.py

    def forward(self, x):
        if not self.expand_disabled:
            x_emb = self.fc1(x)
        else:
            x_emb = x
        x_attn = x_emb[:, :, 0:self.attn_dim]
        x_conv = x_emb[:, :, self.attn_dim:]
        B, HW, C = x_conv.shape
        # modified tensor operation
        HW = x_conv.size(1)  # get HW as a tensor value
        # warning location
        sqrt_HW = torch.sqrt(torch.tensor(
            HW, dtype=torch.float32, device=x.device))
        H = W = torch.round(sqrt_HW).to(torch.int64)
        x_conv = x_conv.transpose(1, 2).reshape(B, C, H, W)  # use the tensor H/W
        x_attn = self.attn(self.norm1(x_attn))
        # x_attn = self.attn(self.norm1(x_attn.transpose(1,2)).transpose(1,2))
        x_conv = self.conv_branch(x_conv)
        B, C, H, W = x_conv.shape
        x_conv = x_conv.reshape(B, C, H*W).transpose(1, 2)
        # x = self.split_ratio * x_attn + (1 - self.split_ratio) * x_conv
        x, w_attn, w_conv = self.fuse(x_attn, x_conv)
        x = x + self.drop_path(self.mlp(self.norm2(x)))
        return x, w_attn, w_conv
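
One possible way to avoid the `torch.tensor(...)` call at the warning location (a sketch, not tested against this model): if `HW` is available as a plain Python `int`, the side length can be computed with integer arithmetic alone, so no tensor is created for the tracer to register as a constant. The helper name `square_side` is hypothetical. One caveat is an assumption based on the warnings above: while tracing, the size appears to arrive as a tensor rather than an `int`, and converting it with `int(...)` may raise a different tracer warning, so this only helps where a real `int` is on hand.

```python
import math


def square_side(hw: int) -> int:
    """Return the side length of a square grid holding exactly `hw` tokens."""
    side = math.isqrt(hw)  # exact integer square root, no float rounding
    if side * side != hw:
        raise ValueError(f"{hw} tokens do not form a square grid")
    return side


# Example: 196 tokens correspond to a 14 x 14 feature map.
H = W = square_side(196)
print(H, W)
```

Unlike `torch.round(torch.sqrt(...))`, this raises an error when the token count is not a perfect square instead of silently rounding.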

asf_former.py

    def forward(self, x):
        w_attn_depth = []
        w_conv_depth = []
        # step0: soft split
        x = self.soft_split0(x).transpose(1, 2)
        # iteration1: re-structurization/reconstruction
        x, w_attn, w_conv = self.attention1(x)
        w_attn_depth.append(w_attn.mean())
        w_conv_depth.append(w_conv.mean())
        # handle input tensors of different shapes
        B, new_HW, C = x.shape
        # warning location
        sqrt_HW = torch.sqrt(torch.tensor(
            new_HW, dtype=torch.float32, device=x.device))
        H = W = torch.round(sqrt_HW).to(torch.int64)
        x = x.transpose(1, 2).reshape(B, C, H, W)
        # iteration1: soft split
        x = self.soft_split1(x).transpose(1, 2)
        # iteration2: re-structurization/reconstruction
        x, w_attn, w_conv = self.attention2(x)
        w_attn_depth.append(w_attn.mean())
        w_conv_depth.append(w_conv.mean())
        B, new_HW, C = x.shape
        # warning location
        sqrt_HW = torch.sqrt(torch.tensor(
            new_HW, dtype=torch.float32, device=x.device))
        H = W = torch.round(sqrt_HW).to(torch.int64)
        x = x.transpose(1, 2).reshape(B, C, H, W)
        # iteration2: soft split
        x = self.soft_split2(x).transpose(1, 2)
        # final tokens
        x = self.project(x)
        return x, w_attn_depth, w_conv_depth
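
Another direction would be to work out each grid size ahead of time from the input resolution, instead of deriving it from `x.shape` inside `forward`. The sketch below assumes the `soft_split` layers behave like `nn.Unfold` and uses T2T-ViT-style kernel/stride/padding values with a 224 x 224 input; none of this is confirmed by the code above, and `unfold_side` is a hypothetical helper. The formula is the output-length formula from the `nn.Unfold` documentation with dilation 1.

```python
def unfold_side(size: int, kernel: int, stride: int, padding: int) -> int:
    """Blocks per side produced by an Unfold-style sliding window (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


# Assumed T2T-ViT-style settings as (kernel, stride, padding); adjust to the real model.
SPLITS = [(7, 4, 2), (3, 2, 1), (3, 2, 1)]

side = 224  # assumed input resolution
sides = []
for kernel, stride, padding in SPLITS:
    side = unfold_side(side, kernel, stride, padding)
    sides.append(side)

print(sides)  # [56, 28, 14]
```

Plain ints like these could be stored in `__init__` and passed straight to `reshape(B, C, H, W)`. The trade-off is that the model is then tied to one input resolution.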

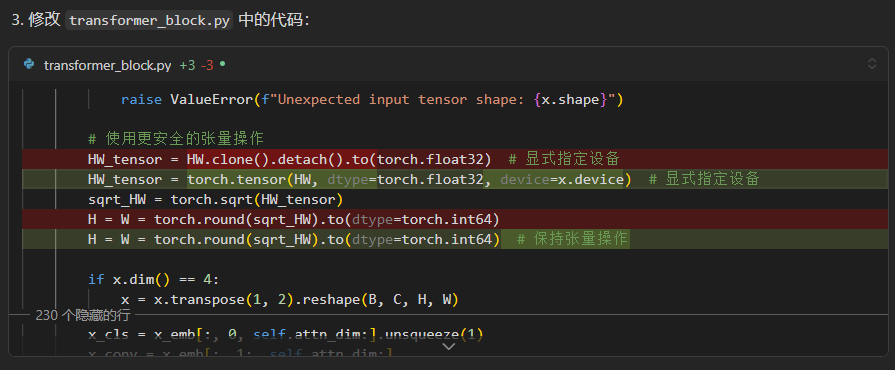

transformer_block.py

    def forward(self, x):
        if not self.expand_disabled:
            x_emb = self.fc1(x)
        else:
            x_emb = x
        x_attn = x_emb[:, :, 0:self.attn_dim]
        x_cls = x_emb[:, 0, self.attn_dim:].unsqueeze(1)
        x_conv = x_emb[:, 1:, self.attn_dim:]
        B, HW, C = x_conv.shape
        # warning location
        HW_tensor = torch.tensor(
            HW, dtype=torch.float32, device=x_conv.device)  # explicitly specify the device
        sqrt_HW = torch.sqrt(HW_tensor)
        H = W = torch.round(sqrt_HW).to(dtype=torch.int64)  # keep it as a tensor operation
        x_conv = x_conv.transpose(1, 2).reshape(
            B, C, H, W)
        x_attn = self.attn(self.norm1(x_attn))
        # x_attn = self.attn(self.norm1(x_attn.transpose(1,2)).transpose(1,2))
        x_conv = self.conv_branch(x_conv)
        B, C, H, W = x_conv.shape
        x_conv = x_conv.reshape(B, C, H*W).transpose(1, 2)
        x_conv = torch.cat((x_cls, x_conv), dim=1)
        # x = x + self.drop_path(self.fc2(self.split_ratio * x_attn + (1 - self.split_ratio) * x_conv))
        x_fuse, w_attn, w_conv = self.fuse(x_attn, x_conv)
        x = x + self.drop_path(self.fc2(x_fuse))
        x = x + self.drop_path(self.mlp(self.norm2(x)))
        return x, w_attn, w_conv

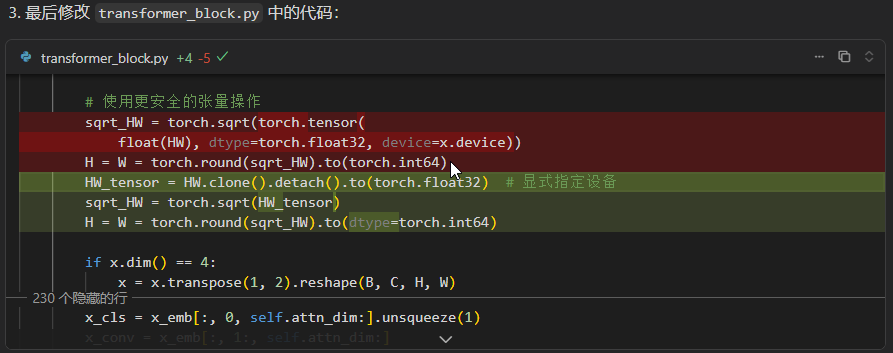

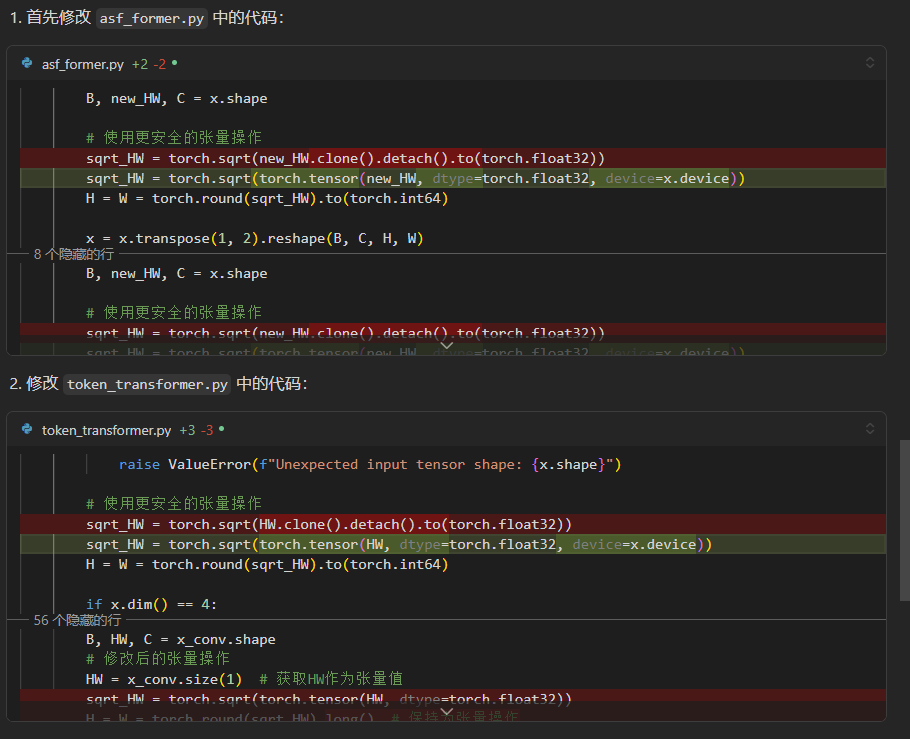

I actually tried changing it to sourceTensor.clone().detach() earlier, but then it raised the following:

C:\Users\范先生\Desktop\大三\图像处理_2419\农作物病虫害检测\Leaf_Disease\models\token_transformer.py:152: TracerWarning: torch.tensor results are registered as constants in the trace. You can safely ignore this warning if you use this function to create tensors out of constant variables that would be the same every time you call this function. In any other case, this might cause the trace to be incorrect.

sqrt_HW = torch.sqrt(torch.tensor(HW, dtype=torch.float32))

C:\Users\范先生\Desktop\大三\图像处理_2419\农作物病虫害检测\Leaf_Disease\models\token_transformer.py:152: UserWarning: To copy construct from a tensor, it is recommended to use sourceTensor.clone().detach() or sourceTensor.clone().detach().requires_grad_(True), rather than torch.tensor(sourceTensor).

sqrt_HW = torch.sqrt(torch.tensor(HW, dtype=torch.float32))

    Traceback (most recent call last):
      File "G:\anaconda\envs\pytorch\lib\site-packages\torch\jit\_trace.py", line 477, in run_mod_and_filter_tensor_outputs
        outs = wrap_retval(mod(*_clone_inputs(inputs)))
      File "G:\anaconda\envs\pytorch\lib\site-packages\torch\nn\modules\module.py", line 1736, in _wrapped_call_impl
        return self._call_impl(*args, **kwargs)
      File "G:\anaconda\envs\pytorch\lib\site-packages\torch\nn\modules\module.py", line 1747, in _call_impl
        return forward_call(*args, **kwargs)
      File "C:\Users\范先生\Desktop\大三\图像处理_2419\农作物病虫害检测\Leaf_Disease\tools\common_tools_torchmetrics_old.py", line 86, in forward
        asf_output = self.asf_former(x)
      File "G:\anaconda\envs\pytorch\lib\site-packages\torch\nn\modules\module.py", line 1736, in _wrapped_call_impl
        return self._call_impl(*args, **kwargs)
      File "G:\anaconda\envs\pytorch\lib\site-packages\torch\nn\modules\module.py", line 1747, in _call_impl
        return forward_call(*args, **kwargs)
      File "C:\Users\范先生\Desktop\大三\图像处理_2419\农作物病虫害检测\Leaf_Disease\models\asf_former.py", line 273, in forward
        x, w_attn_depth, w_conv_depth, w_attn_class, w_conv_class = self.forward_features(
      File "C:\Users\范先生\Desktop\大三\图像处理_2419\农作物病虫害检测\Leaf_Disease\models\asf_former.py", line 238, in forward_features
        x, w_attn_depth, w_conv_depth = self.tokens_to_token(x)
      File "G:\anaconda\envs\pytorch\lib\site-packages\torch\nn\modules\module.py", line 1736, in _wrapped_call_impl
        return self._call_impl(*args, **kwargs)
      File "G:\anaconda\envs\pytorch\lib\site-packages\torch\nn\modules\module.py", line 1747, in _call_impl
        return forward_call(*args, **kwargs)
      File "C:\Users\范先生\Desktop\大三\图像处理_2419\农作物病虫害检测\Leaf_Disease\models\asf_former.py", line 125, in forward
        sqrt_HW = torch.sqrt(new_HW.clone().detach().to(torch.float32))
    AttributeError: 'int' object has no attribute 'clone'

    The above exception was the direct cause of the following exception:

    Traceback (most recent call last):
      File "C:\Users\范先生\Desktop\大三\图像处理_2419\农作物病虫害检测\Leaf_Disease\train_CustomNet.py", line 159, in <module>
        writer.add_graph(custom_model, input_to_model=torch.rand(
      File "G:\anaconda\envs\pytorch\lib\site-packages\torch\utils\tensorboard\writer.py", line 841, in add_graph
        graph(model, input_to_model, verbose, use_strict_trace)
      File "G:\anaconda\envs\pytorch\lib\site-packages\torch\utils\tensorboard\_pytorch_graph.py", line 331, in graph
        trace = torch.jit.trace(model, args, strict=use_strict_trace)
      File "G:\anaconda\envs\pytorch\lib\site-packages\torch\jit\_trace.py", line 1002, in trace
        traced_func = _trace_impl(
      File "G:\anaconda\envs\pytorch\lib\site-packages\torch\jit\_trace.py", line 698, in _trace_impl
        return trace_module(
      File "G:\anaconda\envs\pytorch\lib\site-packages\torch\jit\_trace.py", line 1306, in trace_module
        _check_trace(
      File "G:\anaconda\envs\pytorch\lib\site-packages\torch\utils\_contextlib.py", line 116, in decorate_context
        return func(*args, **kwargs)
      File "G:\anaconda\envs\pytorch\lib\site-packages\torch\jit\_trace.py", line 583, in _check_trace
        fn_outs = run_mod_and_filter_tensor_outputs(func, inputs, "Python function")
      File "G:\anaconda\envs\pytorch\lib\site-packages\torch\jit\_trace.py", line 483, in run_mod_and_filter_tensor_outputs
        raise TracingCheckError(
    torch.jit._trace.TracingCheckError: Tracing failed sanity checks!
    encountered an exception while running the Python function with test inputs.
    Exception:
        'int' object has no attribute 'clone'

So I changed it back afterwards.

It still reports the first tracer warning, and I don't know how to resolve it.
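
If the input size passed to `add_graph` is fixed, the warning text itself says it can be safely ignored, so one option is simply to filter it out before tracing. The following is a minimal sketch using the standard `warnings` module, matching on the start of the message. The function name is hypothetical, the `warnings.warn` calls only simulate the message for demonstration, and the approach has not been verified against this exact PyTorch version.

```python
import re
import warnings

# Start of the warning text quoted above ("TracerWarning:" is the category, not the message).
CONSTANT_TENSOR_MSG = re.escape("torch.tensor results are registered as constants")


def silence_constant_tensor_warning() -> None:
    """Ignore only the 'registered as constants' warning; leave others visible."""
    warnings.filterwarnings("ignore", message=CONSTANT_TENSOR_MSG)


# Demonstration with simulated warnings (no model needed).
with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    silence_constant_tensor_warning()
    warnings.warn("torch.tensor results are registered as constants in the trace.")
    warnings.warn("some other warning")

print([str(w.message) for w in caught])  # ['some other warning']
```

In the training script, `silence_constant_tensor_warning()` would be called once before `writer.add_graph(...)`. This only hides the message and does not change what the trace records, and the separate copy-construct `UserWarning` has a different message, so it would need its own filter.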