import pandas as pd

import chardet

from lxml import etree

import requests

import re

import time

import warnings

warnings.filterwarnings("ignore")

def get_CI(url):

    url = 'https://www.shixi.com/search/index?key=%E5%A4%A7%E6%95%B0%E6%8D%AE&districts=&education=0&full_opportunity=0&stage=0&practice_days=0&nature=0&trades=&lang=zh_cn'

    dic = {'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.110 Safari/537.36 Edg/96.0.1054.57'}

    # Suppress urllib3 warnings (the exclamation-mark notice)

    requests.packages.urllib3.disable_warnings()

    resp = requests.get(url, headers=dic, verify=False)

    resp.encoding = chardet.detect(resp.content)['encoding']

    et = etree.HTML(resp.text)

    # Company

    company_list = et.xpath('//div[@class="job-pannel-list"]//div[@class="job-pannel-one"]//a/text()')

    company_list = [company_list[i].strip() for i in range(len(company_list)) if i % 2 != 0]

    # Position

    job_list = et.xpath('//div[@class="job-pannel-list"]//div[@class="job-pannel-one"]//a/text()')

    job_list = [job_list[i].strip() for i in range(len(job_list)) if i % 2 == 0]

    # Address

    address_list = et.xpath('//div[@class="job-pannel-two"]//a/text()')

    # Education

    degree_list = et.xpath('//div[@class="job-pannel-list"]//dd[@class="job-des"]/span/text()')

    # Salary

    salary_list = et.xpath('//div[@class="job-pannel-two"]//div[@class="company-info-des"]//text()')

    salary_list = [i.strip() for i in salary_list]

    # Posting time

    time_list = et.xpath('//div[@class="job-pannel-two"]//span[@class="job-time"]/text()')

    # Fetch the second-level (detail) pages

    deep_url_list = et.xpath('//div[@class="job-pannel-list"]//dt/a/@href')

    x = "https://www.shixi.com"

    deep_url_list = [x + i for i in deep_url_list]

    demand_list = []

    for deep_url in deep_url_list:

        rqg = requests.get(deep_url, headers=dic, verify=False)

        rqg.encoding = chardet.detect(rqg.content)['encoding']

        html = etree.HTML(rqg.text)

        discribe = html.xpath('//div[@class="container-fluid"]//div[@class="work_b"]/text()')

        demand_list.append(discribe)

    data = {'公司名': company_list, '岗位名': job_list, '地址': address_list, "学历": degree_list,

            '薪资': salary_list, '时间': time_list, '岗位需求量': demand_list}

    df = pd.DataFrame.from_dict(data, orient='index')

    return df

x = "https://www.shixi.com/search/index?key=%E5%A4%A7%E6%95%B0%E6%8D%AE&page="

url_list = [x + str(i) for i in range(1,4)]

res = pd.DataFrame(columns=['公司名', '岗位名', '地址', "学历", '薪资', '时间', '岗位需求量'])

# Loop over the result pages

for url in url_list:

    res0 = get_CI(url)

    res = pd.concat([res, res0])

    time.sleep(2)

res.to_csv('a.csv', encoding='utf_8_sig')

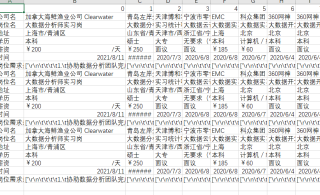

The scraped output is just the first page's data, repeated once for each page.

How can I fix this?
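
One thing that may be worth checking, as a guess from the code above: `get_CI` assigns a fixed string to `url` on its first line, which would replace whatever page URL the loop passes in. The sketch below shows that effect in isolation, with no network access. The two function names are hypothetical stand-ins, not part of the original script, and whether the site actually honours the `page=` parameter is an assumption.

```python
BASE = "https://www.shixi.com/search/index?key=%E5%A4%A7%E6%95%B0%E6%8D%AE&page="


def url_used_overwritten(url):
    # Hypothetical stand-in for get_CI: the argument is replaced by a
    # fixed string, so every call ends up with the same URL.
    url = "https://www.shixi.com/search/index?key=%E5%A4%A7%E6%95%B0%E6%8D%AE"
    return url


def url_used_as_passed(url):
    # Hypothetical variant that keeps the argument untouched.
    return url


pages = [BASE + str(i) for i in range(1, 4)]

overwritten = [url_used_overwritten(u) for u in pages]
as_passed = [url_used_as_passed(u) for u in pages]

# Count how many distinct URLs each variant would actually request.
print(len(set(overwritten)))
print(len(set(as_passed)))
```

If the first count is 1 while the second is 3, then removing the assignment inside `get_CI` (so the function requests the `url` it receives) would be the first change to try.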