python3 scrapy Request 请求时,scrapy 会自动将headers 中的参数 格式化,使其保持首字母大写,下划线等特殊符号后第一个字母大写。但现在有个问题 我要往服务端传一个headers的参数,但参数本身没有大写,经过scrapy 请求后参数变为首字母大写,服务器端根本不认这个参数,我就想问下有谁知道scrapy,Request 有不处理headers的方法吗? 但使用requests请求时,而不是用scrapy.Request时,headers 是没有变化的。

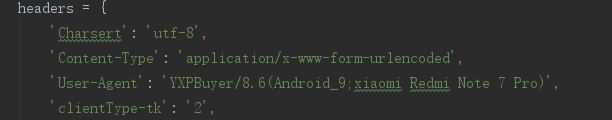

这是headers 请求之前的

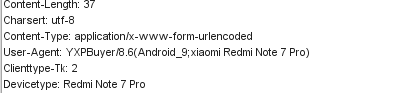

这是抓包抓到的请求头