I am trying to use TF 2.0 to build a DeepFM model that predicts whether a user likes a given movie.

The optimizer is Adam, the loss is BinaryCrossentropy, and the evaluation metric is AUC.
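For reference, AUC can be read as the probability that a randomly chosen positive example gets a higher score than a randomly chosen negative one, with ties counting as one half. The plain-Python sketch below computes that exact pairwise form. `tf.keras.metrics.AUC` approximates the same quantity using a fixed set of thresholds (200 by default), so its values can differ slightly. The function name `pairwise_auc` and the toy data are illustrative only.

```python
def pairwise_auc(labels, scores):
    """Exact ROC AUC: fraction of (positive, negative) pairs ranked correctly.

    A tie between a positive and a negative score counts as half a correct pair.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    correct = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                correct += 1.0
            elif p == n:
                correct += 0.5
    return correct / (len(pos) * len(neg))


labels = [1, 0, 1, 0, 1, 0]
print(pairwise_auc(labels, [0.9, 0.1, 0.8, 0.2, 0.7, 0.3]))  # → 1.0 (every positive ranked first)
print(pairwise_auc(labels, [0.5] * 6))                       # → 0.5 (constant scores, all ties)
```

An AUC of 1.0 means every positive is scored above every negative, while scores that carry no ranking information, such as a constant output, give exactly 0.5.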

Because the data involves movie IDs, I used shared_embedding, which means eager mode has to be turned off.
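As a conceptual illustration of what sharing an embedding means here (a plain-Python sketch, not TensorFlow's implementation of `tf.feature_column.shared_embeddings`): several ID features that all hold movie IDs look up rows of one table. A given movie therefore maps to the same vector whichever feature it appears in, and every such feature trains the same parameters. The feature names `candidate_movie_id` and `last_watched_movie_id`, the vocabulary size, and the dimension are hypothetical.

```python
import random

random.seed(0)
NUM_MOVIES = 5  # hypothetical vocabulary size
EMBED_DIM = 3   # hypothetical embedding dimension

# One table shared by every movie-ID feature (one row per movie ID).
shared_table = [[random.uniform(-0.05, 0.05) for _ in range(EMBED_DIM)]
                for _ in range(NUM_MOVIES)]


def embed(movie_id):
    """Look up a movie ID in the shared table."""
    return shared_table[movie_id]


# Hypothetical example row with two features that both contain movie IDs.
example = {"candidate_movie_id": 3, "last_watched_movie_id": 3}
candidate_vec = embed(example["candidate_movie_id"])
history_vec = embed(example["last_watched_movie_id"])
print(candidate_vec == history_vec)  # → True (same movie, same row of the table)
```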

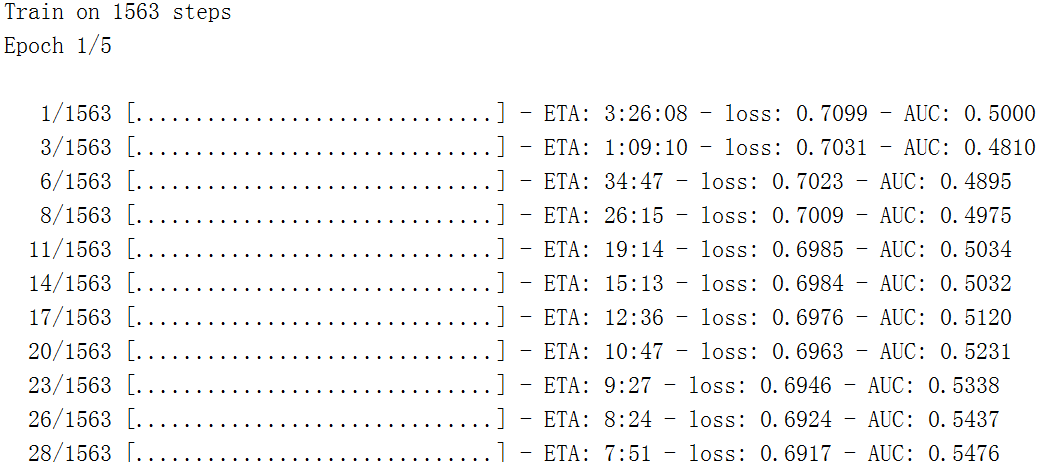

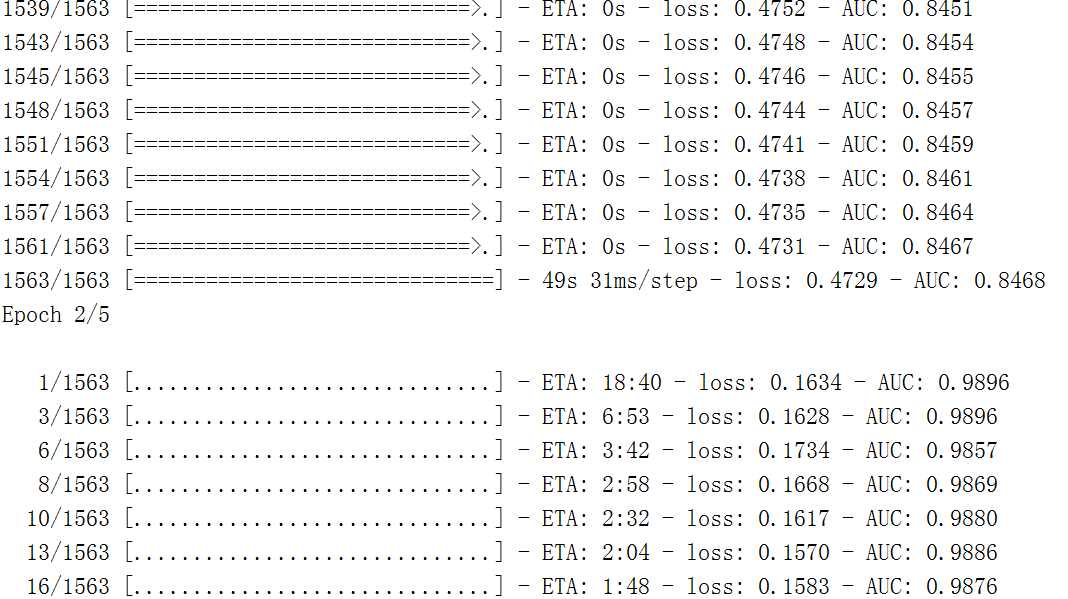

With binary_crossentropy as the loss, the training AUC quickly reaches 1. With categorical_crossentropy, however, the loss barely changes, the AUC stays at 0.5, and the accuracy keeps oscillating around 0.5.
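One mechanism that would produce the categorical_crossentropy behavior is how `tf.keras` computes categorical crossentropy on probabilities (`from_logits=False`): it first rescales the predictions so they sum to 1 along the last axis, then clips them to `[epsilon, 1 - epsilon]`. The model ends in `Dense(1, activation='sigmoid')`, so there is only one column, and after rescaling every prediction becomes exactly 1. The loss is then the same for every output and gives the network no gradient. The plain-Python sketch below mimics those steps (epsilon = 1e-7, the Keras default) next to binary crossentropy; it illustrates the arithmetic and is not the library code.

```python
import math

EPSILON = 1e-7  # tf.keras default fuzz factor


def categorical_ce(y_true, y_pred):
    """Categorical crossentropy on probabilities, following the tf.keras steps:
    rescale to sum to 1 along the class axis, clip, then -sum(y * log(p))."""
    total = sum(y_pred)
    probs = [p / total for p in y_pred]
    probs = [min(max(p, EPSILON), 1.0 - EPSILON) for p in probs]
    return -sum(t * math.log(p) for t, p in zip(y_true, probs))


def binary_ce(y_true, p):
    """Binary crossentropy for a single sigmoid output, with the same clipping."""
    p = min(max(p, EPSILON), 1.0 - EPSILON)
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1.0 - p))


# One sigmoid output per example, as produced by Dense(1, activation='sigmoid'); label = 1.
for prob in (0.1, 0.5, 0.9):
    print(f"p={prob}: categorical={categorical_ce([1], [prob]):.3g}  "
          f"binary={binary_ce(1, prob):.3f}")
```

The binary loss falls as the prediction approaches the label, which is what drives training, whereas the single-column categorical loss prints the same tiny value for every `p`. Categorical crossentropy is normally paired with a softmax output that has one unit per class and one-hot labels.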

Here is the output log when using binary_crossentropy:

Here is my code:

```python
import tensorflow as tf

# Defined earlier (not shown): feature_layer_inputs, one_order_feature_columns,
# two_order_feature_columns, initializer, regularizer, learning_rate,
# make_dataset, train_df, test_df.
# Eager execution is disabled at program start (required for shared_embedding).

one_order_feature_layer = tf.keras.layers.DenseFeatures(one_order_feature_columns)
one_order_feature_layer_outputs = one_order_feature_layer(feature_layer_inputs)

two_order_feature_layer = tf.keras.layers.DenseFeatures(two_order_feature_columns)
two_order_feature_layer_outputs = two_order_feature_layer(feature_layer_inputs)

# LR part: linear layer over the first-order features
lr_layer = tf.keras.layers.Dense(len(one_order_feature_columns), kernel_initializer=initializer)(
    one_order_feature_layer_outputs)

# FM part: DenseFeatures concatenates the embeddings into (batch, k * dim);
# reshape to (batch, k, dim), assuming all embeddings share the same dimension,
# then compute 0.5 * ((sum of v)^2 - sum of v^2) per embedding dimension
reshape = tf.reshape(two_order_feature_layer_outputs,
                     [-1, len(two_order_feature_columns), two_order_feature_columns[0].dimension])
sum_square = tf.square(tf.reduce_sum(reshape, axis=1))
square_sum = tf.reduce_sum(tf.square(reshape), axis=1)
fm_layers = tf.multiply(0.5, tf.subtract(sum_square, square_sum))

# DNN part: three SELU hidden layers on the same embeddings, followed by dropout
dnn_hidden_layer_1 = tf.keras.layers.Dense(64, activation='selu', kernel_initializer=initializer,
                                           kernel_regularizer=regularizer)(two_order_feature_layer_outputs)
dnn_hidden_layer_2 = tf.keras.layers.Dense(64, activation='selu', kernel_initializer=initializer,
                                           kernel_regularizer=regularizer)(dnn_hidden_layer_1)
dnn_hidden_layer_3 = tf.keras.layers.Dense(64, activation='selu', kernel_initializer=initializer,
                                           kernel_regularizer=regularizer)(dnn_hidden_layer_2)
dnn_dropout = tf.keras.layers.Dropout(0.5, seed=29)(dnn_hidden_layer_3)

# Concatenate the three parts and output a single sigmoid probability
concatenate_layer = tf.keras.layers.concatenate([lr_layer, fm_layers, dnn_dropout])
out_layer = tf.keras.layers.Dense(1, activation='sigmoid')(concatenate_layer)

model = tf.keras.Model(inputs=[v for v in feature_layer_inputs.values()], outputs=out_layer)
model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
              loss=tf.keras.losses.BinaryCrossentropy(),
              metrics=['AUC'])
# tf.keras.utils.plot_model(model, 'test.png', show_shapes=True)

train_ds = make_dataset(train_df, buffer_size=None, shuffle=True)
test_ds = make_dataset(test_df)

# With eager mode off, variables and the lookup tables used by the
# categorical feature columns must be initialized explicitly
with tf.compat.v1.Session() as sess:
    sess.run([tf.compat.v1.global_variables_initializer(), tf.compat.v1.tables_initializer()])
    model.fit(train_ds, epochs=5)
    loss, auc = model.evaluate(test_ds)
    print("AUC", auc)
```
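The FM part relies on the identity that, for each embedding dimension f, the sum over field pairs i < j of v_if · v_jf equals 0.5 · ((Σ_i v_if)² − Σ_i v_if²), which costs O(k · dim) instead of O(k² · dim). Note that `fm_layers` keeps the per-dimension result, shape `(batch, dim)`, rather than summing it to a scalar. The plain-Python sketch below checks the identity for a single example, starting from a flat vector laid out like `two_order_feature_layer_outputs` (k fields of equal dimension, concatenated). The sizes and values are made up.

```python
import random

random.seed(42)
K, DIM = 4, 3  # hypothetical: 4 second-order fields, embedding dimension 3

# One example's flat DenseFeatures-style output: K embeddings of size DIM, concatenated.
flat = [random.uniform(-1.0, 1.0) for _ in range(K * DIM)]

# Equivalent of tf.reshape(..., [-1, K, DIM]) for a single example.
fields = [flat[i * DIM:(i + 1) * DIM] for i in range(K)]

# Trick used in the model: per dimension f, 0.5 * ((sum_i v_if)^2 - sum_i v_if^2).
sum_square = [sum(v[f] for v in fields) ** 2 for f in range(DIM)]
square_sum = [sum(v[f] ** 2 for v in fields) for f in range(DIM)]
fm_vector = [0.5 * (a - b) for a, b in zip(sum_square, square_sum)]

# Direct computation: sum over all field pairs i < j of the elementwise product v_i * v_j.
pairwise = [0.0] * DIM
for i in range(K):
    for j in range(i + 1, K):
        for f in range(DIM):
            pairwise[f] += fields[i][f] * fields[j][f]

print(fm_vector)
print(pairwise)
print(sum(fm_vector))  # classic FM second-order term: sum of <v_i, v_j> over i < j
```

Summing `fm_vector` over its DIM entries gives the scalar second-order term of the original FM formulation; the model above instead passes the DIM-dimensional vector to the final `Dense` layer together with the LR and DNN outputs.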