The error is: `Error when checking target: expected activation_1 to have 3 dimensions, but got array with shape (32, 10)`
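"Checking target" refers to the labels (`y`), not the input images. Before training, Keras 2 compares the number of dimensions of each label batch with the model's output shape. The numpy sketch below is a simplified, hypothetical re-implementation of that check (`check_target` is not a Keras function), using the shapes from this post: one-hot labels from `flow_from_directory(class_mode='categorical')` against a 3-D model output.

```python
import numpy as np

def check_target(y, expected_shape, layer_name="activation_1"):
    """Simplified version of the check behind the error message: the label
    array must have as many dimensions as the model output.
    expected_shape includes the batch axis as None, like model.output_shape."""
    if y.ndim != len(expected_shape):
        raise ValueError(
            f"expected {layer_name} to have {len(expected_shape)} dimensions, "
            f"but got array with shape {y.shape}"
        )

# One batch of labels as produced with class_mode='categorical':
# 32 images, each labelled with a one-hot vector over 10 classes.
y_batch = np.eye(10)[np.arange(32) % 10]
print(y_batch.shape)  # (32, 10)

try:
    check_target(y_batch, (None, 10, 4096))  # output shape of the SegNet model
except ValueError as err:
    print(err)

check_target(y_batch, (None, 10))  # a plain classifier output: no error
```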

Keras with the TensorFlow backend.

The code is as follows:

# coding=utf-8

import matplotlib

from PIL import Image

matplotlib.use("Agg")

import matplotlib.pyplot as plt

import argparse

import numpy as np

from keras.models import Sequential

from keras.layers import Conv2D, MaxPooling2D, UpSampling2D, BatchNormalization, Reshape, Permute, Activation, Flatten

# from keras.utils.np_utils import to_categorical

# from keras.preprocessing.image import img_to_array

from keras.models import Model

from keras.layers import Input

from keras.callbacks import ModelCheckpoint

# from sklearn.preprocessing import LabelBinarizer

# from sklearn.model_selection import train_test_split

# import pickle

import matplotlib.pyplot as plt

import os

from keras.preprocessing.image import ImageDataGenerator

train_datagen = ImageDataGenerator(

rescale=1./255,

shear_range=0.2,

zoom_range=0.2,

horizontal_flip=True)

test_datagen = ImageDataGenerator(rescale=1./255)

path = '/tmp/2'

os.chdir(path)

training_set = train_datagen.flow_from_directory(

'trainset',

target_size=(64,64),

batch_size=32,

class_mode='categorical',

shuffle=True)

test_set = test_datagen.flow_from_directory(

'testset',

target_size=(64,64),

batch_size=32,

class_mode='categorical',

shuffle=True)

def SegNet():

model = Sequential()

# encoder

model.add(Conv2D(64, (3, 3), strides=(1, 1), input_shape=(64, 64, 3), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(Conv2D(64, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(MaxPooling2D(pool_size=(2, 2)))

# (128,128)

model.add(Conv2D(128, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(Conv2D(128, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(MaxPooling2D(pool_size=(2, 2)))

# (64,64)

model.add(Conv2D(256, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(Conv2D(256, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(Conv2D(256, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(MaxPooling2D(pool_size=(2, 2)))

# (32,32)

model.add(Conv2D(512, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(Conv2D(512, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(Conv2D(512, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(MaxPooling2D(pool_size=(2, 2)))

# (16,16)

model.add(Conv2D(512, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(Conv2D(512, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(Conv2D(512, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(MaxPooling2D(pool_size=(2, 2)))

# (8,8)

# decoder

model.add(UpSampling2D(size=(2, 2)))

# (16,16)

model.add(Conv2D(512, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(Conv2D(512, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(Conv2D(512, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(UpSampling2D(size=(2, 2)))

# (32,32)

model.add(Conv2D(512, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(Conv2D(512, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(Conv2D(512, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(UpSampling2D(size=(2, 2)))

# (64,64)

model.add(Conv2D(256, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(Conv2D(256, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(Conv2D(256, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(UpSampling2D(size=(2, 2)))

# (128,128)

model.add(Conv2D(128, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(Conv2D(128, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(UpSampling2D(size=(2, 2)))

# (256,256)

model.add(Conv2D(64, (3, 3), strides=(1, 1), input_shape=(64, 64, 3), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(Conv2D(64, (3, 3), strides=(1, 1), padding='same', activation='relu'))

model.add(BatchNormalization())

model.add(Conv2D(10, (1, 1), strides=(1, 1), padding='valid', activation='relu'))

model.add(BatchNormalization())

model.add(Reshape((64*64, 10)))

# axis=1和axis=2互换位置,等同于np.swapaxes(layer,1,2)

model.add(Permute((2, 1)))

#model.add(Flatten())

model.add(Activation('softmax'))

model.compile(loss='categorical_crossentropy', optimizer='sgd', metrics=['accuracy'])

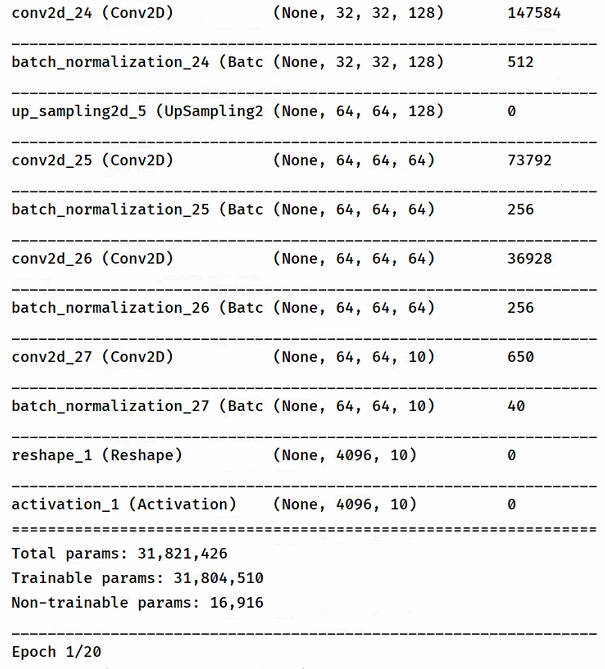

model.summary()

return model

def main():

model = SegNet()

filepath = "/tmp/2/weights.best.hdf5"

checkpoint = ModelCheckpoint(filepath, monitor='val_acc', verbose=1, save_best_only=True, mode='max')

callbacks_list = [checkpoint]

history = model.fit_generator(

training_set,

steps_per_epoch=(training_set.samples / 32),

epochs=20,

callbacks=callbacks_list,

validation_data=test_set,

validation_steps=(test_set.samples / 32))

# Plotting the Loss and Classification Accuracy

model.metrics_names

print(history.history.keys())

# "Accuracy"

plt.plot(history.history['acc'])

plt.plot(history.history['val_acc'])

plt.title('Model Accuracy')

plt.ylabel('Accuracy')

plt.xlabel('Epoch')

plt.legend(['train', 'test'], loc='upper left')

plt.show()

# "Loss"

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.title('Model loss')

plt.ylabel('Loss')

plt.xlabel('Epoch')

plt.legend(['train', 'test'], loc='upper left')

plt.show()

if __name__ == '__main__':

main()
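Following the shapes layer by layer shows where the 3-D output comes from. The pure-Python sketch below (the name `trace_segnet_shapes` is made up for illustration; it does not build a Keras model) tracks only the layers that change the shape: five 2×2 poolings take the 64×64 input down to 2×2, five 2×2 upsamplings bring it back to 64×64, and `Reshape` plus `Permute` turn the last feature map into (10, 4096). With the batch axis the model output is therefore (None, 10, 4096), while the generator supplies labels of shape (32, 10).

```python
def trace_segnet_shapes(h=64, w=64, num_classes=10):
    """Return the output shape (without the batch axis) at the end of each stage
    of the SegNet() model above, plus the shapes after Reshape and Permute.

    Conv2D with padding='same' and strides=(1, 1) keeps H and W, so only the
    pooling and upsampling layers change the spatial size.
    """
    stages = []
    # encoder: (filters, number of Conv2D layers); each stage ends with MaxPooling2D((2, 2))
    for filters, _n_convs in [(64, 2), (128, 2), (256, 3), (512, 3), (512, 3)]:
        h, w = h // 2, w // 2
        stages.append(("encoder", (h, w, filters)))
    # decoder: each stage starts with UpSampling2D((2, 2)), followed by Conv2D layers
    for filters in [512, 512, 256, 128, 64]:
        h, w = h * 2, w * 2
        stages.append(("decoder", (h, w, filters)))
    # Conv2D(10, (1, 1)) -> (h, w, 10); Reshape((64*64, 10)) -> (h*w, 10)
    reshaped = (h * w, num_classes)
    # Permute((2, 1)) swaps the two non-batch axes -> (10, h*w)
    permuted = (num_classes, h * w)
    return stages, reshaped, permuted


stages, reshaped, permuted = trace_segnet_shapes()
for name, shape in stages:
    print(name, shape)
print("after Reshape:", reshaped)  # after Reshape: (4096, 10)
print("after Permute:", permuted)  # after Permute: (10, 4096)
print("model output:", (None,) + permuted)  # model output: (None, 10, 4096)
```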

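The main issue is here: SegNet has no fully connected layer, so shouldn't its final output be a label map with the same size as the input image? Could someone explain how to fix this?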
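Yes: a SegNet-style decoder predicts one class per pixel, so training it requires per-pixel label masks, which a folder-per-class layout does not provide. A minimal numpy sketch, assuming a hypothetical integer mask per image (values 0 to 9), shows the target shape that would match the `Reshape((64*64, 10))` output, and why `Permute((2, 1))` puts the softmax on the wrong axis (Keras's `softmax` activation normalises over the last axis). `mask_to_target` and `softmax_last_axis` are illustrative helpers, not Keras functions.

```python
import numpy as np

NUM_CLASSES = 10
H, W = 64, 64

def mask_to_target(mask, num_classes=NUM_CLASSES):
    """Turn an integer label mask (H, W) into a one-hot target (H*W, num_classes),
    the layout of the model output right after Reshape((64*64, 10))."""
    flat = mask.reshape(-1)            # (H*W,)
    return np.eye(num_classes)[flat]   # (H*W, num_classes)

def softmax_last_axis(logits):
    z = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

rng = np.random.default_rng(0)
mask = rng.integers(0, NUM_CLASSES, size=(H, W))  # stand-in for a real label mask
target = mask_to_target(mask)
print(target.shape)  # (4096, 10)

logits = rng.normal(size=(H * W, NUM_CLASSES))
probs = softmax_last_axis(logits)              # one class distribution per pixel
print(np.allclose(probs.sum(axis=1), 1.0))     # True

# After Permute((2, 1)) the tensor is (10, 4096): the softmax then normalises
# over the 4096 pixels of each class instead of over the 10 classes of a pixel.
probs_permuted = softmax_last_axis(logits.T)
print(np.allclose(probs_permuted.sum(axis=0), 1.0))  # False
```

For real segmentation, the `Permute` layer would be dropped and the data pipeline would have to yield (image, mask) pairs; `flow_from_directory` with `class_mode='categorical'` only yields one label per image.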

The input images are 64×64 with 3 channels, 10 classes in total, stored in two folders, `trainset` and `testset`.
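Because the data is laid out as one folder per class, each image has a single label, so the task is image classification rather than segmentation and the targets stay (32, 10). A minimal sketch, assuming the same Keras version as in the question, keeps the SegNet encoder and replaces the decoder with `GlobalAveragePooling2D` and a `Dense(10, activation='softmax')` head, so the output shape becomes (None, 10). `conv_block` and `build_classifier` are hypothetical helper names; the generators and `fit_generator` call can stay as they are.

```python
from keras.layers import (BatchNormalization, Conv2D, Dense,
                          GlobalAveragePooling2D, Input, MaxPooling2D)
from keras.models import Model

def conv_block(x, filters, n_convs):
    # n_convs x (Conv2D + BatchNormalization), then halve H and W
    for _ in range(n_convs):
        x = Conv2D(filters, (3, 3), padding='same', activation='relu')(x)
        x = BatchNormalization()(x)
    return MaxPooling2D(pool_size=(2, 2))(x)

def build_classifier(input_shape=(64, 64, 3), num_classes=10):
    inputs = Input(shape=input_shape)
    x = inputs
    # same encoder stages as SegNet(): 64x64 -> 2x2 after five poolings
    for filters, n_convs in [(64, 2), (128, 2), (256, 3), (512, 3), (512, 3)]:
        x = conv_block(x, filters, n_convs)
    x = GlobalAveragePooling2D()(x)                        # (2, 2, 512) -> (512,)
    outputs = Dense(num_classes, activation='softmax')(x)  # one distribution per image
    model = Model(inputs, outputs)
    model.compile(loss='categorical_crossentropy', optimizer='sgd', metrics=['accuracy'])
    return model

model = build_classifier()
print(model.output_shape)  # (None, 10)
```

Global average pooling is used here instead of `Flatten` to keep the parameter count small; `Flatten()` followed by a `Dense` layer would also give a (None, 10) output that matches the one-hot labels.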