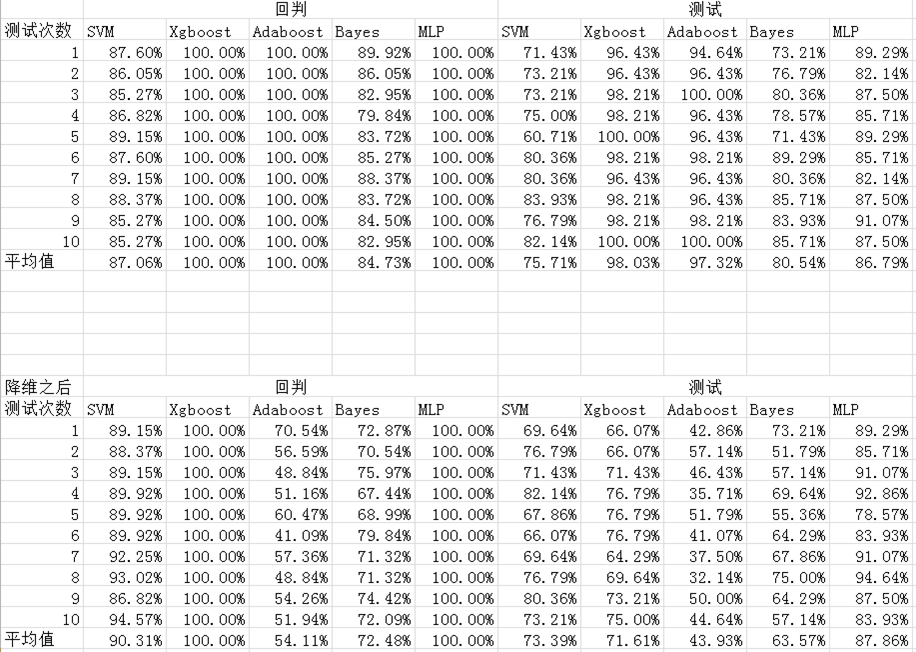

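I applied five methods to both the raw data and the data after principal component analysis (PCA), and ran resubstitution (classifying the training samples back) and prediction on each. SVM and the neural network changed little between the two, but the success rates of XGBoost, AdaBoost, and Bayes actually dropped. Is this because these methods are not suited to PCA dimensionality reduction?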

Does PCA dimensionality reduction affect machine-learning accuracy?
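The comparison described in the question can be set up so that scaling and PCA are refit inside each cross-validation training fold, which keeps the test folds out of the projection. Below is a minimal sketch with scikit-learn under several assumptions: the built-in breast-cancer dataset stands in for the asker's data, `GradientBoostingClassifier` stands in for XGBoost so that only scikit-learn is needed, `GaussianNB` stands in for "Bayes", and `n_components=5` is an arbitrary illustrative value. `build_models` and `compare` are hypothetical helper names.

```python
from sklearn.base import clone
from sklearn.datasets import load_breast_cancer
from sklearn.decomposition import PCA
from sklearn.ensemble import AdaBoostClassifier, GradientBoostingClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def build_models():
    """Five classifiers roughly matching the question (hypothetical choices).

    GradientBoostingClassifier is a stand-in for XGBoost.
    """
    return {
        "SVM": SVC(),
        "NeuralNet": MLPClassifier(max_iter=2000, random_state=0),
        "GradientBoosting": GradientBoostingClassifier(random_state=0),
        "AdaBoost": AdaBoostClassifier(random_state=0),
        "GaussianNB": GaussianNB(),
    }


def compare(X, y, n_components=5, cv_splits=5):
    """Mean cross-validated accuracy on raw features vs. PCA features."""
    cv = StratifiedKFold(n_splits=cv_splits, shuffle=True, random_state=0)
    results = {}
    for name, model in build_models().items():
        # The scaler and PCA live inside the pipeline, so cross_val_score
        # refits them on each training fold only (no test-fold leakage).
        raw = make_pipeline(StandardScaler(), clone(model))
        reduced = make_pipeline(
            StandardScaler(), PCA(n_components=n_components), clone(model)
        )
        results[name] = (
            cross_val_score(raw, X, y, cv=cv).mean(),
            cross_val_score(reduced, X, y, cv=cv).mean(),
        )
    return results


if __name__ == "__main__":
    X, y = load_breast_cancer(return_X_y=True)
    for name, (raw_acc, pca_acc) in compare(X, y).items():
        print(f"{name:>16}: raw={raw_acc:.3f}  pca={pca_acc:.3f}")
```

Cross-validated accuracy is used here instead of resubstitution accuracy because the latter scores the model on the very samples it was trained on and tends to be optimistic. Varying `n_components` shows how much of any drop comes from the discarded components.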


threenewbee 2019-07-18 19:27

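It depends on the correlation structure of your data. PCA keeps the components that capture most of the variation and drops the weaker ones. Those dropped components still carried some information, however little, and it is lost with them, so accuracy will usually suffer to some degree. Keep in mind that PCA is unsupervised: it ranks directions by variance, not by how useful they are for predicting the label, so a discarded low-variance component can still be discriminative.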
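The information-loss point can be made concrete with a small synthetic case in which the label lives entirely in a low-variance direction. The sketch below is pure Python and rests on illustrative assumptions: the data generator, the noise scales, and all function names are hypothetical, and Gaussian naive Bayes stands in for the "Bayes" model from the question. Keeping only the first principal component throws away exactly the direction that separates the classes.

```python
import math
import random


def make_data(n, seed=0):
    """Hypothetical 2-D data: x1 is high-variance noise, x2 carries the label."""
    rng = random.Random(seed)
    X, y = [], []
    for i in range(n):
        label = i % 2
        x1 = rng.gauss(0.0, 10.0)                            # large variance, unrelated to label
        x2 = (1.0 if label else -1.0) + rng.gauss(0.0, 0.3)  # small variance, decides the label
        X.append([x1, x2])
        y.append(label)
    return X, y


def top_component(X):
    """Mean and leading eigenvector (PC1) of the 2x2 sample covariance."""
    n = len(X)
    m1 = sum(p[0] for p in X) / n
    m2 = sum(p[1] for p in X) / n
    a = sum((p[0] - m1) ** 2 for p in X) / (n - 1)
    c = sum((p[1] - m2) ** 2 for p in X) / (n - 1)
    b = sum((p[0] - m1) * (p[1] - m2) for p in X) / (n - 1)
    lam = (a + c) / 2 + math.sqrt(((a - c) / 2) ** 2 + b ** 2)  # largest eigenvalue
    vx, vy = lam - c, b                                          # solves (C - lam*I) v = 0
    norm = math.hypot(vx, vy)
    if norm < 1e-12:  # b == 0 and x2 has the larger variance
        vx, vy, norm = 0.0, 1.0, 1.0
    return (m1, m2), (vx / norm, vy / norm)


def project(X, mean, v):
    """Keep only each point's PC1 score, as a 1-D feature vector."""
    return [[(p[0] - mean[0]) * v[0] + (p[1] - mean[1]) * v[1]] for p in X]


def fit_gnb(X, y):
    """Gaussian naive Bayes: per-class log prior, feature means and variances."""
    params = {}
    for label in set(y):
        rows = [x for x, t in zip(X, y) if t == label]
        d = len(rows[0])
        means = [sum(r[j] for r in rows) / len(rows) for j in range(d)]
        variances = [sum((r[j] - means[j]) ** 2 for r in rows) / len(rows) + 1e-9 for j in range(d)]
        params[label] = (math.log(len(rows) / len(X)), means, variances)
    return params


def predict_gnb(params, X):
    """Pick the class with the highest log posterior for each sample."""
    preds = []
    for x in X:
        scores = {
            label: prior + sum(
                -0.5 * math.log(2 * math.pi * var) - (xi - mu) ** 2 / (2 * var)
                for xi, mu, var in zip(x, means, variances)
            )
            for label, (prior, means, variances) in params.items()
        }
        preds.append(max(scores, key=scores.get))
    return preds


def accuracy(pred, truth):
    return sum(p == t for p, t in zip(pred, truth)) / len(truth)


def run_demo():
    X_train, y_train = make_data(400, seed=0)
    X_test, y_test = make_data(400, seed=1)
    raw_acc = accuracy(predict_gnb(fit_gnb(X_train, y_train), X_test), y_test)
    mean, v = top_component(X_train)  # PCA is fit without looking at y
    Z_train, Z_test = project(X_train, mean, v), project(X_test, mean, v)
    pca_acc = accuracy(predict_gnb(fit_gnb(Z_train, y_train), Z_test), y_test)
    return raw_acc, pca_acc


if __name__ == "__main__":
    raw_acc, pca_acc = run_demo()
    print(f"raw 2-D features: {raw_acc:.3f}")
    print(f"PC1 only:         {pca_acc:.3f}")
```

On this data the two-feature model should score close to 1.0, while the PC1-only model should sit near chance (about 0.5). Real data is rarely this extreme, but the same mechanism can produce a partial drop after PCA.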

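Looked at from another angle, though: if the size of the computation shrinks substantially, training becomes more efficient, and within a fixed budget of time and cost that efficiency can actually let you reach a better result. Think of digging for gold: do you find more by first surveying where the ore is and then digging, or by digging aimlessly? It depends on how you frame the question: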

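If you had unlimited time and no cost limits, you could ignore where the ore is and dig up the entire planet. That would certainly yield the most gold, because even ground without an ore body contains tiny traces of it, and there is even gold dissolved in seawater.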

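In reality, though, we do not have unlimited miners or unlimited time, so for a given cost, locating the ore first and digging at the mine will certainly produce more gold than digging at random.

This answer was accepted by the asker as the best answer.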
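The trade-off described in this answer, giving up a little information in exchange for a much smaller problem, is commonly controlled by keeping just enough components to reach a cumulative explained-variance threshold. A minimal pure-Python sketch follows. The function name, the 0.95 default, and the example eigenvalues are illustrative assumptions; in practice the eigenvalues would come from the fitted PCA (for example scikit-learn's `explained_variance_` attribute).

```python
def components_for_variance(eigenvalues, threshold=0.95):
    """Smallest k whose top-k eigenvalues explain at least `threshold` of the total variance."""
    if not 0.0 < threshold <= 1.0:
        raise ValueError("threshold must be in (0, 1]")
    ordered = sorted(eigenvalues, reverse=True)
    total = sum(ordered)
    running = 0.0
    for k, value in enumerate(ordered, start=1):
        running += value
        if running / total >= threshold:
            return k
    return len(ordered)  # guards against floating-point shortfall near 1.0


if __name__ == "__main__":
    # Hypothetical eigenvalues of a 5-feature covariance matrix.
    eigs = [5.0, 3.0, 1.0, 0.5, 0.5]
    for t in (0.5, 0.8, 0.9, 0.99):
        k = components_for_variance(eigs, t)
        print(f"keep {k} of {len(eigs)} components for {t:.0%} of the variance")
```

A lower threshold means a smaller, faster problem but more discarded information. scikit-learn's `PCA` can make a similar choice itself when `n_components` is a float between 0 and 1 and `svd_solver="full"`.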