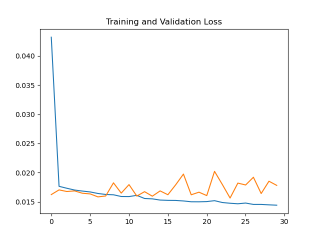

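I'm training a prediction model with tensorflow.keras, and val_loss behaves very strangely.

The training loss decreases normally, but val_loss is already very low at the start and keeps oscillating.

See the figure below: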

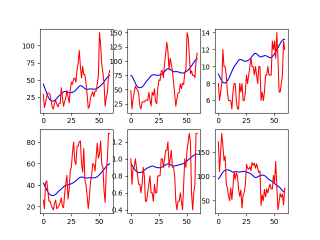

The final predictions are also poor, as shown below.

My model is as follows.

Xtrain.shape, Xtest.shape, Ytrain.shape, and Ytest.shape are (2036, 60, 6), (400, 60, 6), (2036, 60, 6), and (400, 60, 6).

from tensorflow import keras

model = keras.models.Sequential()
model.add(keras.layers.LSTM(40, input_shape=Xtrain.shape[1:], return_sequences=True))
model.add(keras.layers.Dropout(0.1))
model.add(keras.layers.LSTM(30, return_sequences=True))
# model.add(keras.layers.Dropout(0.5))
model.add(keras.layers.Dropout(0.1))
model.add(keras.layers.LSTM(40, return_sequences=True))
model.add(keras.layers.Dropout(0.1))
model.add(keras.layers.LSTM(40, return_sequences=True))
# Batch normalization: standardizes each mini-batch toward zero mean and unit standard deviation
model.add(keras.layers.BatchNormalization())
model.add(keras.layers.TimeDistributed(keras.layers.Dense(Ytrain.shape[2])))
# `learning_rate` replaces the deprecated `lr` argument
model.compile(optimizer=keras.optimizers.Adam(learning_rate=0.0001, amsgrad=True), loss='mse')  # mae: mean_absolute_error
model.summary()

history = model.fit(
    Xtrain, Ytrain,
    validation_data=(Xtest, Ytest),
    batch_size=32,
    epochs=30,
    verbose=1)
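To put numbers on "val_loss starts low and keeps oscillating", the two curves can be summarized from the `history.history` dict that `fit` returns. This is only a minimal sketch: the helper name `summarize_history` and the choice of "mean absolute epoch-to-epoch change" as an oscillation measure are my own assumptions, not part of Keras.

```python
def summarize_history(hist):
    """Summarize loss curves from a dict shaped like Keras's History.history.

    `hist` must contain the keys "loss" and "val_loss" (one value per epoch).
    The helper and its metrics are illustrative, not a Keras API.
    """
    loss = list(hist["loss"])
    val = list(hist["val_loss"])

    # 0-based epochs where validation loss is below training loss.
    val_below = [i for i, (l, v) in enumerate(zip(loss, val)) if v < l]

    def mean_abs_step(xs):
        # Average size of the epoch-to-epoch change: a rough oscillation measure.
        if len(xs) < 2:
            return 0.0
        return sum(abs(b - a) for a, b in zip(xs, xs[1:])) / (len(xs) - 1)

    return {
        "epochs_val_below_train": val_below,
        "loss_mean_abs_step": mean_abs_step(loss),
        "val_loss_mean_abs_step": mean_abs_step(val),
    }
```

After training, it would be called as `summarize_history(history.history)`.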

The prediction code is as follows.

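import matplotlib.pyplot as plt

predict = model.predict(Xtest)
# scalar is the scaler fitted during preprocessing (not shown here)
# Only the first test window (index 0) is inverse-transformed and plotted
predict = scalar.inverse_transform(predict[0])
Ytesting = scalar.inverse_transform(Ytest[0])

# One subplot per feature: prediction in blue, ground truth in red
for i in range(6):
    plt.subplot(2, 3, i + 1)
    plt.plot(predict[:, i], color='blue')
    plt.plot(Ytesting[:, i], color='red')

plt.show()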
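The plot above only covers the first test window, so it may not represent all 400 windows. A minimal sketch of a per-feature error over every window, assuming the true and predicted arrays both have shape (windows, timesteps, features); `per_feature_rmse` is a hypothetical helper name, and the error is computed in whatever scale the inputs are in:

```python
import numpy as np

def per_feature_rmse(y_true, y_pred):
    """Root-mean-square error per feature over all windows and timesteps.

    Both inputs are expected to have shape (windows, timesteps, features).
    """
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    if y_true.shape != y_pred.shape:
        raise ValueError("y_true and y_pred must have the same shape")
    n_features = y_true.shape[-1]
    # Flatten windows and timesteps together, keeping features as columns.
    err = (y_pred - y_true).reshape(-1, n_features)
    return np.sqrt(np.mean(err ** 2, axis=0))
```

Here it could be called as `per_feature_rmse(Ytest, model.predict(Xtest))`, which would return one value per feature (6 in this case).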

Is this a problem with the model architecture, with the hyperparameters, or with the dataset used for training?