3 answers

ricky_lyq 2018-06-26 12:17

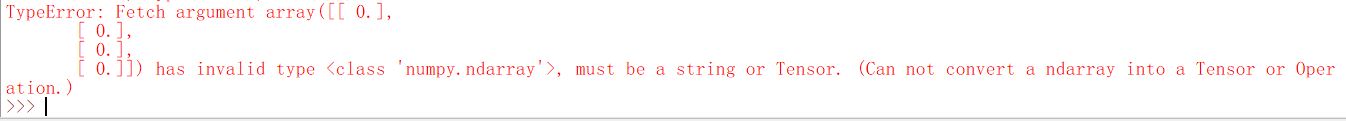

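Types that can be fed: Python scalars, strings, lists, numpy ndarrays, or TensorHandles.

Types that can be fetched: Tensor, string.

When running the graph, a tensor cannot be fed directly. Fetch its value with sess.run() first, then feed that value back in.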
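A minimal sketch of that fetch-then-feed pattern, assuming TensorFlow 1.x graph mode (reached here through the `tensorflow.compat.v1` shim so it also runs on TensorFlow 2.x). The names `source`, `x`, and `y` are hypothetical and only illustrate the idea; they are not from the original question.

```python
import numpy as np
import tensorflow.compat.v1 as tf

tf.disable_eager_execution()  # use TF1-style graphs and sessions

# Hypothetical graph: `source` is a tensor whose value we want to reuse,
# `x` is a placeholder that expects a fed value.
source = tf.constant([1.0, 2.0, 3.0]) * 2.0
x = tf.placeholder(tf.float32, shape=[3])
y = x + 1.0

with tf.Session() as sess:
    # Fetch: sess.run() evaluates the tensor and returns a numpy ndarray.
    source_value = sess.run(source)
    # Feed: pass the ndarray (a feedable type), not the tf.Tensor itself.
    result = sess.run(y, feed_dict={x: source_value})

print(type(source_value))
print(result)
```

Passing `source` itself as the value in `feed_dict` would be rejected, since a `tf.Tensor` is not among the feedable types listed above. Evaluating it first turns it into an ndarray, which is.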