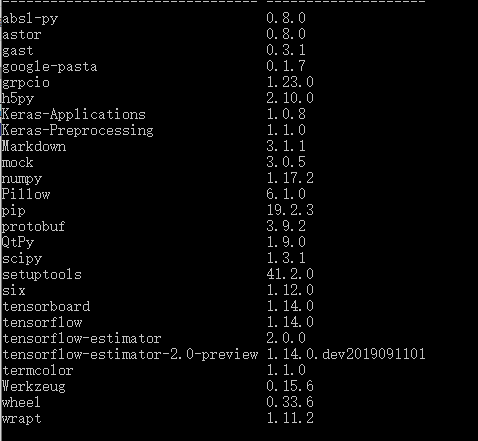

How to fix: cannot import name 'dense_features' from 'tensorflow.python.feature_column'
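Below is a minimal diagnostic sketch. It assumes the error comes from a version mismatch between TensorFlow and a separately installed `keras` package. That is a commonly reported cause, but nothing on this page confirms it. The script prints the installed versions and checks whether the private module `tensorflow.python.feature_column.dense_features` can be located. The helper names (`installed_version`, `module_available`, `diagnose`) and the list of candidate TensorFlow distribution names are hypothetical choices made for illustration.

```python
import importlib.util
from importlib import metadata

# Hypothetical list of pip distribution names under which TensorFlow may be installed.
TF_DISTRIBUTIONS = ("tensorflow", "tensorflow-cpu", "tensorflow-gpu", "tensorflow-macos")


def installed_version(*dist_names):
    """Return the version of the first installed distribution in dist_names, else None."""
    for name in dist_names:
        try:
            return metadata.version(name)
        except metadata.PackageNotFoundError:
            continue
    return None


def module_available(module_name):
    """Return True if module_name can be located.

    For a dotted name, find_spec imports the parent packages first, so a missing
    or broken parent (e.g. TensorFlow not installed) is reported as False.
    """
    try:
        return importlib.util.find_spec(module_name) is not None
    except (ImportError, ValueError):
        return False


def diagnose():
    """Collect the versions and the module check relevant to this ImportError."""
    return {
        "tensorflow": installed_version(*TF_DISTRIBUTIONS),
        "keras": installed_version("keras"),
        "dense_features_module": module_available(
            "tensorflow.python.feature_column.dense_features"
        ),
    }


if __name__ == "__main__":
    for key, value in diagnose().items():
        print(f"{key}: {value}")
```

If the reported `tensorflow` and `keras` versions do not match, aligning them is usually the first thing to try. Code that imports from `tensorflow.python.*` depends on internal TensorFlow paths. Those paths are private and can change between releases, so public APIs are the safer choice where one is available.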
